Cluster and Correspondence Analysis in R

Martin Schweinberger

31 May, 2023

Introduction

This tutorial introduces classification and clustering using R. Cluster analyses fall within the domain of classification methods which are used to find groups or patterns in data or to predict group membership. As such, they are widely used and applied in machine learning. For linguists, classification is not only common when it comes to phylogenetics but also in annotation-based procedures such as part-of-speech tagging and syntactic parsing.

This tutorial is aimed at intermediate users of R with the aim of showcasing how to performing and visualizing the results of cluster and correspondence analyses in R. The aim is not to provide a fully-fledged analysis but rather to show and exemplify selected useful methods associated with cluster and correspondence analyses.

To be able to follow this tutorial, we suggest you check out and

familiarize yourself with the content of the following R

Basics and Data Science Basics tutorials:

- Getting started with R

- Basic Concepts in Quantitative Research

- Loading, saving, and generating data in R

- Handling tables in R

Click here1 to download the entire R Notebook for this tutorial.

A more elaborate and highly recommendable introduction to cluster analysis is Kassambara (2017). Other very useful resources are, e.g., King (2015); Kettenring (2006); Romesburg (2004); and Blashfield and Aldenderfer (1988).

Preparation and session set up

This tutorial is based on R. If you have not installed R or are new to it, you will find an introduction to and more information how to use R here. For this tutorials, we need to install certain packages from an R library so that the scripts shown below are executed without errors. Before turning to the code below, please install the packages by running the code below this paragraph. If you have already installed the packages mentioned below, then you can skip ahead and ignore this section. To install the necessary packages, simply run the following code - it may take some time (between 1 and 5 minutes to install all of the libraries so you do not need to worry if it takes some time).

# set options

options(stringsAsFactors = F) # no automatic data transformation

options("scipen" = 100, "digits" = 4) # suppress math annotation

# install packages

install.packages("cluster")

install.packages("factoextra")

install.packages("seriation")

install.packages("pvclust")

install.packages("ape")

install.packages("vcd")

install.packages("exact2x2")

install.packages("flextable")

install.packages("tibble")

install.packages("gplots")

# install klippy for copy-to-clipboard button in code chunks

install.packages("remotes")

remotes::install_github("rlesur/klippy")In a next step, we load the packages.

# load packages

library(cluster)

library(factoextra)

library(seriation)

library(pvclust)

library(ape)

library(vcd)

library(exact2x2)

library(flextable)

library(tibble)

library(gplots)

# activate klippy for copy-to-clipboard button

klippy::klippy()Once you have installed R and RStudio and initiated the session by executing the code shown above, you are good to go.

1 Cluster Analysis

The most common method in linguistics that is sued to detect groups in data are cluster analyses. Cluster analyses are common in linguistics because they not only detect commonalities based on the frequency or occurrence of features but they also allow to visualize when splits between groups have occurred and are thus the method of choice in historical linguistics to determine and show genealogical relationships.

Underlying Concepts

The next section focuses on the basic idea that underlies all cluster analyses. WE will have a look at some very basic examples to highlight and discuss the principles that cluster analyses rely on.

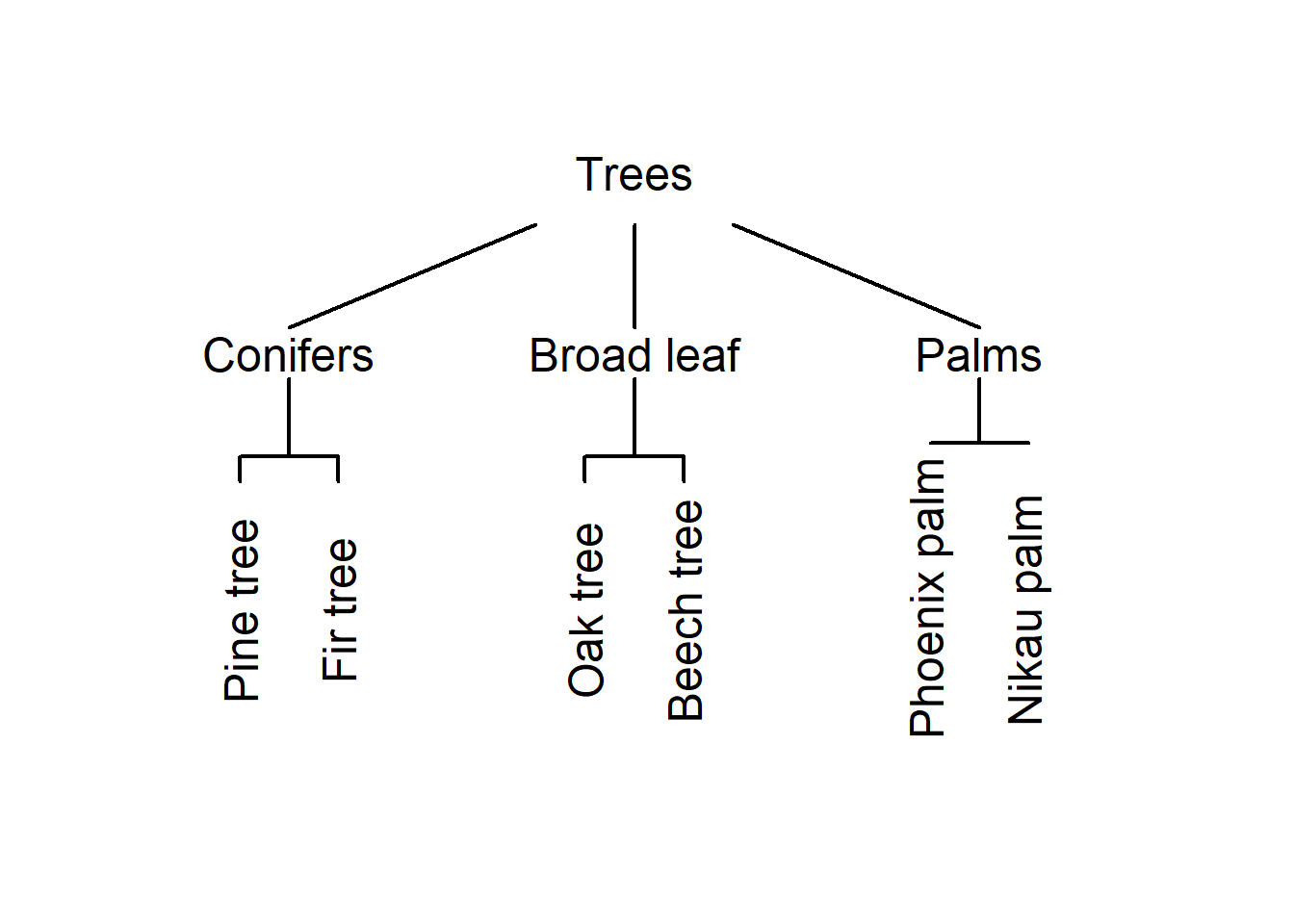

The underlying idea of cluster analysis is very simple and rather intuitive as we ourselves perform cluster analyses every day in our lives. This is so because we group things together under certain labels and into concepts. The first example used to show this, deals with types of trees and how we group these types of trees based on their outward appearance.

Imagine you see six trees representing different types of trees: a pine tree, a fir tree, an oak tree, a beech tree, a phoenix palm tree, and a nikau palm tree. Now, you were asked to group these trees according to similarity. Have a look at the plot below and see whether you would have come up with a similar type of grouping.

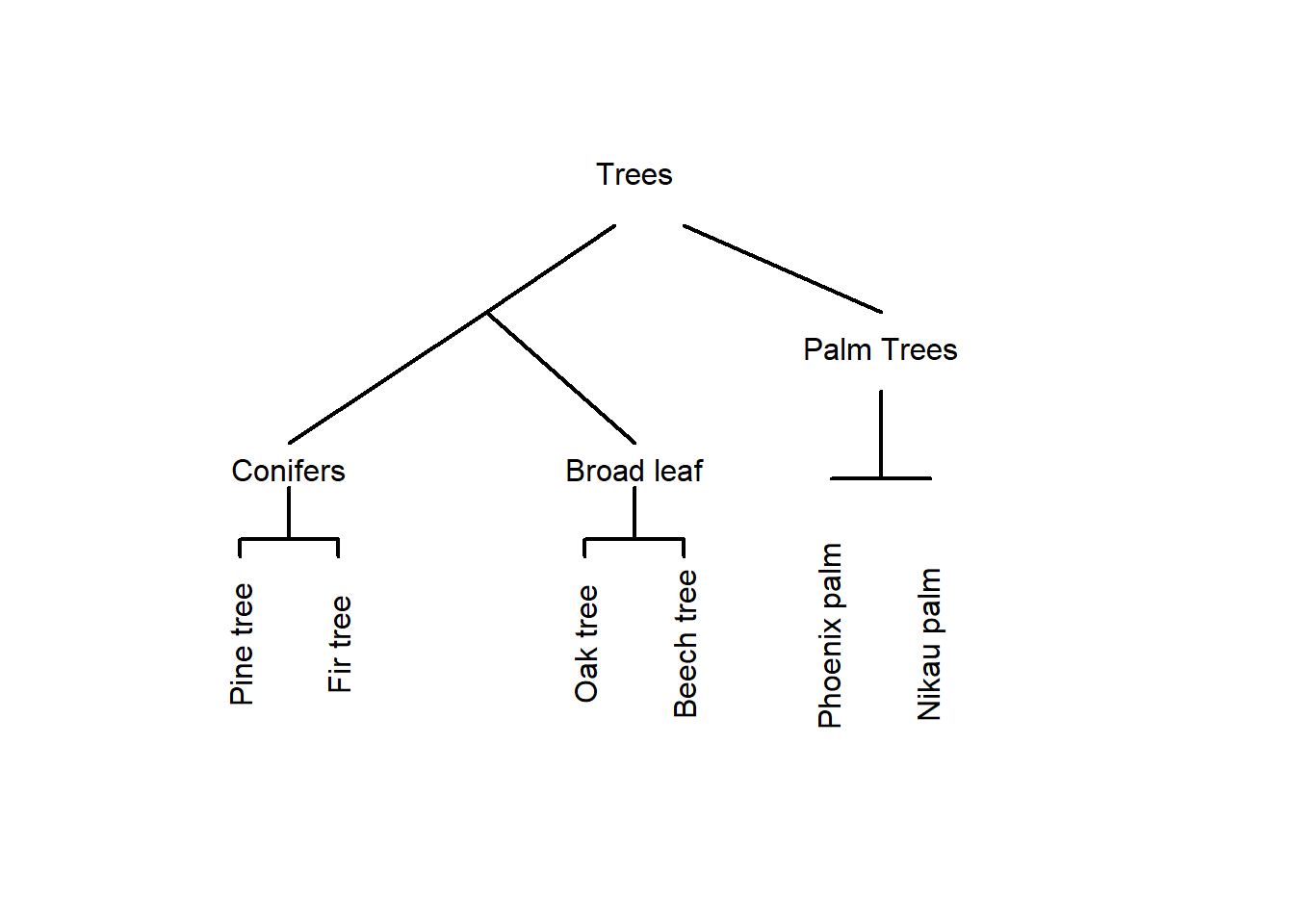

An alternative way to group the trees would be the following.

In this display, conifers and broad-leaf trees are grouped together because there are more similar to each other compared to palm trees. This poses the question of what is meant by similarity. Consider the display below.

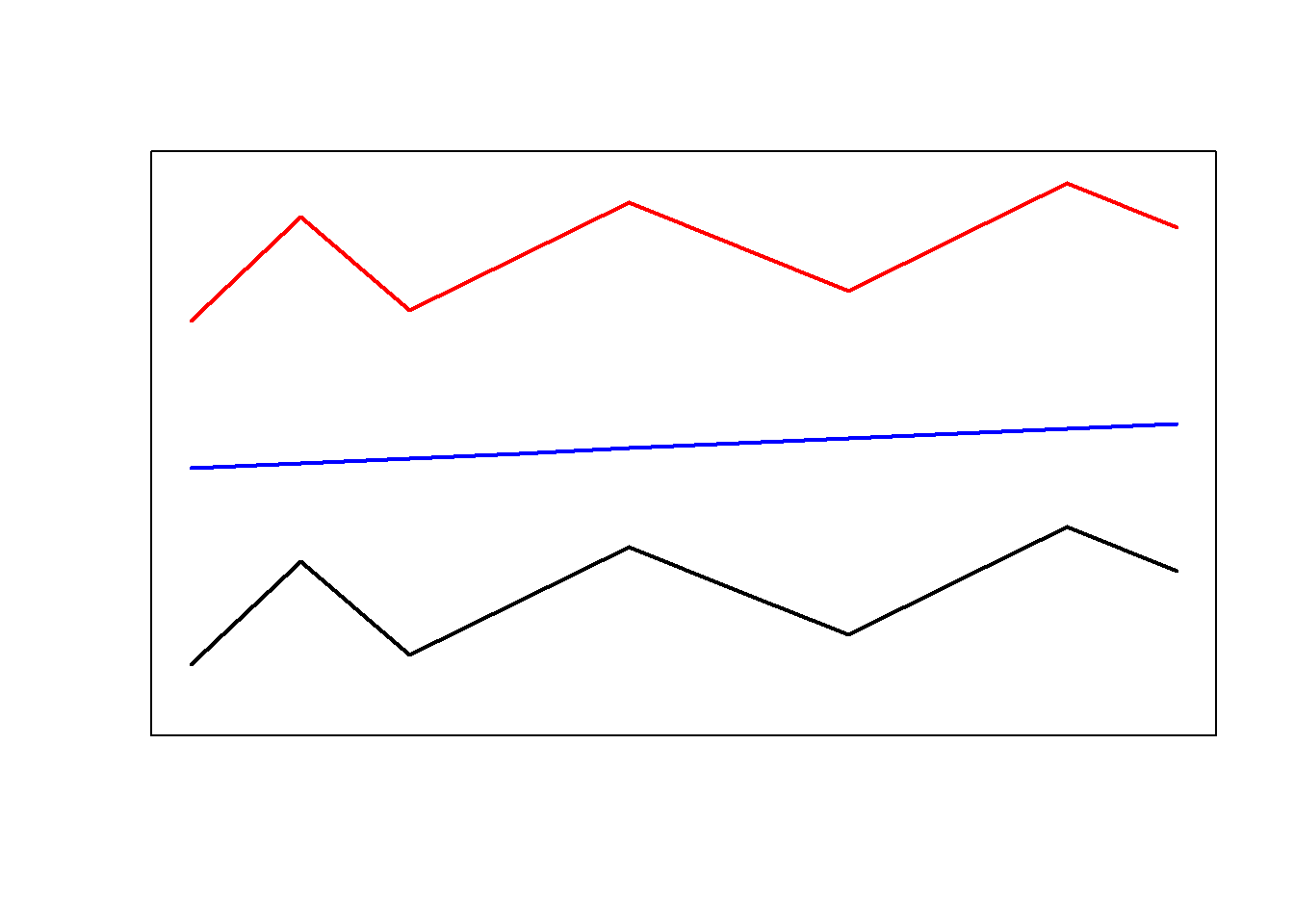

Are the red and the blue line more similar because they have the same shape or are the red and the black line more similar because they are closer together? There is no single correct answer here. Rather the plot intends to raise awareness about the fact that how cluster analyses group data depends on how similarity is defined in the respective algorithm.

Let’s consider another example to better understand how cluster analyses determine which data points should be merged when. Imagine you have five students and want to group them together based on their overall performance in school. The data that you rely on are their grades in math, music, and biology (with 1 being the best grade and 6 being the worst).

Student | Math | Music | Biology |

|---|---|---|---|

StudentA | 2 | 3 | 2 |

StudentB | 1 | 3 | 2 |

StudentC | 1 | 2 | 1 |

StudentD | 2 | 4 | 4 |

StudentE | 3 | 4 | 3 |

The first step in determining the similarity among students is to create a distance matrix.

diststudents <- dist(students, method = "manhattan") # create a distance matrixThe distance matrix below shows that Student A and Student B only differ by one grade. Student B and Student C differ by 2 grades. Student A and Student C differ by 3 grades and so on.

Student | StudentA | StudentB | StudentC | StudentD |

|---|---|---|---|---|

StudentB | 1 | |||

StudentC | 3 | 2 | ||

StudentD | 3 | 4 | 6 | |

StudentE | 3 | 4 | 6 | 2 |

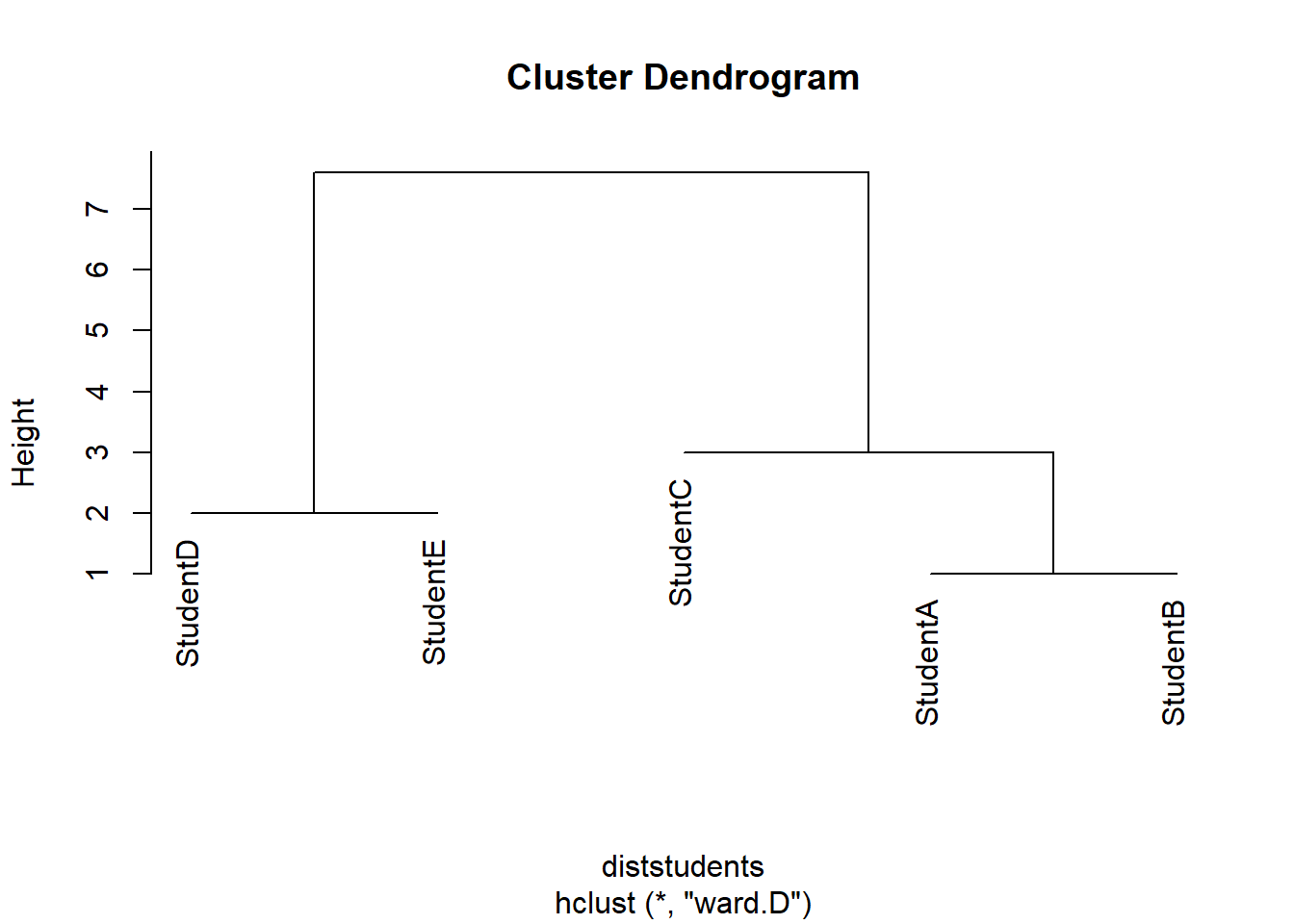

Based on this distance matrix, we can now implement a cluster analysis in R.

Cluster Analysis on Numeric Data

To create a simple cluster object in R, we use the

hclust function from the cluster package. The

resulting object is then plotted to create a dendrogram which shows how

students have been amalgamated (combined) by the clustering algorithm

(which, in the present case, is called ward.D).

# create hierarchical cluster object with ward.D as linkage method

clusterstudents <- hclust(diststudents, method="ward.D")

# plot result as dendrogram

plot(clusterstudents, hang = 0)

Let us have a look at how the clustering algorithm has amalgamated the students. The amalgamation process takes the distance matrix from above as a starting point and, in a first step, has merged Student A and Student B (because they were the most similar students in the data based on the distance matrix). After collapsing Student A and Student B, the resulting distance matrix looks like the distance matrix below (notice that Student A and Student B now form a cluster that is represented by the means of the grades of the two students).

students2 <- matrix(c(1.5, 3, 2, 1, 2, 1, 2, 4, 4, 3, 4, 3),

nrow = 4, byrow = T)

students2 <- as.data.frame(students2)

rownames(students2) <- c("Cluster1", "StudentC", "StudentD", "StudentE")

diststudents2 <- dist(students2, method = "manhattan")Student | Cluster 1 | Student C | Student D |

|---|---|---|---|

Student C | 2.5 | ||

Student D | 3.5 | 6.0 | |

Student E | 3.5 | 6.0 | 2.0 |

The next lowest distance now is 2.0 between Student D and Student E which means that these two students are merged next. The resulting distance matrix is shown below.

students3 <- matrix(c(1.5,3,2,1,2,1,2.5,4,3.5),

nrow = 3, byrow = T)

students3 <- as.data.frame(students3)

rownames(students3) <- c("Cluster1", "StudentC", "Cluster2")

diststudents3 <- dist(students3,

method = "manhattan")Student | Cluster 1 | Student C |

|---|---|---|

Student C | 2.5 | |

Cluster 2 | 3.5 | 6.0 |

Now, the lowest distance value occurs between Cluster 1 and Student C. Thus, Student C and Cluster 1 are merged. In the final step, the Cluster 2 is merged with the new cluster encompassing Student C and Cluster 1. This amalgamation process can then be displayed visually as a dendrogram (see above).

How and which elements are merged depends on the what is understood as distance. Since “distance” is such an important concept in cluster analyses, we will briefly discuss this notion to understand why there are so many different types of clustering algorithms and this cluster analyses.

Distance and Similarity Measures

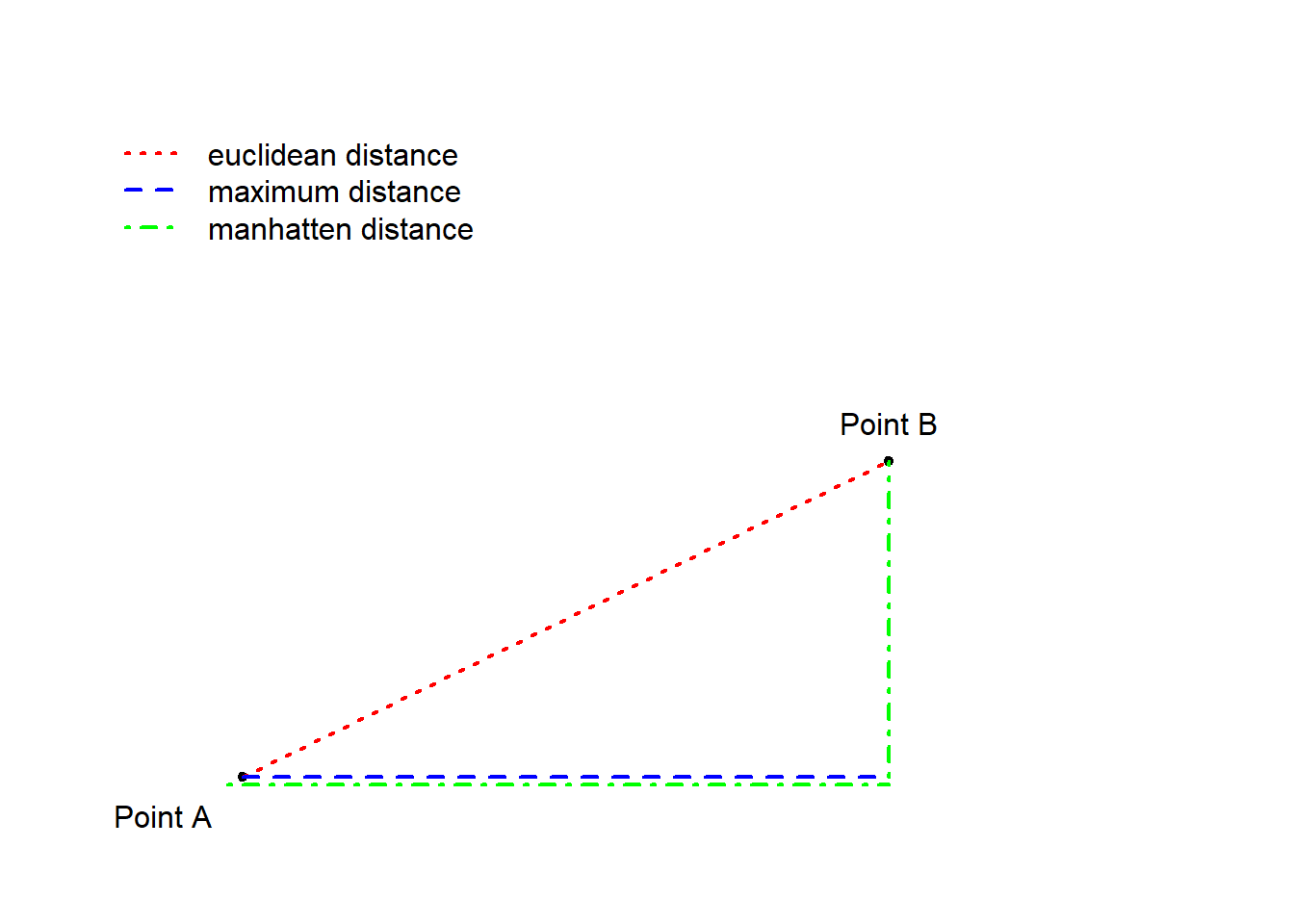

To understand how a cluster analysis determines to which cluster a given data point belongs, we need to understand what different distance measures represent. Have a look at the Figure below which visually represents three different ways to conceptualize distance.

The Figure above depicts three ways to measure distance: the

euclidean distance represents the distance between points

as the hypotenuse of the x- and y-axis distances while the “maximum

distance” represents distance as the longer distance of either the

distance on the x- or the y-axis. The manhatten distance

(or block distance) is the sum of the distances on the x- and the

y-axis.

We will now turn to another example in order to delve a little deeper into how clustering algorithms work. In this example, we will find cluster of varieties of English based on the relative frequency of selected non-standard features (such as the relative frequencies of cleft constructions and tag questions). As a first step, we generate some fictional data set for this analysis.

# generate data

IrishEnglish <- round(sqrt((rnorm(10, 9.5, .5))^2), 3)

ScottishEnglish <- round(sqrt((rnorm(10, 9.3, .4))^2), 3)

BritishEnglish <- round(sqrt((rnorm(10, 6.4, .7))^2), 3)

AustralianEnglish <- round(sqrt((rnorm(10, 6.6, .5))^2), 3)

NewZealandEnglish <- round(sqrt((rnorm(10, 6.5, .4))^2), 3)

AmericanEnglish <- round(sqrt((rnorm(10, 4.6, .8))^2), 3)

CanadianEnglish <- round(sqrt((rnorm(10, 4.5, .7))^2), 3)

JamaicanEnglish <- round(sqrt((rnorm(10, 1.4, .2))^2), 3)

PhillipineEnglish <- round(sqrt((rnorm(10, 1.5, .4))^2), 3)

IndianEnglish <- round(sqrt((rnorm(10, 1.3, .5))^2), 3)

clus <- data.frame(IrishEnglish, ScottishEnglish, BritishEnglish,

AustralianEnglish, NewZealandEnglish, AmericanEnglish,

CanadianEnglish, JamaicanEnglish, PhillipineEnglish, IndianEnglish)

# add row names

rownames(clus) <- c("nae_neg", "like", "clefts", "tags", "youse", "soitwas",

"dt", "nsr", "invartag", "wh_cleft")Feature | IrishEnglish | ScottishEnglish | BritishEnglish | AustralianEnglish | NewZealandEnglish | AmericanEnglish | CanadianEnglish | JamaicanEnglish | PhillipineEnglish | IndianEnglish |

|---|---|---|---|---|---|---|---|---|---|---|

nae_neg | 8.423 | 9.280 | 6.419 | 6.898 | 6.234 | 3.863 | 5.114 | 1.305 | 1.541 | 1.964 |

like | 9.337 | 10.099 | 8.345 | 7.124 | 6.740 | 4.714 | 4.712 | 1.579 | 1.286 | 0.685 |

clefts | 8.332 | 9.428 | 5.952 | 7.440 | 6.443 | 4.566 | 5.109 | 1.198 | 1.131 | 0.901 |

tags | 9.674 | 9.388 | 6.962 | 6.455 | 7.490 | 4.612 | 4.304 | 1.142 | 1.783 | 1.303 |

youse | 9.548 | 8.358 | 6.220 | 6.096 | 6.448 | 4.092 | 5.200 | 1.432 | 1.416 | 0.792 |

soitwas | 9.047 | 8.882 | 6.053 | 6.214 | 6.752 | 5.859 | 5.931 | 1.887 | 1.137 | 1.319 |

dt | 9.462 | 8.976 | 7.104 | 6.367 | 6.725 | 4.351 | 5.185 | 1.022 | 1.417 | 0.794 |

nsr | 9.679 | 9.325 | 5.627 | 5.780 | 6.273 | 5.458 | 4.870 | 1.512 | 1.820 | 0.042 |

invartag | 10.276 | 8.890 | 5.901 | 6.637 | 5.946 | 5.149 | 2.879 | 1.404 | 1.748 | 0.686 |

wh_cleft | 9.505 | 8.844 | 5.729 | 6.309 | 6.526 | 5.362 | 4.032 | 1.459 | 1.654 | 1.820 |

As a next step, we create a cluster object based on the data we have just generated.

# clean data

clusm <- as.matrix(clus)

clust <- t(clusm) # transpose data

clust <- na.omit(clust) # remove missing values

clusts <- scale(clust) # standardize variables

clusts <- as.matrix(clusts) # convert into matrixVariety | nae_neg | like | clefts | tags | youse | soitwas | dt | nsr | invartag | wh_cleft |

|---|---|---|---|---|---|---|---|---|---|---|

IrishEnglish | 8.423 | 9.337 | 8.332 | 9.674 | 9.548 | 9.047 | 9.462 | 9.679 | 10.276 | 9.505 |

ScottishEnglish | 9.280 | 10.099 | 9.428 | 9.388 | 8.358 | 8.882 | 8.976 | 9.325 | 8.890 | 8.844 |

BritishEnglish | 6.419 | 8.345 | 5.952 | 6.962 | 6.220 | 6.053 | 7.104 | 5.627 | 5.901 | 5.729 |

AustralianEnglish | 6.898 | 7.124 | 7.440 | 6.455 | 6.096 | 6.214 | 6.367 | 5.780 | 6.637 | 6.309 |

NewZealandEnglish | 6.234 | 6.740 | 6.443 | 7.490 | 6.448 | 6.752 | 6.725 | 6.273 | 5.946 | 6.526 |

AmericanEnglish | 3.863 | 4.714 | 4.566 | 4.612 | 4.092 | 5.859 | 4.351 | 5.458 | 5.149 | 5.362 |

CanadianEnglish | 5.114 | 4.712 | 5.109 | 4.304 | 5.200 | 5.931 | 5.185 | 4.870 | 2.879 | 4.032 |

JamaicanEnglish | 1.305 | 1.579 | 1.198 | 1.142 | 1.432 | 1.887 | 1.022 | 1.512 | 1.404 | 1.459 |

PhillipineEnglish | 1.541 | 1.286 | 1.131 | 1.783 | 1.416 | 1.137 | 1.417 | 1.820 | 1.748 | 1.654 |

IndianEnglish | 1.964 | 0.685 | 0.901 | 1.303 | 0.792 | 1.319 | 0.794 | 0.042 | 0.686 | 1.820 |

We assess if data is “clusterable” by testing if the data contains non-randomness. To this end, we calculate the Hopkins statistic which indicates how similar the data is to a random distribution.

A Hopkins value of 0.5 indicates that the data is random and that there are no inherent clusters.

If the Hopkins statistic is close to 1, then the data is highly clusterable.

Values of 0 indicate that the data is uniform (Aggarwal 2015, 158).

The n in the get_clust_tendency functions

represents the maximum number of clusters to be tested which should be

number of predictors in the data.

# apply get_clust_tendency to cluster object

clusttendency <- get_clust_tendency(clusts,

# define number of points from sample space

n = 9,

gradient = list(

# define color for low values

low = "steelblue",

# define color for high values

high = "white"))

clusttendency[1]## $hopkins_stat

## [1] 0.7550177As the Hopkins value is substantively higher than .5 (randomness) and closer to 1 (highly clusterable) than to .5, thus indicating that there is sufficient structure in the data to warrant a cluster analysis. As such, we can assume that there are actual clusters in the data and continue by generating a distance matrix using euclidean distances.

clustd <- dist(clusts, # create distance matrix

method = "euclidean") # use euclidean (!) distanceVariety | IrishEnglish | ScottishEnglish | BritishEnglish | AustralianEnglish | NewZealandEnglish | AmericanEnglish | CanadianEnglish | JamaicanEnglish | PhillipineEnglish | IndianEnglish |

|---|---|---|---|---|---|---|---|---|---|---|

IrishEnglish | 0.00 | 0.84 | 3.15 | 3.01 | 2.91 | 4.67 | 4.83 | 8.14 | 8.04 | 8.51 |

ScottishEnglish | 0.84 | 0.00 | 2.88 | 2.70 | 2.69 | 4.50 | 4.57 | 7.95 | 7.85 | 8.30 |

BritishEnglish | 3.15 | 2.88 | 0.00 | 0.75 | 0.69 | 2.00 | 1.96 | 5.18 | 5.09 | 5.54 |

AustralianEnglish | 3.01 | 2.70 | 0.75 | 0.00 | 0.64 | 2.00 | 2.05 | 5.29 | 5.21 | 5.63 |

NewZealandEnglish | 2.91 | 2.69 | 0.69 | 0.64 | 0.00 | 1.94 | 2.01 | 5.31 | 5.22 | 5.66 |

AmericanEnglish | 4.67 | 4.50 | 2.00 | 2.00 | 1.94 | 0.00 | 1.09 | 3.53 | 3.47 | 3.94 |

CanadianEnglish | 4.83 | 4.57 | 1.96 | 2.05 | 2.01 | 1.09 | 0.00 | 3.54 | 3.51 | 3.91 |

JamaicanEnglish | 8.14 | 7.95 | 5.18 | 5.29 | 5.31 | 3.53 | 3.54 | 0.00 | 0.40 | 0.71 |

PhillipineEnglish | 8.04 | 7.85 | 5.09 | 5.21 | 5.22 | 3.47 | 3.51 | 0.40 | 0.00 | 0.77 |

IndianEnglish | 8.51 | 8.30 | 5.54 | 5.63 | 5.66 | 3.94 | 3.91 | 0.71 | 0.77 | 0.00 |

Below are other methods to create distance matrices with some comments on when using which metric is appropriate.

# create distance matrix (euclidean method: not good when dealing with many dimensions)

clustd <- dist(clusts, method = "euclidean")

# create distance matrix (maximum method: here the difference between points dominates)

clustd_maximum <- round(dist(clusts, method = "maximum"), 2)

# create distance matrix (manhattan method: most popular choice)

clustd_manhatten <- round(dist(clusts, method = "manhattan"), 2)

# create distance matrix (canberra method: for count data only - focuses on small differences and neglects larger differences)

clustd_canberra <- round(dist(clusts, method = "canberra"), 2)

# create distance matrix (binary method: for binary data only!)

clustd_binary <- round(dist(clusts, method = "binary"), 2)

# create distance matrix (minkowski method: is not a true distance measure)

clustd_minkowski <- round(dist(clusts, method = "minkowski"), 2)

# distance method for words: daisy (other possible distances are "manhattan" and "gower")

clustd_daisy <- round(daisy(clusts, metric = "euclidean"), 2) If you call the individual distance matrices, you will see that

depending on which distance measure is used, the distance matrices

differ dramatically! Have a look at the distance matrix created using

the manhatten metric and compare it to the distance matrix

created using the euclidian metric (see above).

clustd_maximumVariety | IrishEnglish | ScottishEnglish | BritishEnglish | AustralianEnglish | NewZealandEnglish | AmericanEnglish | CanadianEnglish | JamaicanEnglish | PhillipineEnglish | IndianEnglish |

|---|---|---|---|---|---|---|---|---|---|---|

IrishEnglish | 0.00 | 0.43 | 1.35 | 1.23 | 1.34 | 1.82 | 2.29 | 2.80 | 2.73 | 3.04 |

ScottishEnglish | 0.43 | 0.00 | 1.17 | 1.12 | 1.07 | 1.90 | 1.86 | 2.80 | 2.71 | 2.93 |

BritishEnglish | 1.35 | 1.17 | 0.00 | 0.48 | 0.47 | 1.06 | 1.06 | 1.97 | 2.06 | 2.24 |

AustralianEnglish | 1.23 | 1.12 | 0.48 | 0.00 | 0.32 | 1.06 | 1.16 | 2.02 | 2.04 | 2.11 |

NewZealandEnglish | 1.34 | 1.07 | 0.47 | 0.32 | 0.00 | 0.90 | 1.00 | 1.99 | 1.93 | 1.97 |

AmericanEnglish | 1.82 | 1.90 | 1.06 | 1.06 | 0.90 | 0.00 | 0.70 | 1.37 | 1.63 | 1.71 |

CanadianEnglish | 2.29 | 1.86 | 1.06 | 1.16 | 1.00 | 0.70 | 0.00 | 1.39 | 1.65 | 1.59 |

JamaicanEnglish | 2.80 | 2.80 | 1.97 | 2.02 | 1.99 | 1.37 | 1.39 | 0.00 | 0.26 | 0.46 |

PhillipineEnglish | 2.73 | 2.71 | 2.06 | 2.04 | 1.93 | 1.63 | 1.65 | 0.26 | 0.00 | 0.56 |

IndianEnglish | 3.04 | 2.93 | 2.24 | 2.11 | 1.97 | 1.71 | 1.59 | 0.46 | 0.56 | 0.00 |

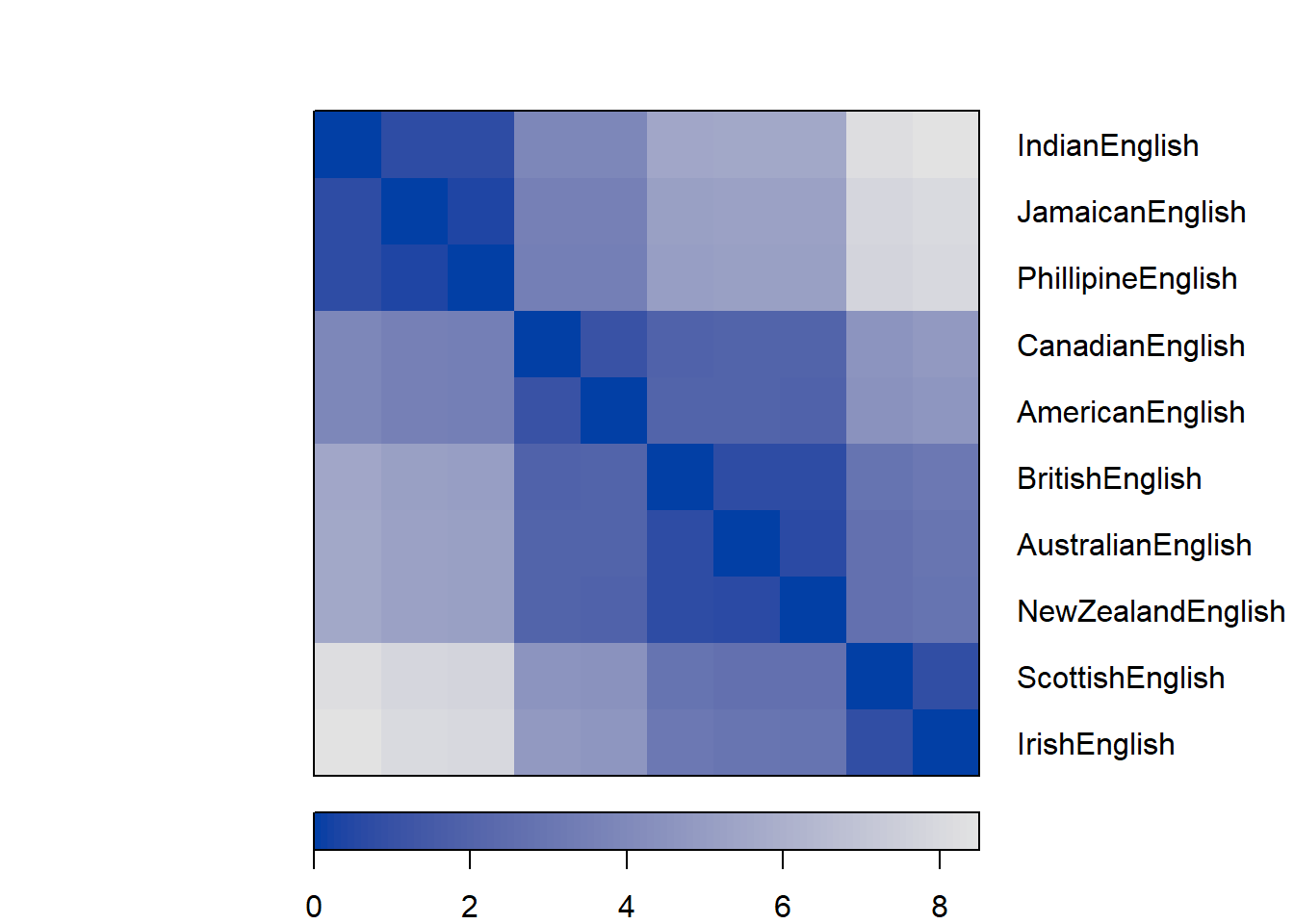

Next, we create a distance plot using the distplot

function. If the distance plot shows different regions (non-random,

non-uniform gray areas) then clustering the data is permittable as the

data contains actual structures.

# create distance plot

dissplot(clustd)

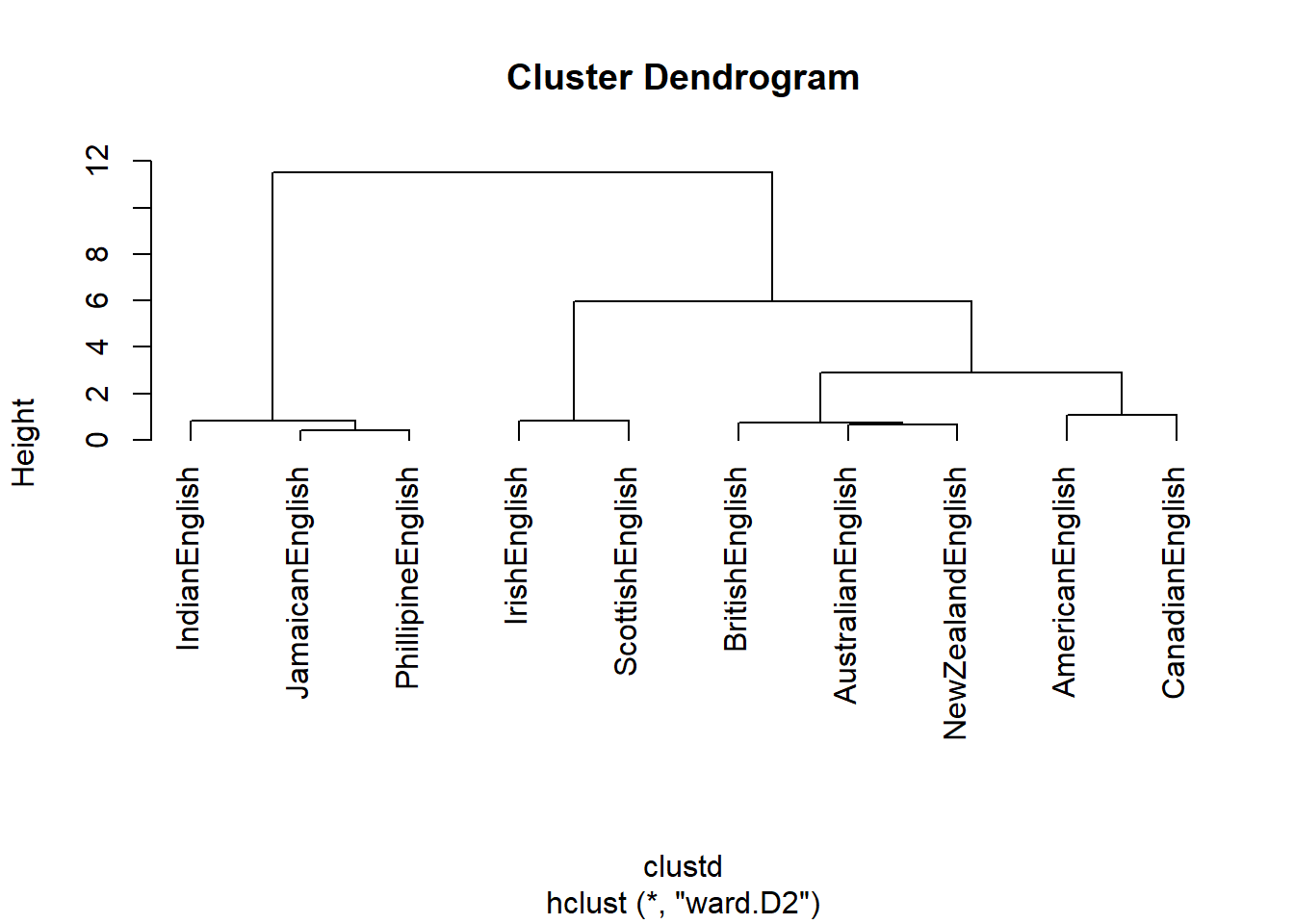

The most common method for clustering is called ward.D

or ward.D2. Both of these linkage functions seek to

minimize variance. This means that they cluster in a way that the amount

of variance is at a minimum (comparable to the regression line in an

ordinary least squares (OLS) design).

# create cluster object

cd <- hclust(clustd, method="ward.D2")

# display dendrogram

plot(cd, hang = -1)

We will briefly go over some other, alternative linkage methods. Which linkage method is and should be used depends on various factors, for example, the type of variables (nominal versus numeric) or whether the focus should be placed on commonalities or differences.

# single linkage: cluster with nearest data point

cd_single <- hclust(clustd, method="single")

# create cluster object (ward.D linkage)

cd_wardd <- hclust(clustd, method="ward.D")

# create cluster object (ward.D2 linkage):

# cluster in a way to achieve minimum variance

cd_wardd2 <- hclust(clustd, method="ward.D2")

# average linkage: cluster with closest mean

cd_average <- hclust(clustd, method="average")

# mcquitty linkage

cd_mcquitty <- hclust(clustd, method="mcquitty")

# median linkage: cluster with closest median

cd_median <- hclust(clustd, method="median")

# centroid linkage: cluster with closest prototypical point of target cluster

cd_centroid <- hclust(clustd, method="centroid")

# complete linkage: cluster with nearest/furthest data point of target cluster

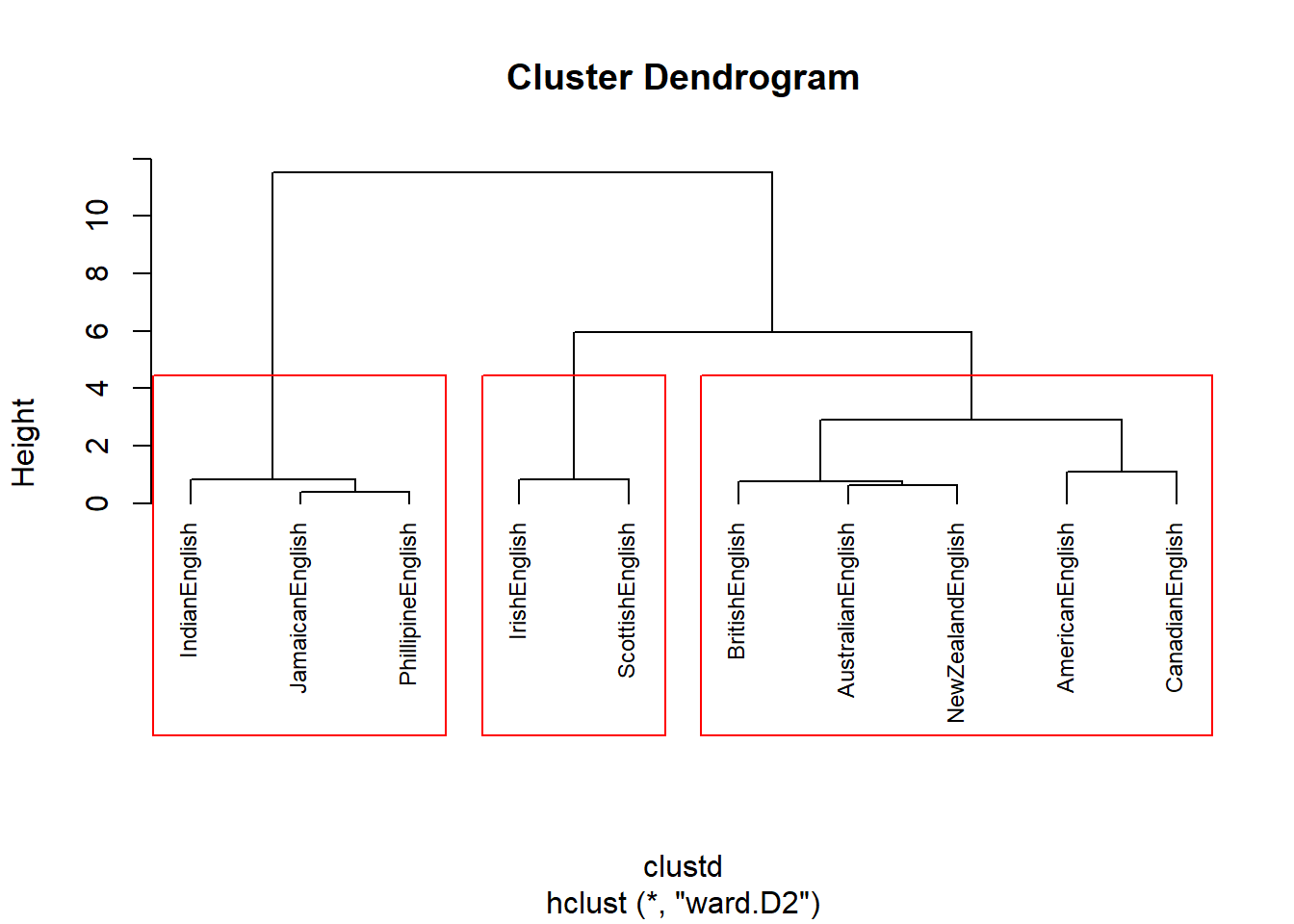

cd_complete <- hclust(clustd, method="complete") Now, we determine the optimal number of clusters based on silhouette widths which shows the ratio of internal similarity of clusters against the similarity between clusters. If the silhouette widths have values lower than .2 then this indicates that clustering is not appropriate (Levshina 2015, 311). The function below displays the silhouette width values of 2 to 8 clusters.

optclus <- sapply(2:8, function(x) summary(silhouette(cutree(cd, k = x), clustd))$avg.width)

optclus # inspect results## [1] 0.6361654 0.6765275 0.6770810 0.5845810 0.4428797 0.2852484 0.1120639optnclust <- which(optclus == max(optclus)) # determine optimal number of clusters

groups <- cutree(cd, k=optnclust) # cut tree into optimal number of clustersThe optimal number of clusters is the cluster solution with the highest silhouette width. We cut the tree into the optimal number of clusters and plot the result.

groups <- cutree(cd, k=optnclust) # cut tree into optimal clusters

plot(cd, hang = -1, cex = .75) # plot result as dendrogram

rect.hclust(cd, k=optnclust, border="red") # draw red borders around clusters

In a next step, we aim to determine which factors are particularly important for the clustering - this step is comparable to measuring the effect size in inferential designs.

# which factors are particularly important

celtic <- clusts[c(1,2),]

others <- clusts[-c(1,2),]

# calculate column means

celtic.cm <- colMeans(celtic)

others.cm <- colMeans(others)

# calculate difference between celtic and other englishes

diff <- celtic.cm - others.cm

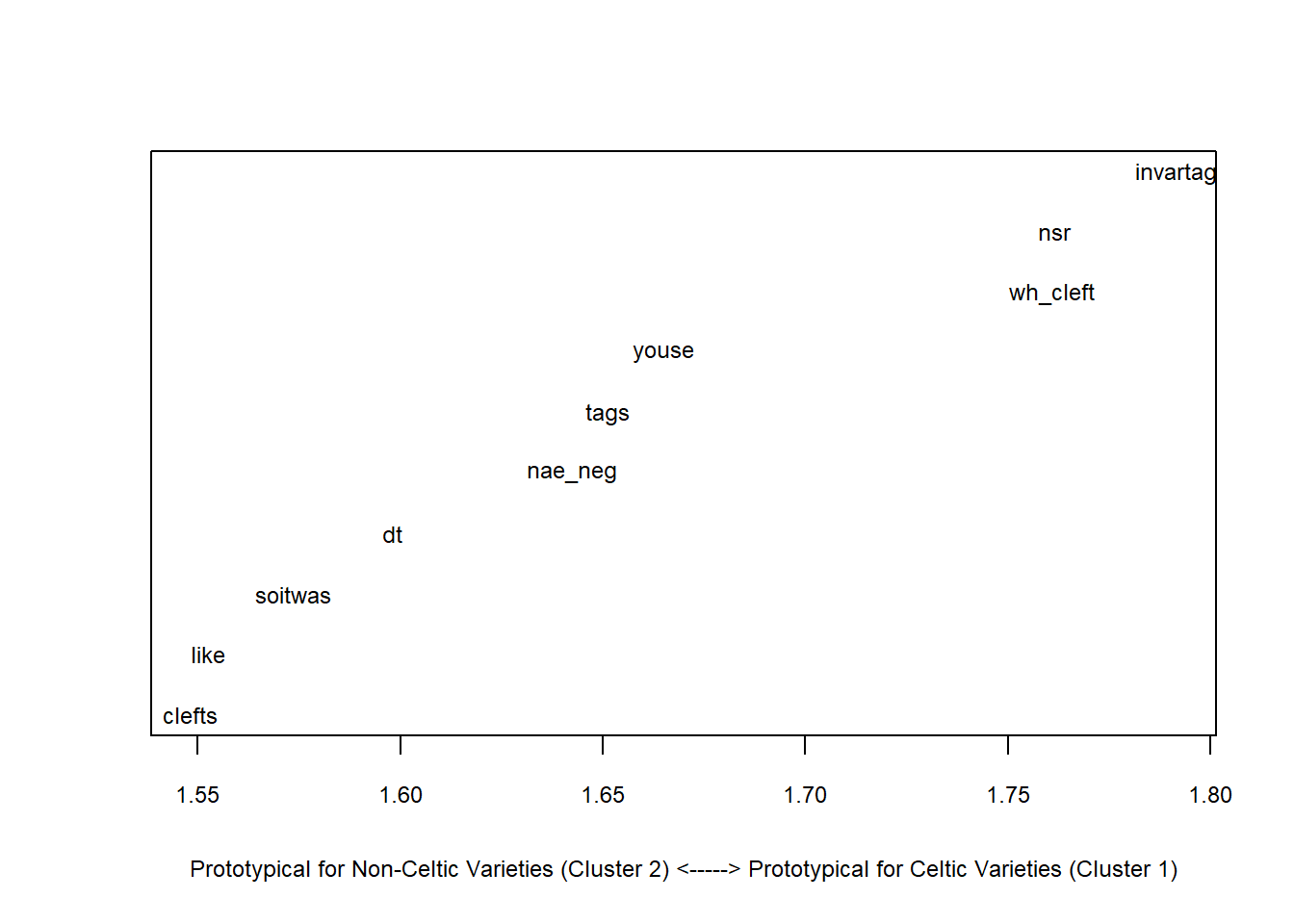

sort(diff, decreasing = F)## clefts like soitwas dt nae_neg tags youse wh_cleft

## 1.548272 1.552459 1.573656 1.598100 1.642429 1.651240 1.664917 1.760904

## nsr invartag

## 1.761451 1.791637plot(sort(diff), # y-values

1:length(diff), # x-values

type= "n", # plot type (empty)

cex.axis = .75, # axis font size

cex.lab = .75, # label font size

xlab ="Prototypical for Non-Celtic Varieties (Cluster 2) <-----> Prototypical for Celtic Varieties (Cluster 1)", # x-axis label

yaxt = "n", # no y-axis tick marks

ylab = "") # no y-axis label

text(sort(diff), 1:length(diff), names(sort(diff)), cex = .75) # plot text into plot

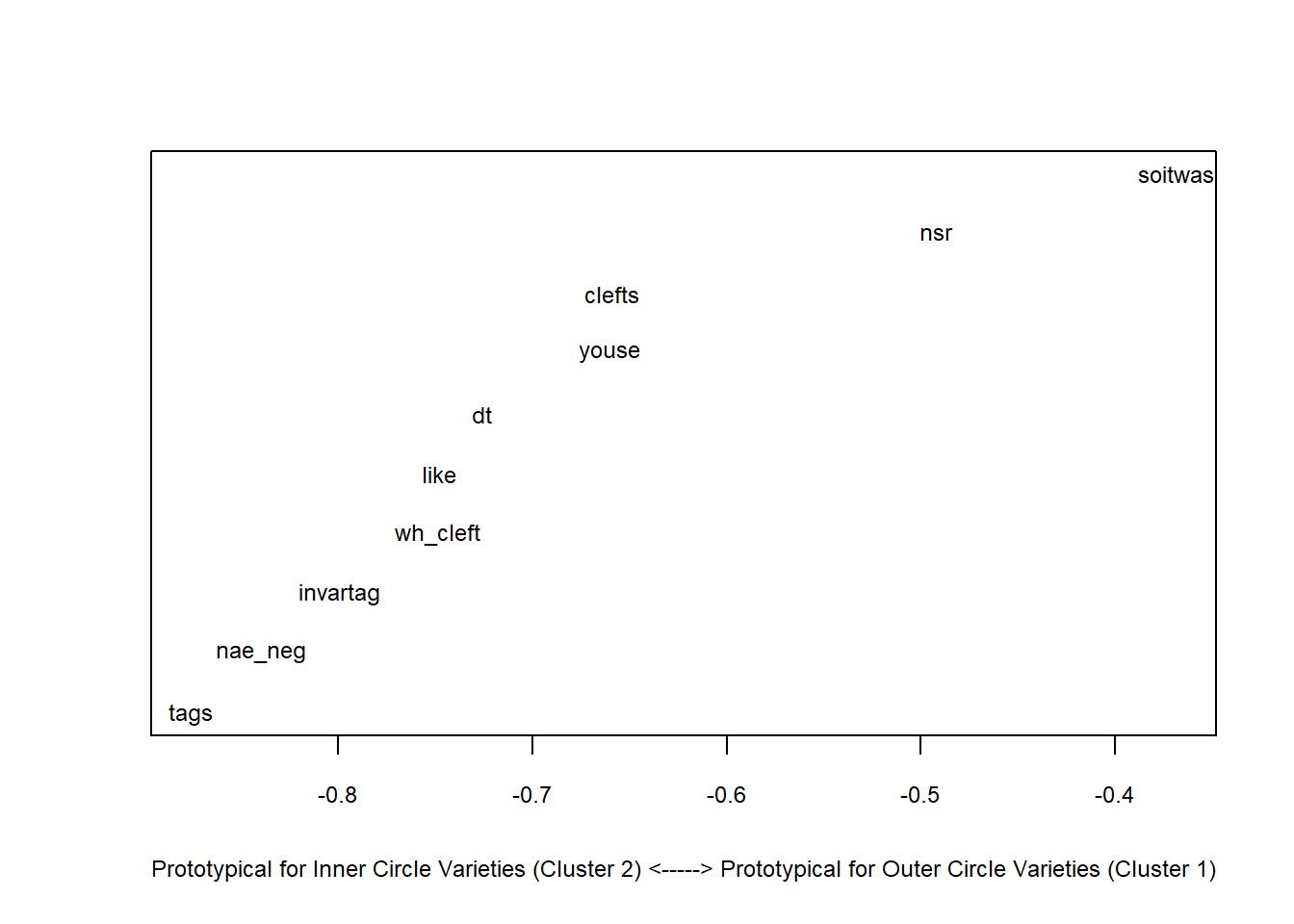

Outer <- clusts[c(6:8),] # data of outer circle varieties

Inner <- clusts[-c(6:8),] # data of inner circle varieties

Outer.cm <- colMeans(Outer) # column means for outer circle

Inner.cm <- colMeans(Inner) # column means for inner circle

diff <- Outer.cm - Inner.cm # difference between inner and outer circle

sort(diff, decreasing = F) # order difference between inner and outer circle## tags nae_neg invartag wh_cleft like dt youse

## -0.8759389 -0.8398876 -0.7991570 -0.7484110 -0.7478019 -0.7258523 -0.6602738

## clefts nsr soitwas

## -0.6586555 -0.4924847 -0.3684580plot( # start plot

sort(diff), # y-values

1:length(diff), # x-values

type= "n", # plot type (empty)

cex.axis = .75, # axis font size

cex.lab = .75, # label font size

xlab ="Prototypical for Inner Circle Varieties (Cluster 2) <-----> Prototypical for Outer Circle Varieties (Cluster 1)", # x-axis label

yaxt = "n", # no y-axis tick marks

ylab = "") # no y-axis label

text(sort(diff), 1:length(diff), names(sort(diff)), cex = .75) # plot text into plot

We see that discourse like is typical for other varieties and that the use of youse as 2nd person plural pronoun and invariant tags are typical for Celtic Englishes.

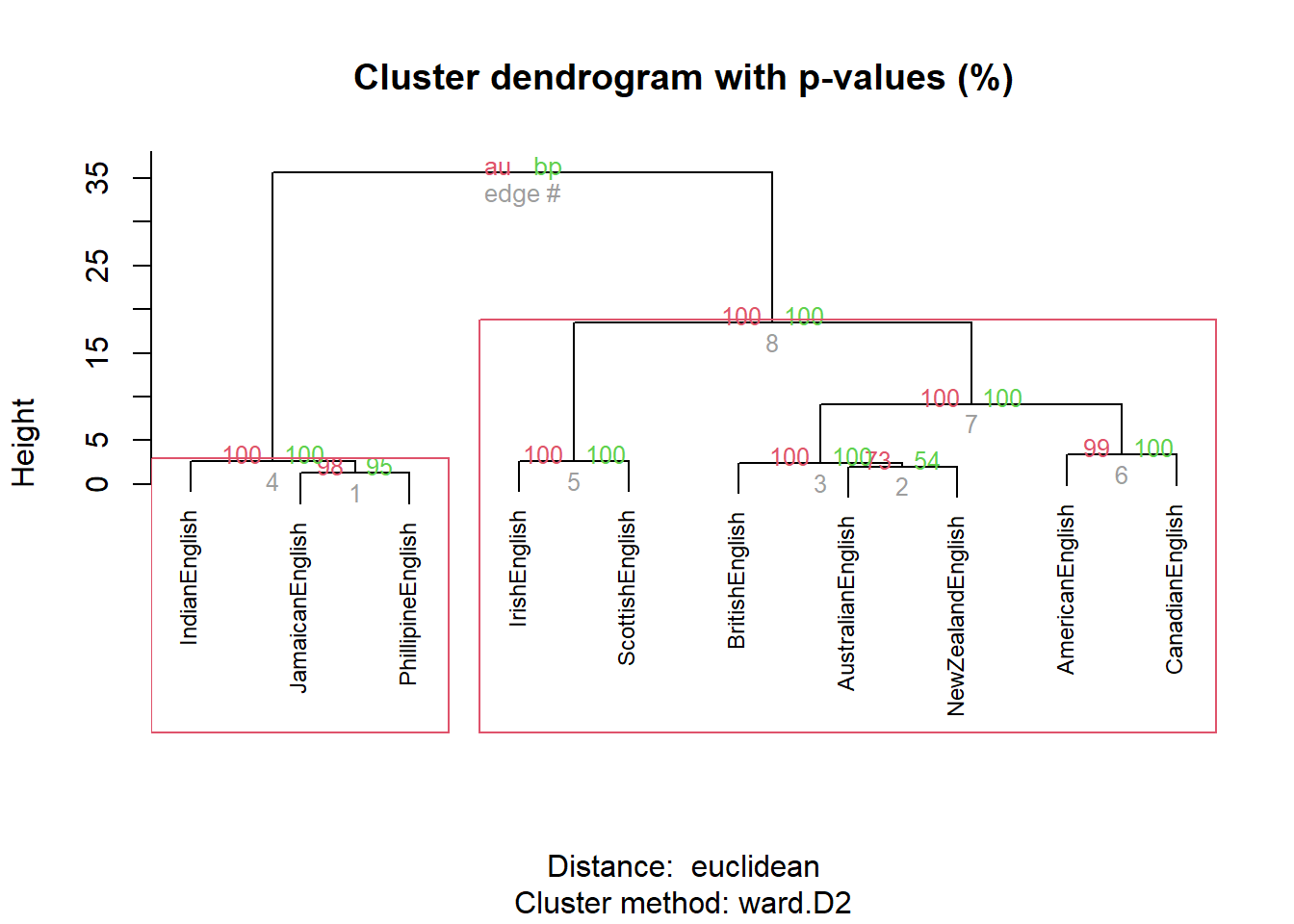

We will now test whether the cluster is justified by validating the cluster solution using bootstrapping.

res.pv <- pvclust(clus, # apply pvclust method to clus data

method.dist="euclidean", # use eucledian distance

method.hclust="ward.D2", # use ward.d2 linkage

nboot = 100) # use 100 bootstrap runs## Bootstrap (r = 0.5)... Done.

## Bootstrap (r = 0.6)... Done.

## Bootstrap (r = 0.7)... Done.

## Bootstrap (r = 0.8)... Done.

## Bootstrap (r = 0.9)... Done.

## Bootstrap (r = 1.0)... Done.

## Bootstrap (r = 1.1)... Done.

## Bootstrap (r = 1.2)... Done.

## Bootstrap (r = 1.3)... Done.

## Bootstrap (r = 1.4)... Done.The clustering provides approximately unbiased p-values and bootstrap probability value (see Levshina 2015, 316).

plot(res.pv, cex = .75)

pvrect(res.pv)

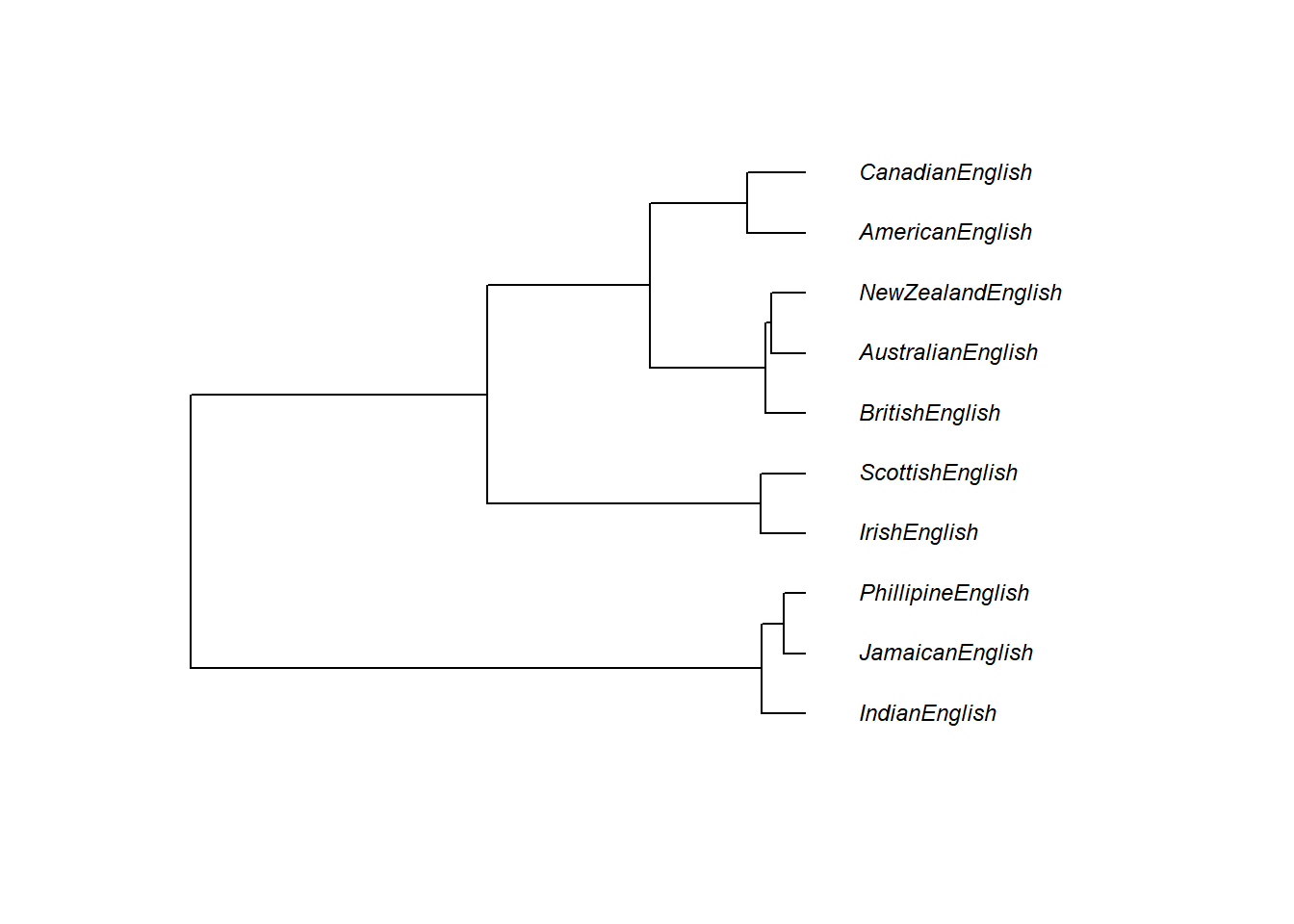

We can also use other packages to customize the dendrograms.

plot(as.phylo(cd), # plot cluster object

cex = 0.75, # .75 font size

label.offset = .5) # .5 label offset

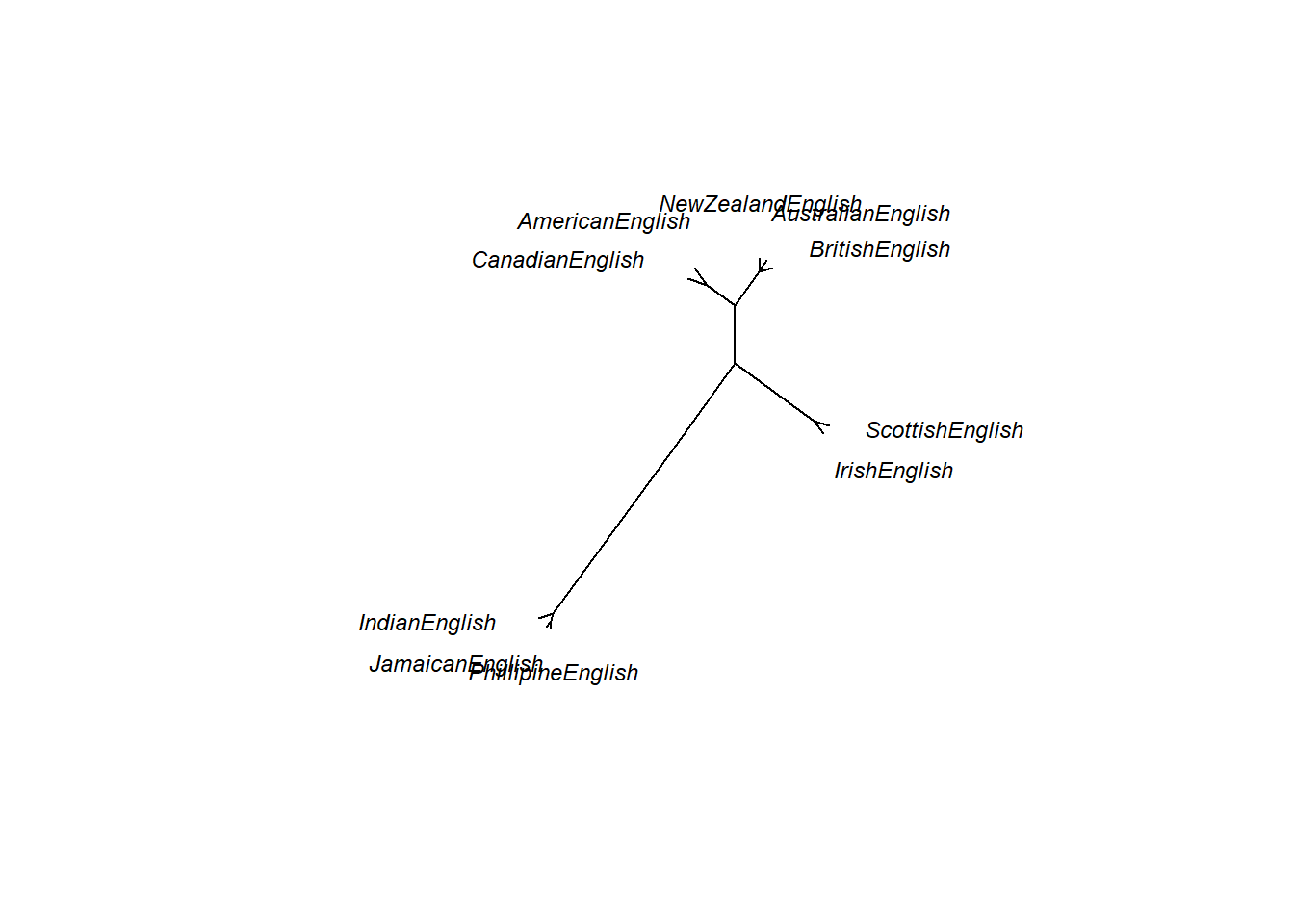

One useful customization is to display an unrooted rather than a rooted tree diagram.

# plot as unrooted tree

plot(as.phylo(cd), # plot cluster object

type = "unrooted", # plot as unrooted tree

cex = .75, # .75 font size

label.offset = 1) # .5 label offset

Cluster Analysis on Nominal Data

So far, all analyses were based on numeric data. However, especially when working with language data, the data is nominal or categorical rather than numeric. The following will thus show to implement a clustering method for nominal data.

In a first step, we will create a simple data set representing the presence and absence of features across varieties of English.

# generate data

IrishEnglish <- c(1,1,1,1,1,1,1,1,1,1)

ScottishEnglish <- c(1,1,1,1,1,1,1,1,1,1)

BritishEnglish <- c(0,1,1,1,0,0,1,0,1,1)

AustralianEnglish <- c(0,1,1,1,0,0,1,0,1,1)

NewZealandEnglish <- c(0,1,1,1,0,0,1,0,1,1)

AmericanEnglish <- c(0,1,1,1,0,0,0,0,1,0)

CanadianEnglish <- c(0,1,1,1,0,0,0,0,1,0)

JamaicanEnglish <- c(0,0,1,0,0,0,0,0,1,0)

PhillipineEnglish <- c(0,0,1,0,0,0,0,0,1,0)

IndianEnglish <- c(0,0,1,0,0,0,0,0,1,0)

clus <- data.frame(IrishEnglish, ScottishEnglish, BritishEnglish,

AustralianEnglish, NewZealandEnglish, AmericanEnglish,

CanadianEnglish, JamaicanEnglish, PhillipineEnglish, IndianEnglish)

# add row names

rownames(clus) <- c("nae_neg", "like", "clefts", "tags", "youse", "soitwas",

"dt", "nsr", "invartag", "wh_cleft")

# convert into factors

clus <- apply(clus, 1, function(x){

x <- as.factor(x) })Variety | nae_neg | like | clefts | tags | youse | soitwas | dt | nsr | invartag | wh_cleft |

|---|---|---|---|---|---|---|---|---|---|---|

IrishEnglish | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

ScottishEnglish | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

BritishEnglish | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 1 |

AustralianEnglish | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 1 |

NewZealandEnglish | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 1 |

AmericanEnglish | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |

CanadianEnglish | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |

JamaicanEnglish | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

PhillipineEnglish | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

IndianEnglish | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

Now that we have our data, we will create a distance matrix but in contrast to previous methods, we will use a different distance measure that takes into account that we are dealing with nominal (or binary) data.

# clean data

clusts <- as.matrix(clus)

# create distance matrix

clustd <- dist(clusts, method = "binary") # create a distance object with binary (!) distanceVariety | IrishEnglish | ScottishEnglish | BritishEnglish | AustralianEnglish | NewZealandEnglish | AmericanEnglish | CanadianEnglish | JamaicanEnglish | PhillipineEnglish | IndianEnglish |

|---|---|---|---|---|---|---|---|---|---|---|

IrishEnglish | 0.0 | 0.0 | 0.40 | 0.40 | 0.40 | 0.60 | 0.60 | 0.80 | 0.80 | 0.80 |

ScottishEnglish | 0.0 | 0.0 | 0.40 | 0.40 | 0.40 | 0.60 | 0.60 | 0.80 | 0.80 | 0.80 |

BritishEnglish | 0.4 | 0.4 | 0.00 | 0.00 | 0.00 | 0.33 | 0.33 | 0.67 | 0.67 | 0.67 |

AustralianEnglish | 0.4 | 0.4 | 0.00 | 0.00 | 0.00 | 0.33 | 0.33 | 0.67 | 0.67 | 0.67 |

NewZealandEnglish | 0.4 | 0.4 | 0.00 | 0.00 | 0.00 | 0.33 | 0.33 | 0.67 | 0.67 | 0.67 |

AmericanEnglish | 0.6 | 0.6 | 0.33 | 0.33 | 0.33 | 0.00 | 0.00 | 0.50 | 0.50 | 0.50 |

CanadianEnglish | 0.6 | 0.6 | 0.33 | 0.33 | 0.33 | 0.00 | 0.00 | 0.50 | 0.50 | 0.50 |

JamaicanEnglish | 0.8 | 0.8 | 0.67 | 0.67 | 0.67 | 0.50 | 0.50 | 0.00 | 0.00 | 0.00 |

PhillipineEnglish | 0.8 | 0.8 | 0.67 | 0.67 | 0.67 | 0.50 | 0.50 | 0.00 | 0.00 | 0.00 |

IndianEnglish | 0.8 | 0.8 | 0.67 | 0.67 | 0.67 | 0.50 | 0.50 | 0.00 | 0.00 | 0.00 |

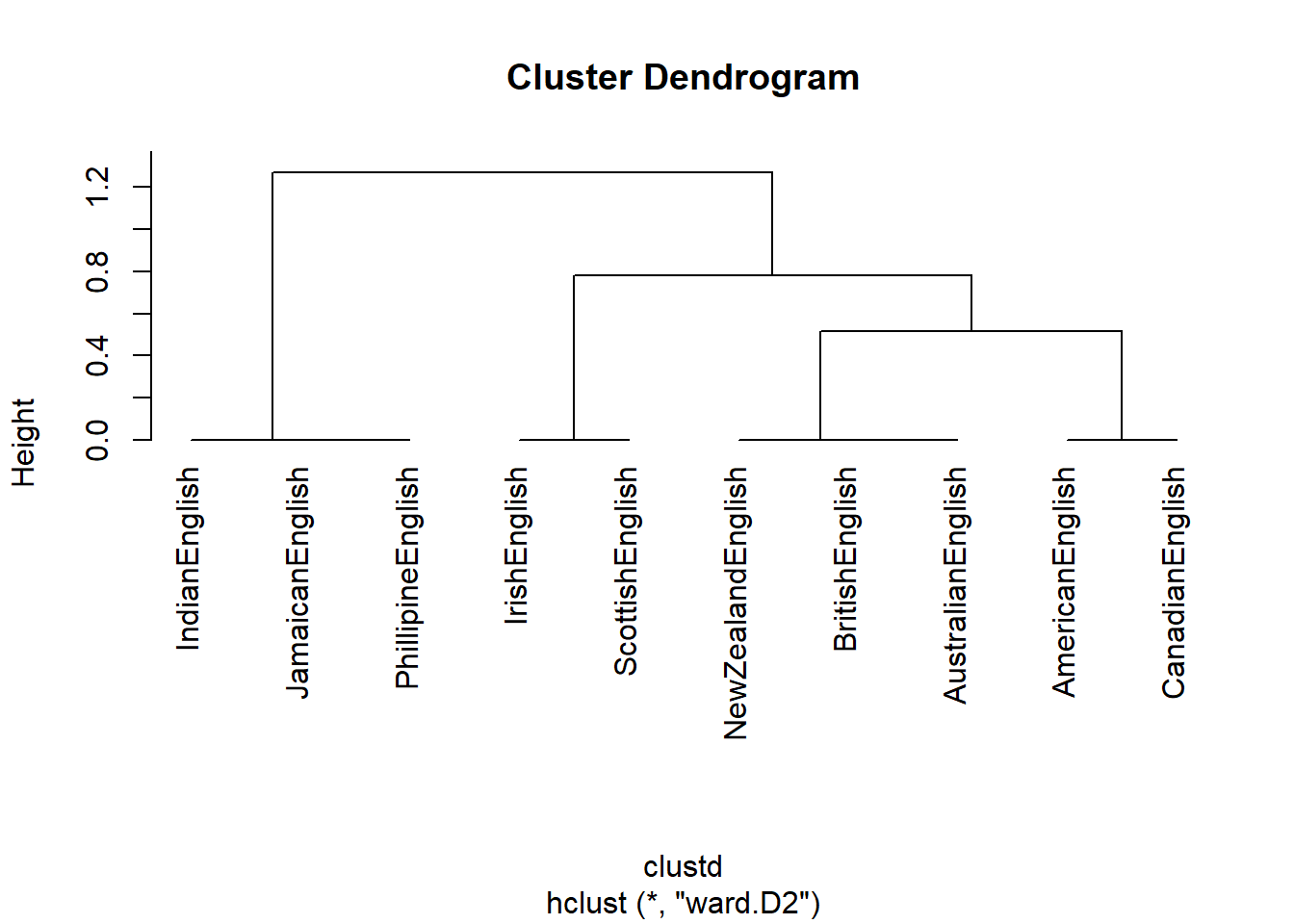

As before, we can now use hierarchical clustering to display the results as a dendrogram

# create cluster object (ward.D2 linkage) : cluster in a way to achieve minimum variance

cd <- hclust(clustd, method="ward.D2")

# plot result as dendrogram

plot(cd, hang = -1) # display dendogram

In a next step, we want to determine which features are particularly distinctive for one cluster (the “Celtic” cluster containing Irish and Scottish English).

# create factor with celtic varieties on one hand and other varieties on other

cluster <- as.factor(ifelse(as.character(rownames(clusts)) == "IrishEnglish", "1",

ifelse(as.character(rownames(clusts)) == "ScottishEnglish", "1", "0")))

# convert into data frame

clsts.df <- as.data.frame(clusts)

# determine significance

library(exact2x2)

pfish <- fisher.exact(table(cluster, clsts.df$youse))

pfish[[1]]## [1] 0.02222222# determine effect size

assocstats(table(cluster, clsts.df$youse))## X^2 df P(> X^2)

## Likelihood Ratio 10.008 1 0.0015586

## Pearson 10.000 1 0.0015654

##

## Phi-Coefficient : 1

## Contingency Coeff.: 0.707

## Cramer's V : 1assocstats(table(cluster, clsts.df$like))## X^2 df P(> X^2)

## Likelihood Ratio 1.6323 1 0.20139

## Pearson 1.0714 1 0.30062

##

## Phi-Coefficient : 0.327

## Contingency Coeff.: 0.311

## Cramer's V : 0.327Clustering is a highly complex topic and there many more complexities to it. However, this should have helped to get you started.

2 Correspondence Analysis

Correspondence analysis (CA) represents a multivariate statistical technique that provides a graphic method of exploring the relationship between variables in a contingency table. CA is conceptually similar to principal component analysis (PCA), but applies to categorical rather than continuous data.

CA consists out of the following four steps:

- Computing row and column averages

- Computing expected values

- Computing the residuals

- Plotting residuals

In this tutorial, we investigate similarities among amplifiers based on their co-occurrences (word embeddings) with adjectives. Adjective amplifiers are elements such as those in 1. to 5.

- The veryamplifier niceadjective man.

- A truelyamplifier remarkableadjective woman.

- He was reallyamplifier hesitantadjective.

- The child was awfullyamplifier loudadjective.

- The festival was soamplifier amazingadjective!

The similarity among adjective amplifiers can then be used to find clusters or groups of amplifiers that behave similarly and are interchangeable. To elaborate, adjective amplifiers are interchangeable with some variants but not with others (consider 6. to 8.; the question mark signifies that the example is unlikely to be used or grammatically not acceptable by L1 speakers of English).

- The veryamplifier niceadjective man.

- The reallyamplifier niceadjective man.

- ?The completelyamplifier niceadjective man.

We start by loading the data, and then displaying the data which is

called vsmdata and consist of 5,000 observations of

adjectives and contains two columns: one column with the adjectives

(Adjectives) and another column which has the amplifiers (0 means that

the adjective occurred without an amplifier).

# load data

vsmdata <- base::readRDS(url("https://slcladal.github.io/data/vsd.rda", "rb"))Amplifier | Adjective |

|---|---|

0 | serious |

0 | sure |

so | many |

0 | many |

0 | good |

0 | much |

0 | good |

0 | good |

0 | last |

0 | nice |

For this tutorial, we will reduce the number of amplifiers and adjectives and thus simplify the data to render it easier to understand what is going on. To simplify the data, we remove

- all non-amplified adjectives

- the adjectives many and much

- adjectives that are amplified less than 10 times

In addition, we collapse all amplifiers that occur less than 20 times into a bin category (other).

# simplify data

vsmdata_simp <- vsmdata %>%

# remove non-amplifier adjectives

dplyr::filter(Amplifier != 0,

Adjective != "many",

Adjective != "much") %>%

# collapse infrequent amplifiers

dplyr::group_by(Amplifier) %>%

dplyr::mutate(AmpFreq = dplyr::n()) %>%

dplyr::ungroup() %>%

dplyr::mutate(Amplifier = ifelse(AmpFreq > 20, Amplifier, "other")) %>%

# collapse infrequent adjectives

dplyr::group_by(Adjective) %>%

dplyr::mutate(AdjFreq = dplyr::n()) %>%

dplyr::ungroup() %>%

dplyr::mutate(Adjective = ifelse(AdjFreq > 10, Adjective, "other")) %>%

dplyr::filter(Adjective != "other") %>%

dplyr::select(-AmpFreq, -AdjFreq)Amplifier | Adjective |

|---|---|

very | good |

really | nice |

really | good |

really | bad |

very | nice |

really | nice |

very | hard |

other | good |

really | nice |

really | good |

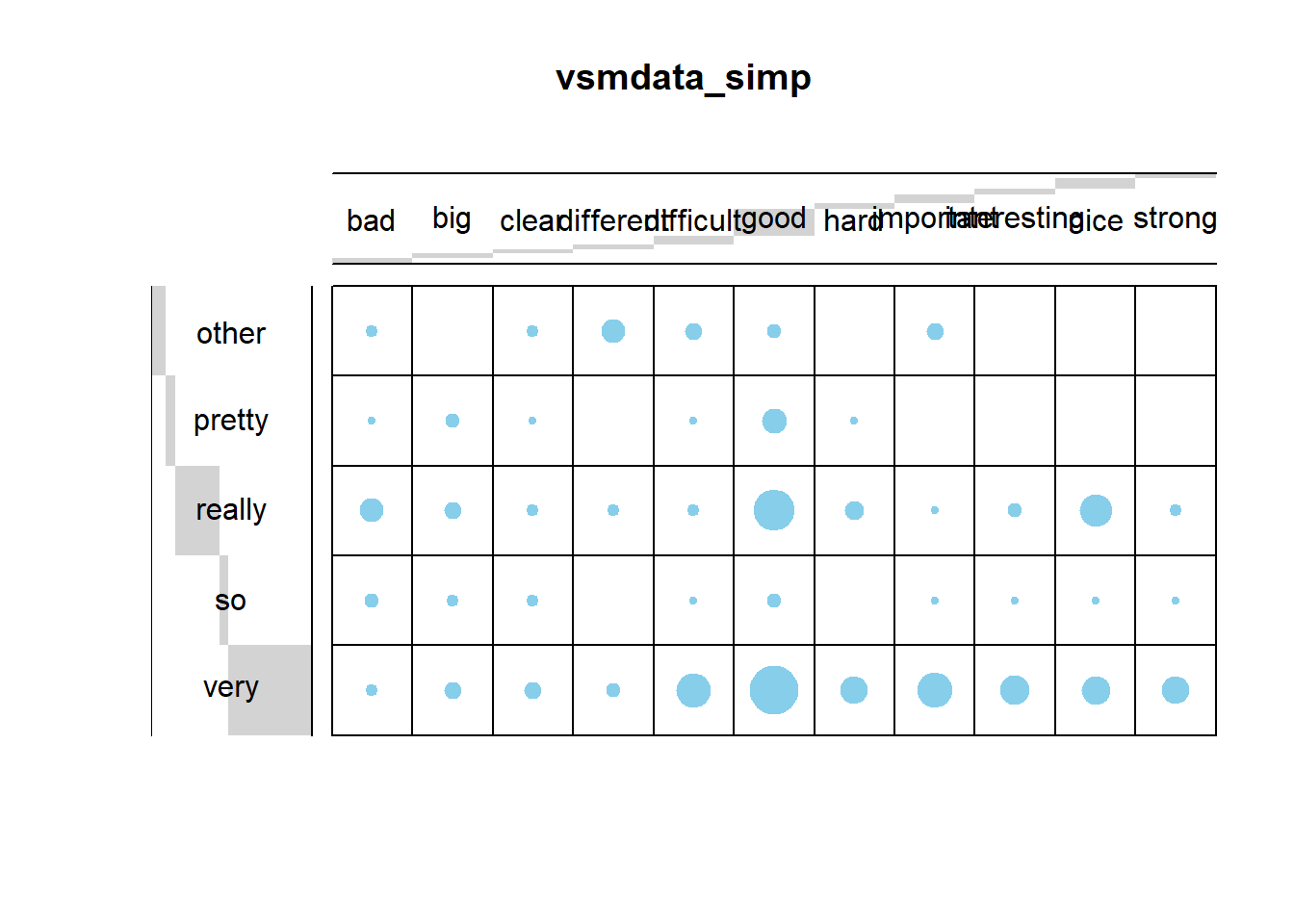

We now use a balloon plot to see if there are any potential correlations between amplifiers and adjectives.

# 1. convert the data as a table

dt <- as.matrix(table(vsmdata_simp))

# 2. Graph

balloonplot(t(dt), main ="vsmdata_simp", xlab ="", ylab="",

label = FALSE, show.margins = FALSE)

The balloon plot suggests that there are potential correlations as the dots (balloons) are not distributed evenly according to frequency. To validate if there is significant correlation between the amplifier types and the adjectives using a \(\chi\)2- test.

chisq <- chisq.test(dt)

chisq##

## Pearson's Chi-squared test

##

## data: dt

## X-squared = 124.4, df = 40, p-value = 1.375e-10The \(\chi\)2- test confirms that there is a significant correlations between amplifier types and the adjectives.

res.ca <- FactoMineR::CA(dt, graph = FALSE)

# inspect results of the CA

#print(res.ca)

eig.val <- get_eigenvalue(res.ca)

eig.val## eigenvalue variance.percent cumulative.variance.percent

## Dim.1 0.24138007 49.868237 49.86824

## Dim.2 0.14687839 30.344536 80.21277

## Dim.3 0.06125177 12.654392 92.86716

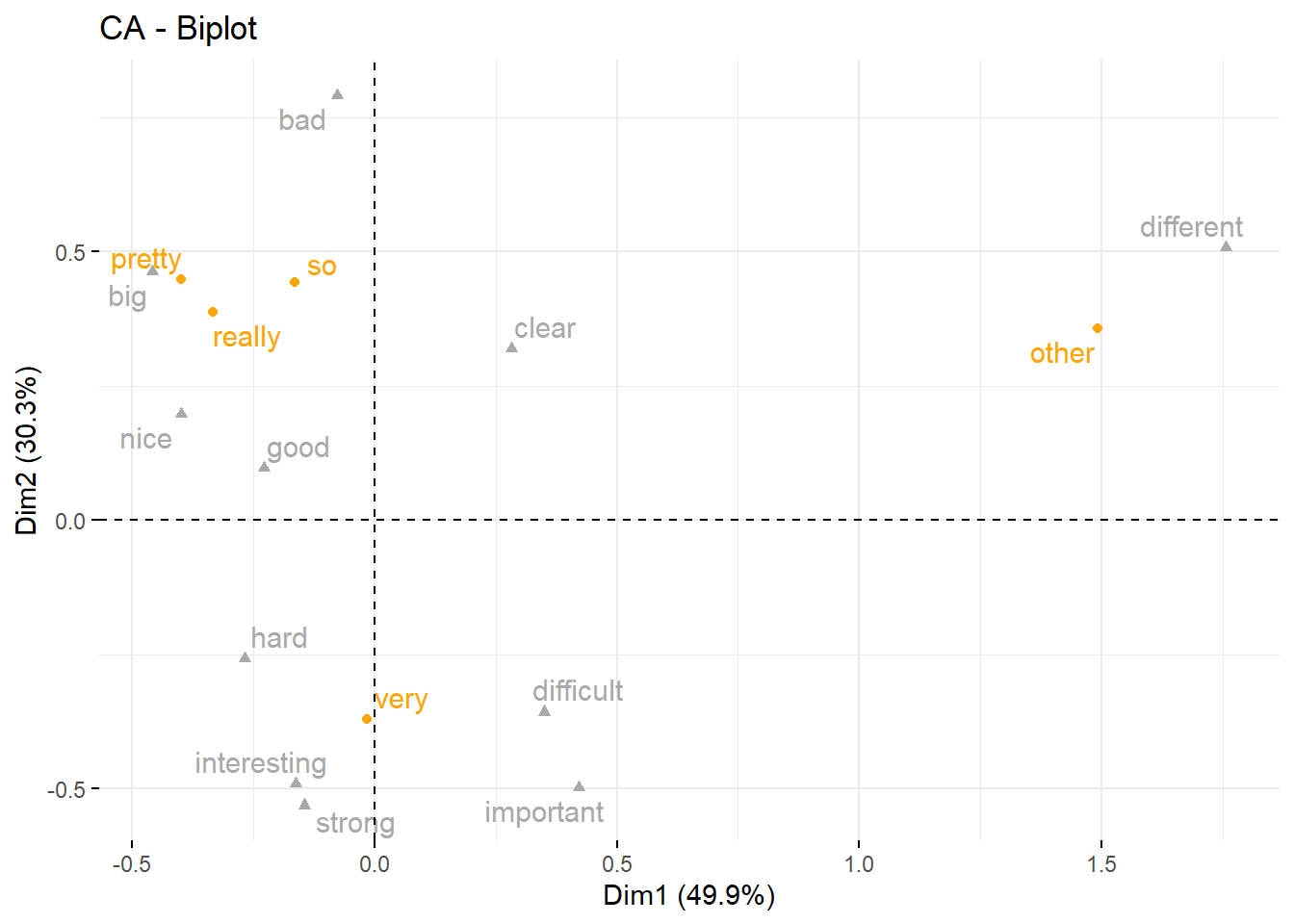

## Dim.4 0.03452547 7.132836 100.00000The display of the eigenvalues provides information on the amount of variance that is explained by each dimension. The first dimension explains 49.87 percent of the variance, the second dimension explains another 30.34 percent of the variance, leaving all other variables with relative moderate explanatory power as they only account for 20 percent variance. We now plot and interpret the results of the CA.

# repel= TRUE to avoid text overlapping (slow if many point)

fviz_ca_biplot(res.ca,

repel = TRUE,

col.row = "orange",

col.col = "darkgray")

The results of the CA show that the adjective different is collocating with other amplifiers while very is collocating with difficult and important, pretty is collocating with big, really is collocating with nice, and so is collocating with bad.

Citation & Session Info

Schweinberger, Martin. 2023. Cluster and Correspondence Analysis in R. Brisbane: The University of Queensland. url: https://ladal.edu.au/clust.html (Version 2023.05.31).

@manual{schweinberger2023clust,

author = {Schweinberger, Martin},

title = {Cluster and Correspondence Analysis in R},

note = {https://ladal.edu.au/clust.html},

year = {2023},

organization = "The University of Queensland, Australia. School of Languages and Cultures},

address = {Brisbane},

edition = {2023.05.31}

}sessionInfo()## R version 4.2.2 (2022-10-31 ucrt)

## Platform: x86_64-w64-mingw32/x64 (64-bit)

## Running under: Windows 10 x64 (build 22621)

##

## Matrix products: default

##

## locale:

## [1] LC_COLLATE=English_Australia.utf8 LC_CTYPE=English_Australia.utf8

## [3] LC_MONETARY=English_Australia.utf8 LC_NUMERIC=C

## [5] LC_TIME=English_Australia.utf8

##

## attached base packages:

## [1] grid stats graphics grDevices utils datasets methods

## [8] base

##

## other attached packages:

## [1] gplots_3.1.3 tibble_3.2.1 flextable_0.9.1 exact2x2_1.6.8

## [5] exactci_1.4-2 testthat_3.1.8 ssanv_1.1 vcd_1.4-11

## [9] ape_5.7-1 pvclust_2.2-0 seriation_1.4.2 factoextra_1.0.7

## [13] ggplot2_3.4.2 cluster_2.1.4

##

## loaded via a namespace (and not attached):

## [1] colorspace_2.1-0 ggsignif_0.6.4 ellipsis_0.3.2

## [4] estimability_1.4.1 httpcode_0.3.0 rstudioapi_0.14

## [7] ggpubr_0.6.0 farver_2.1.1 ggrepel_0.9.3

## [10] DT_0.28 fansi_1.0.4 mvtnorm_1.1-3

## [13] xml2_1.3.4 codetools_0.2-19 leaps_3.1

## [16] cachem_1.0.8 knitr_1.43 jsonlite_1.8.4

## [19] broom_1.0.4 shiny_1.7.4 compiler_4.2.2

## [22] backports_1.4.1 emmeans_1.8.6 assertthat_0.2.1

## [25] fastmap_1.1.1 cli_3.6.1 later_1.3.1

## [28] htmltools_0.5.5 tools_4.2.2 gtable_0.3.3

## [31] glue_1.6.2 reshape2_1.4.4 dplyr_1.1.2

## [34] FactoMineR_2.8 Rcpp_1.0.10 carData_3.0-5

## [37] jquerylib_0.1.4 fontquiver_0.2.1 vctrs_0.6.2

## [40] crul_1.4.0 nlme_3.1-162 iterators_1.0.14

## [43] lmtest_0.9-40 xfun_0.39 stringr_1.5.0

## [46] brio_1.1.3 mime_0.12 lifecycle_1.0.3

## [49] gtools_3.9.4 rstatix_0.7.2 klippy_0.0.0.9500

## [52] ca_0.71.1 MASS_7.3-60 zoo_1.8-12

## [55] scales_1.2.1 TSP_1.2-4 ragg_1.2.5

## [58] promises_1.2.0.1 parallel_4.2.2 fontLiberation_0.1.0

## [61] yaml_2.3.7 curl_5.0.0 gdtools_0.3.3

## [64] sass_0.4.6 stringi_1.7.12 fontBitstreamVera_0.1.1

## [67] highr_0.10 foreach_1.5.2 caTools_1.18.2

## [70] zip_2.3.0 rlang_1.1.1 pkgconfig_2.0.3

## [73] systemfonts_1.0.4 bitops_1.0-7 evaluate_0.21

## [76] lattice_0.21-8 purrr_1.0.1 labeling_0.4.2

## [79] htmlwidgets_1.6.2 tidyselect_1.2.0 plyr_1.8.8

## [82] magrittr_2.0.3 R6_2.5.1 generics_0.1.3

## [85] multcompView_0.1-9 pillar_1.9.0 withr_2.5.0

## [88] abind_1.4-5 scatterplot3d_0.3-44 car_3.1-2

## [91] crayon_1.5.2 gfonts_0.2.0 uuid_1.1-0

## [94] KernSmooth_2.23-21 utf8_1.2.3 rmarkdown_2.21

## [97] officer_0.6.2 data.table_1.14.8 digest_0.6.31

## [100] flashClust_1.01-2 xtable_1.8-4 tidyr_1.3.0

## [103] httpuv_1.6.11 textshaping_0.3.6 openssl_2.0.6

## [106] munsell_0.5.0 registry_0.5-1 bslib_0.4.2

## [109] askpass_1.1References

If you want to render the R Notebook on your machine, i.e. knitting the document to html or a pdf, you need to make sure that you have R and RStudio installed and you also need to download the bibliography file and store it in the same folder where you store the Rmd file.↩︎