Fixed- and Mixed-Effects Regression Models in R

Martin Schweinberger

2024-11-08

Introduction

This tutorial introduces regression analyses (also called regression modeling) using R.1 Regression models are among the most widely used quantitative methods in the language sciences to assess if and how predictors (variables or interactions between variables) correlate with a certain response.

This tutorial is aimed at intermediate and advanced users of R with the aim of showcasing how to perform regression analysis using R. The aim is not to provide a fully-fledged analysis but rather to show and exemplify common regression types, model diagnostics, and model fitting using R.

The entire R Notebook for the tutorial can be downloaded here.

If you want to render the R Notebook on your machine, i.e. knitting the

document to html or a pdf, you need to make sure that you have R and

RStudio installed and you also need to download the bibliography

file and store it in the same folder where you store the

Rmd or the Rproj file.

Regression models are so popular because they can

incorporate many predictors in a single model (multivariate: allows to test the impact of one predictor while the impact of (all) other predictors is controlled for)

extremely flexible and and can be fitted to different types of predictors and dependent variables

provide output that can be easily interpreted

conceptually relative simple and not overly complex from a mathematical perspective

R offers various ready-made functions with which implementing different types of regression models is very easy.

The most widely use regression models are

linear regression (dependent variable is numeric, no outliers)

logistic regression (dependent variable is binary)

ordinal regression (dependent variable represents an ordered factor, e.g. Likert items)

multinomial regression (dependent variable is categorical)

The major difference between these types of models is that they take different types of dependent variables: linear regressions take numeric, logistic regressions take nominal variables, ordinal regressions take ordinal variables, and Poisson regressions take dependent variables that reflect counts of (rare) events. Robust regression, in contrast, is a simple multiple linear regression that is able to handle outliers due to a weighing procedure.

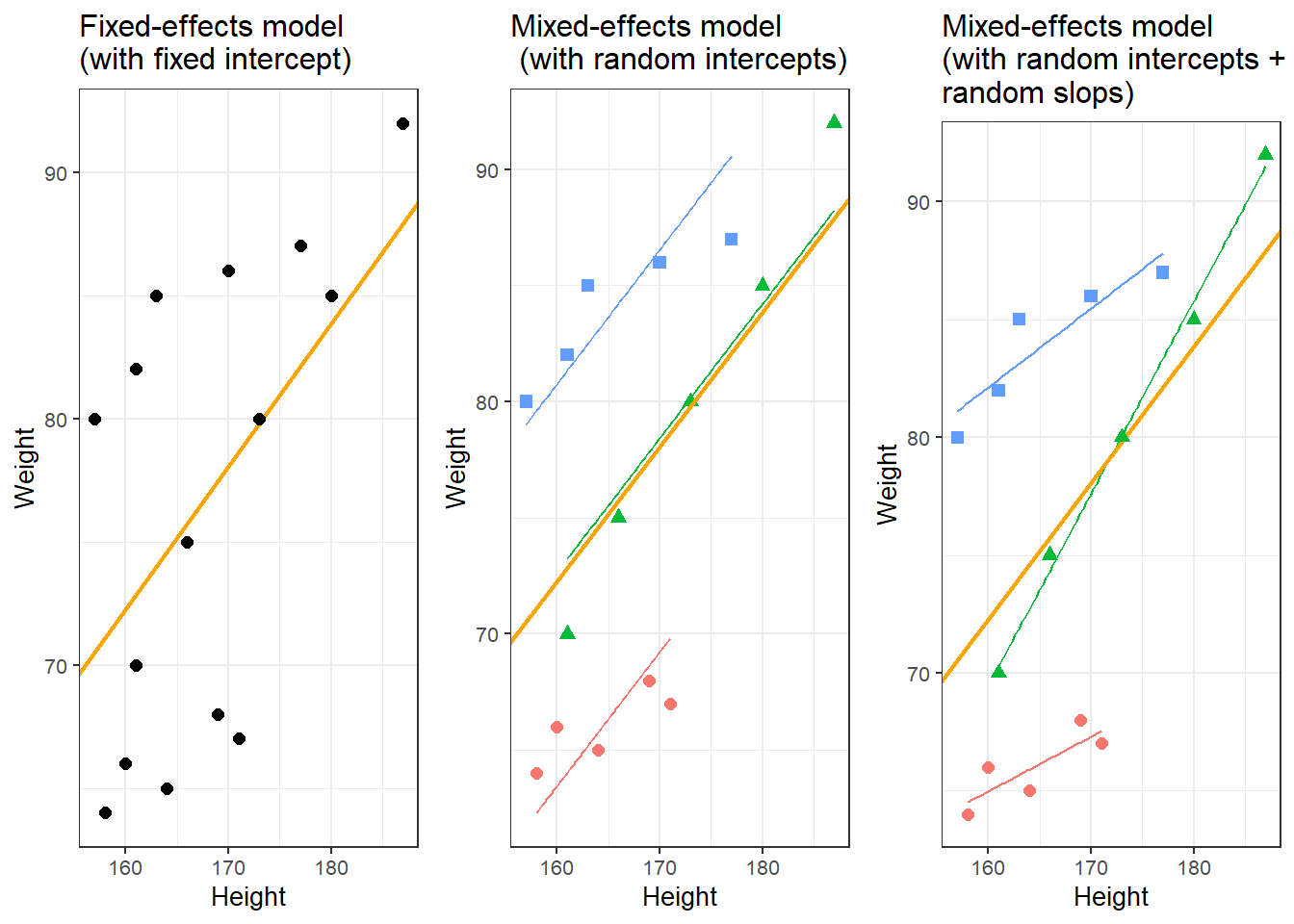

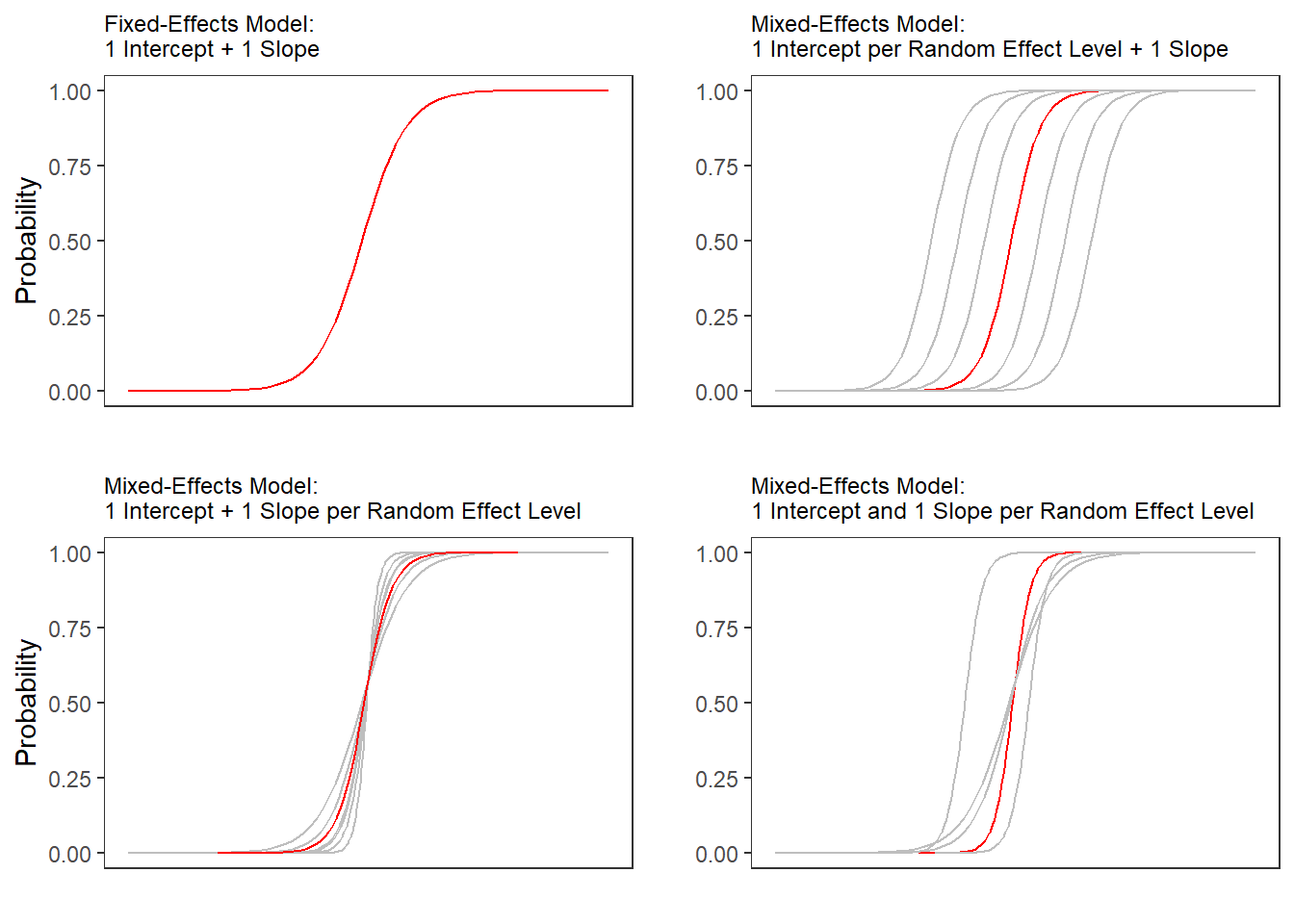

If regression models contain a random effect structure which is used to model nestedness or dependence among data points, the regression models are called mixed-effect models. regressions that do not have a random effect component to model nestedness or dependence are referred to as fixed-effect regressions (we will have a closer look at the difference between fixed and random effects below).

There are two basic types of regression models:

fixed-effects regression models

mixed-effects regression models (which are fitted using the

lme4package (Bates et al. 2015) in this tutorial).

Fixed-effects regression models are models that assume a non-hierarchical data structure, i.e. data where data points are not nested or grouped in higher order categories (e.g. students within classes). The first part of this tutorial focuses on fixed-effects regression models while the second part focuses on mixed-effects regression models.

There exists a wealth of literature focusing on regression analysis and the concepts it is based on. For instance, there are Achen (1982), Bortz (2006), Crawley (2005), Faraway (2002), Field, Miles, and Field (2012) (my personal favorite), Gries (2021), Levshina (2015), and Wilcox (2009) to name just a few. Introductions to regression modeling in R are Baayen (2008), Crawley (2012), Gries (2021), or Levshina (2015).

The basic principle

The idea behind regression analysis is expressed formally in the equation below where \(f_{(x)}\) is the \(y\)-value we want to predict, \(\alpha\) is the intercept (the point where the regression line crosses the \(y\)-axis), \(\beta\) is the coefficient (the slope of the regression line).

\[\begin{equation} f_{(x)} = \alpha + \beta_{i}x + \epsilon \end{equation}\]

To understand what this means, let us imagine that we have collected information about the how tall people are and what they weigh. Now we want to predict the weight of people of a certain height - let’s say 180cm.

Height | Weight |

|---|---|

173 | 80 |

169 | 68 |

176 | 72 |

166 | 75 |

161 | 70 |

164 | 65 |

160 | 62 |

158 | 60 |

180 | 85 |

187 | 92 |

We can run a simple linear regression on the data and we get the following output:

# model for upper panels

summary(glm(Weight ~ 1, data = df))##

## Call:

## glm(formula = Weight ~ 1, data = df)

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 72.900 3.244 22.48 3.24e-09 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for gaussian family taken to be 105.2111)

##

## Null deviance: 946.9 on 9 degrees of freedom

## Residual deviance: 946.9 on 9 degrees of freedom

## AIC: 77.885

##

## Number of Fisher Scoring iterations: 2To estimate how much some weights who is 180cm tall, we would multiply the coefficient (slope of the line) with 180 (\(x\)) and add the value of the intercept (point where line crosses the \(y\)-axis). If we plug in the numbers from the regression model below, we get

\[\begin{equation} -93.77 + 0.98 ∗ 180 = 83.33 (kg) \end{equation}\]

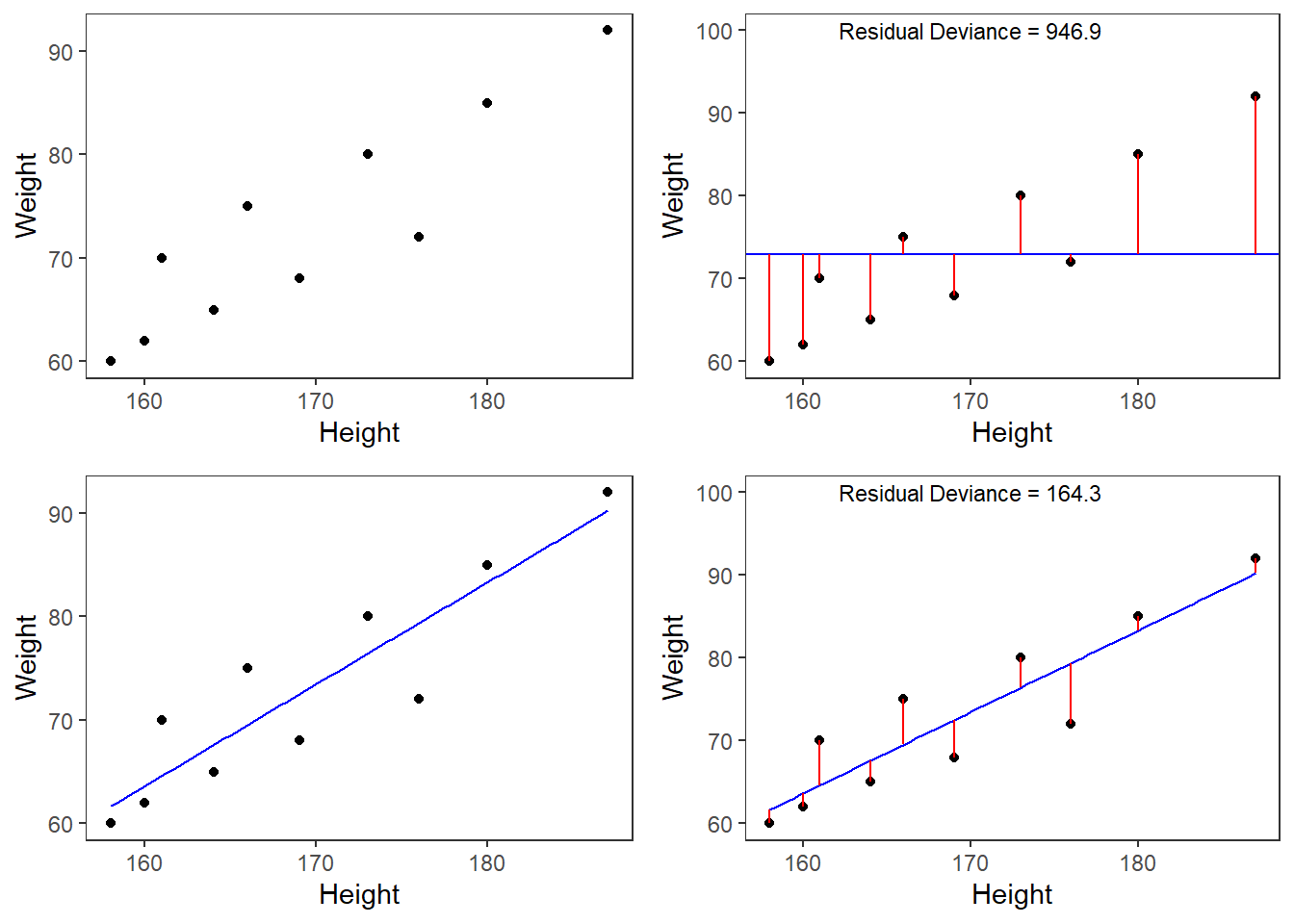

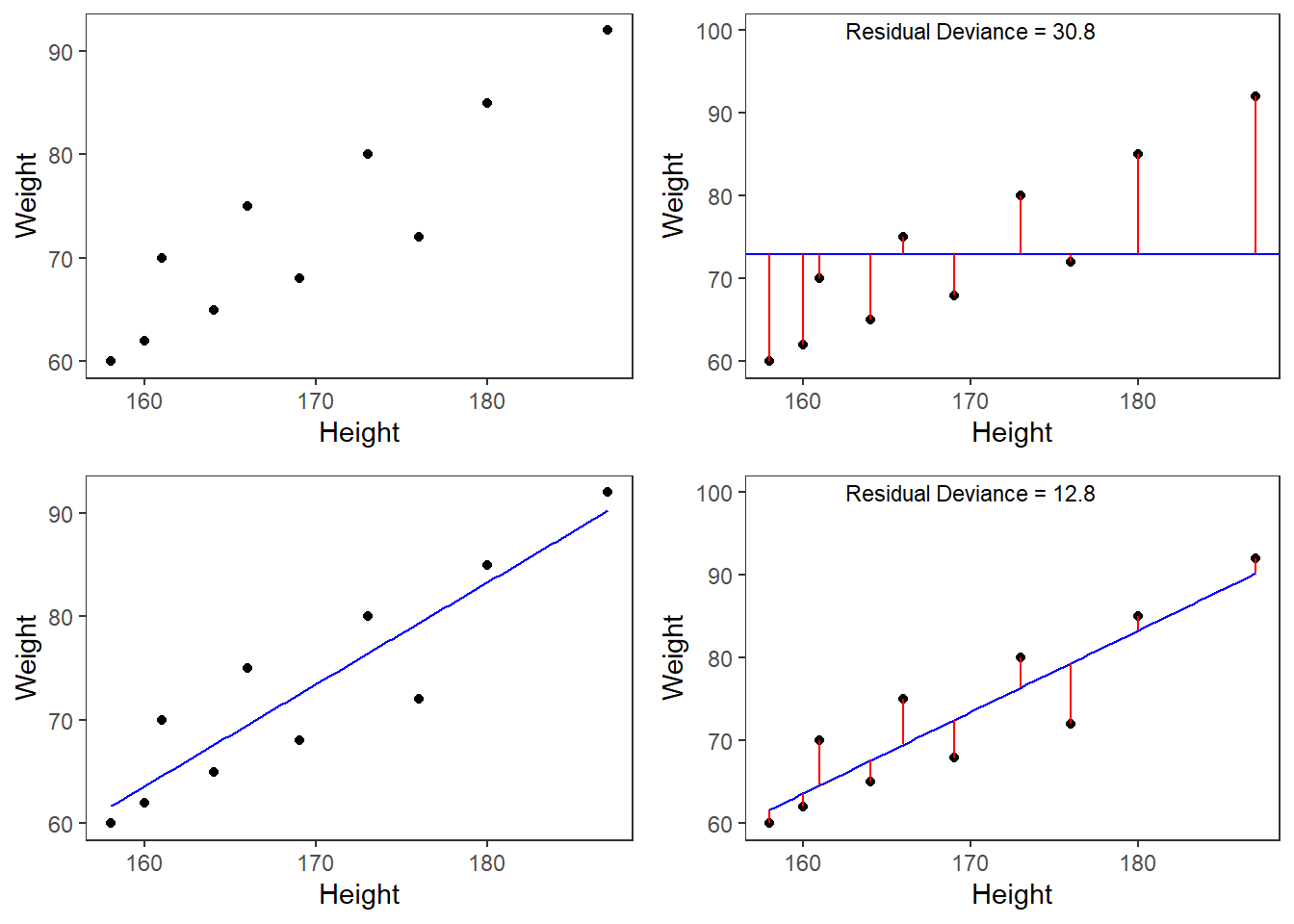

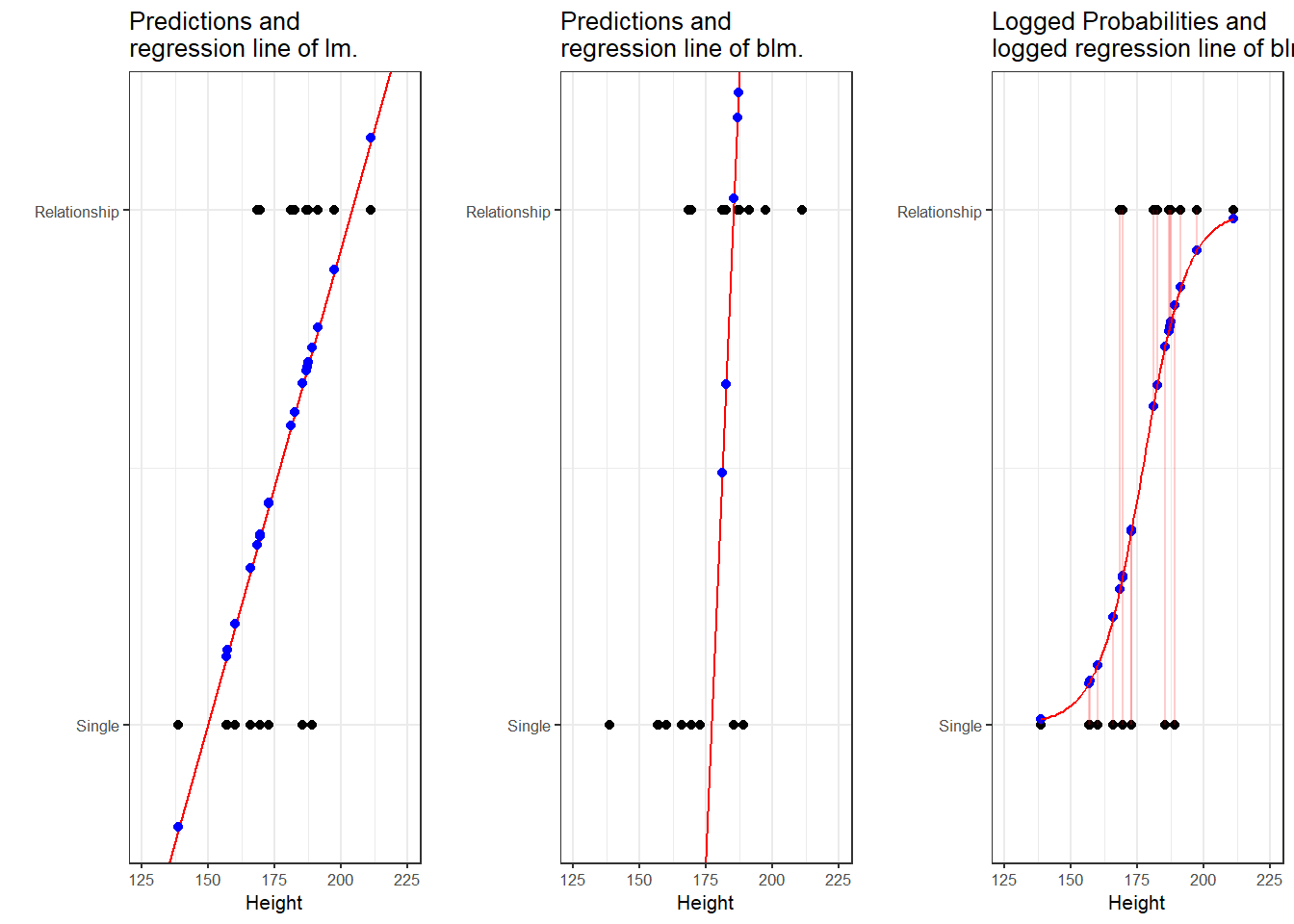

A person who is 180cm tall is predicted to weigh 83.33kg. Thus, the predictions of the weights are visualized as the red line in the figure below. Such lines are called regression lines. Regression lines are those lines where the sum of the red lines should be minimal. The slope of the regression line is called coefficient and the point where the regression line crosses the y-axis at x = 0 is called the intercept. Other important concepts in regression analysis are variance and residuals. Residuals are the distance between the line and the points (the red lines) and it is also called variance.

Some words about the plots in the figure above: the upper left panel shows the raw observed data (black dots). The upper right panel shows the mean weight (blue line) and the residuals (in red). residuals are the distances from the expected or predicted values to the observed values (in this case the mean is the most most basic model which we use to predict values while the observed values simply represent the actual data points). The lower left panel shows observed values and the regression line, i.e, that line which, when drawn through the data points, will have the lowest sum of residuals. The lower right panel shows the regression line and the residuals, i.e. the distances between the expected or predicted values to the actual observed values (in red). Note that the sum of residuals in the lower right panel is much smaller than the sum of residuals in the upper right panel. This suggest that considering Height is a good idea as it explains a substantive amount of residual error and reduces the sum of residuals (or variance).2

Now that we are familiar with the basic principle of regression modeling - i.e. finding the line through data that has the smallest sum of residuals, we will apply this to a linguistic example.

Preparation and session set up

This tutorial is based on R. If you have not installed R or are new to it, you will find an introduction to and more information how to use R here. For this tutorials, we need to install certain packages from an R library so that the scripts shown below are executed without errors. Before turning to the code below, please install the packages by running the code below this paragraph. If you have already installed the packages mentioned below, then you can skip ahead and ignore this section. To install the necessary packages, simply run the following code - it may take some time (between 1 and 5 minutes to install all of the libraries so you do not need to worry if it takes some time).

# install

install.packages("Boruta")

install.packages("car")

install.packages("emmeans")

install.packages("effects")

install.packages("flextable")

install.packages("glmulti")

install.packages("ggplot2")

install.packages("ggpubr")

install.packages("Hmisc")

install.packages("knitr")

install.packages("lme4")

install.packages("MASS")

install.packages("mclogit")

install.packages("MuMIn")

install.packages("nlme")

install.packages("ordinal")

install.packages("rms")

install.packages("robustbase")

install.packages("sjPlot")

install.packages("stringr")

install.packages("tibble")

install.packages("dplyr")

install.packages("vcd")

install.packages("vip")

install.packages("gridExtra")

install.packages("performance")

install.packages("see")

# install klippy for copy-to-clipboard button in code chunks

install.packages("remotes")

remotes::install_github("rlesur/klippy")Now that we have installed the packages, we activate them as shown below.

# set options

options(stringsAsFactors = F) # no automatic data transformation

options("scipen" = 100, "digits" = 12) # suppress math annotation

# load packages

library(Boruta)

library(car)

library(effects)

library(emmeans)

library(flextable)

library(glmulti)

library(ggfortify)

library(ggplot2)

library(ggpubr)

library(Hmisc)

library(knitr)

library(lme4)

library(MASS)

library(mclogit)

library(MuMIn)

library(nlme)

library(ordinal)

library(rms)

library(robustbase)

library(sjPlot)

library(stringr)

library(tibble)

library(vcd)

library(vip)

library(gridExtra)

library(performance)

library(see)

# activate klippy for copy-to-clipboard button

klippy::klippy()Once you have installed R and RStudio and initiated the session by executing the code shown above, you are good to go.

1 Fixed Effects Regression

Before turning to mixed-effects models which are able to represent hierarchical data structures, we will focus on traditional fixed effects regression models and begin with multiple linear regression.

Simple Linear Regression

This section focuses on a very widely used statistical method which is called regression. Regressions are used when we try to understand how independent variables correlate with a dependent or outcome variable. So, if you want to investigate how a certain factor affects an outcome, then a regression is the way to go. We will have a look at two simple examples to understand what the concepts underlying a regression mean and how a regression works. The R code, that we will use, is adapted from many highly recommendable introductions which also focus on regression (among other types of analyses), for example, Gries (2021), Winter (2019), Levshina (2015), Winter (2019) or Wilcox (2009). Baayen (2008) is also very good but probably not the first book one should read about statistics but it is highly recommendable for advanced learners.

Although the basic logic underlying regressions is identical to the conceptual underpinnings of analysis of variance (ANOVA), a related method, sociolinguists have traditionally favored regression analysis in their studies while ANOVAs have been the method of choice in psycholinguistics. The preference for either method is grounded in historical happenstances and the culture of these subdisciplines rather than in methodological reasoning. However, ANOVA are more restricted in that they can only take numeric dependent variables and they have stricter model assumptions that are violated more readily. In addition, a minor difference between regressions and ANOVA lies in the fact that regressions are based on the \(t\)-distribution while ANOVAs use the F-distribution (however, the F-value is simply the value of t squared or t2). Both t- and F-values report on the ratio between explained and unexplained variance.

The idea behind regression analysis is expressed formally in the equation below where\(f_{(x)}\) is the y-value we want to predict, \(\alpha\) is the intercept (the point where the regression line crosses the y-axis at x = 0), \(\beta\) is the coefficient (the slope of the regression line).

\[\begin{equation} f_{(x)} = \alpha + \beta_{i}x + \epsilon \end{equation}\]

In other words, to estimate how much some weights who is 180cm tall, we would multiply the coefficient (slope of the line) with 180 (x) and add the value of the intercept (point where line crosses the y-axis at x = 0).

However, the idea behind regressions can best be described graphically: imagine a cloud of points (like the points in the scatterplot in the upper left panel below). Regressions aim to find that line which has the minimal summed distance between points and the line (like the line in the lower panels). Technically speaking, the aim of a regression is to find the line with the minimal deviance (or the line with the minimal sum of residuals). Residuals are the distance between the line and the points (the red lines) and it is also called variance.

Thus, regression lines are those lines where the sum of the red lines should be minimal. The slope of the regression line is called coefficient and the point where the regression line crosses the y-axis at x = 0 is called the intercept.

A word about standard errors (SE) is in order here because most commonly used statistics programs will provide SE values when reporting regression models. The SE is a measure that tells us how much the coefficients were to vary if the same regression were applied to many samples from the same population. A relatively small SE value therefore indicates that the coefficients will remain very stable if the same regression model is fitted to many different samples with identical parameters. In contrast, a large SE tells you that the model is volatile and not very stable or reliable as the coefficients vary substantially if the model is applied to many samples.

Mathematically, the SE is the standard deviation (SD) divided by the square root of the sample size (N) (see below).The SD is the square root of the deviance (that is, the SD is the square root of the sum of the mean \(\bar{x}\) minus each data point (xi) squared divided by the sample size (N) minus 1).

\[\begin{equation} Standard Error (SE) = \frac{\sum (\bar{x}-x_{i})^2/N-1}{\sqrt{N}} = \frac{SD}{\sqrt{N}} \end{equation}\]

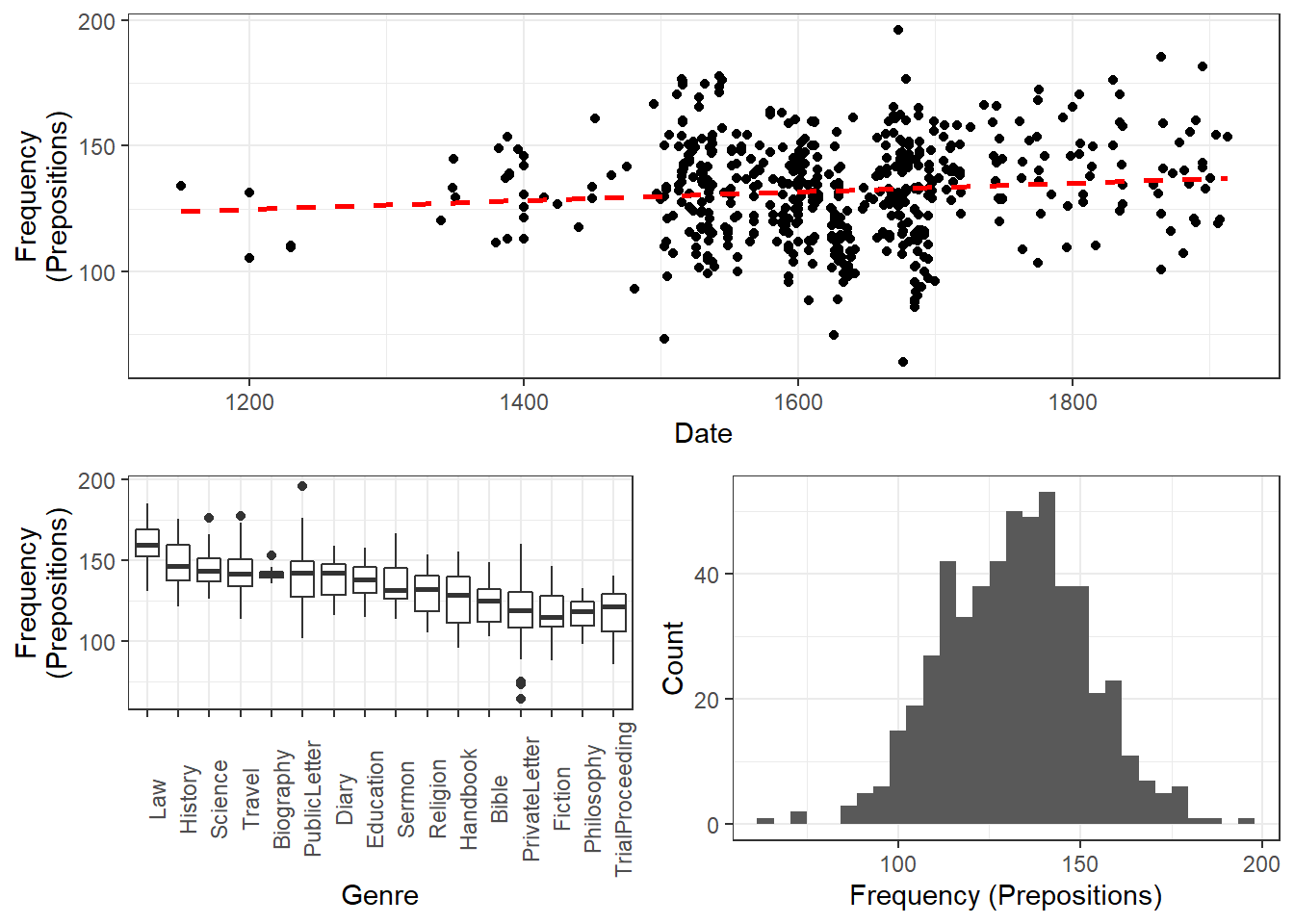

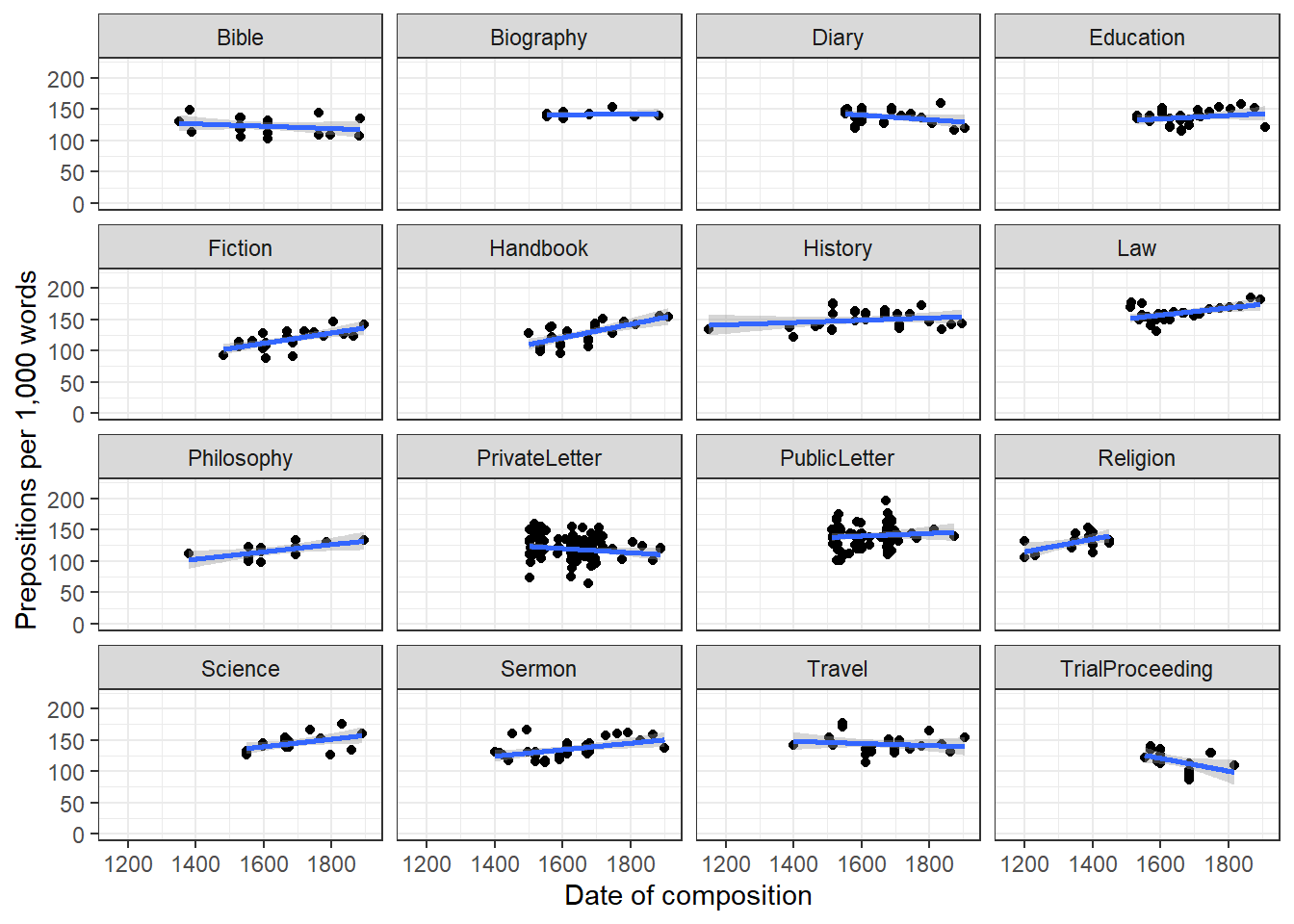

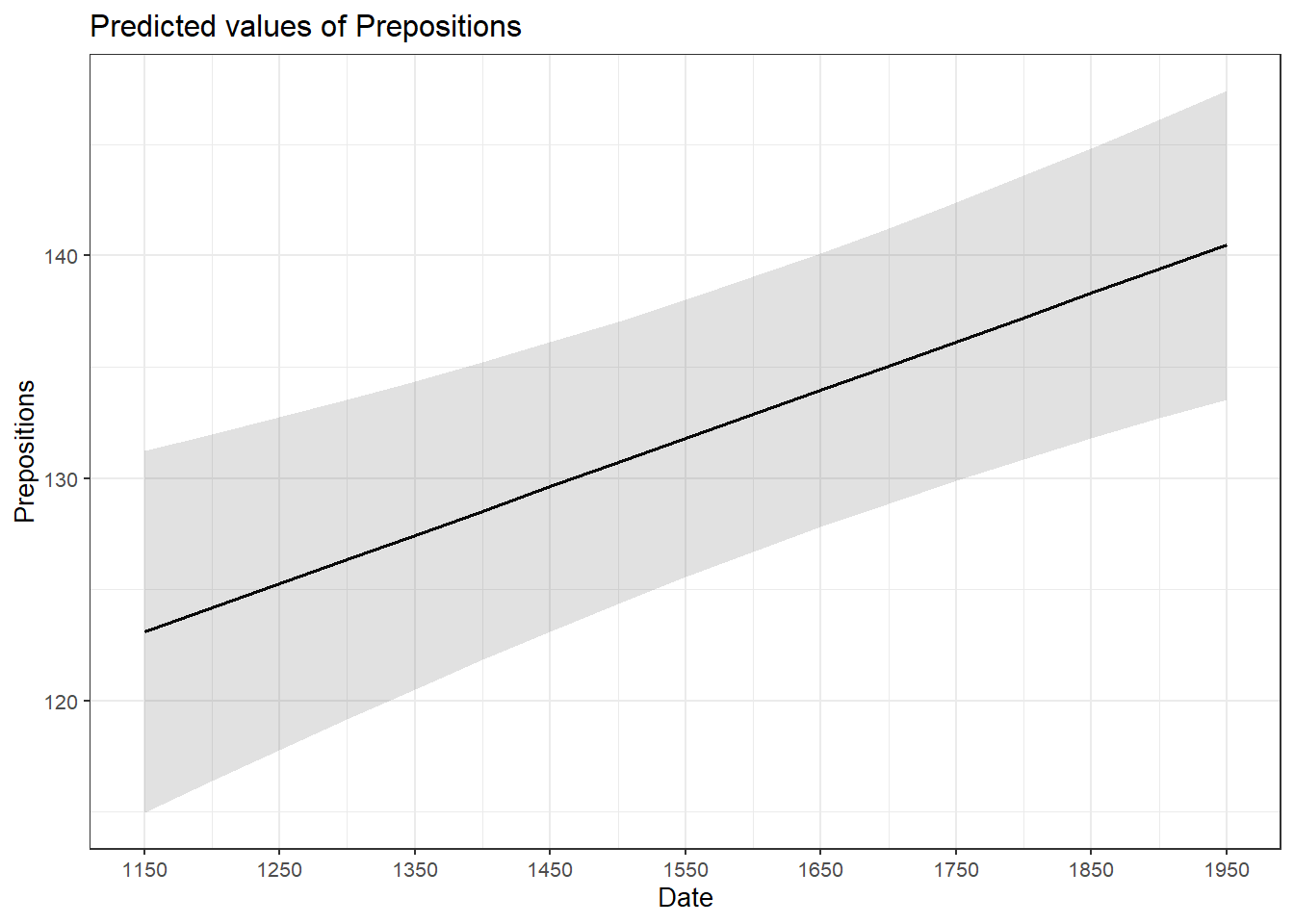

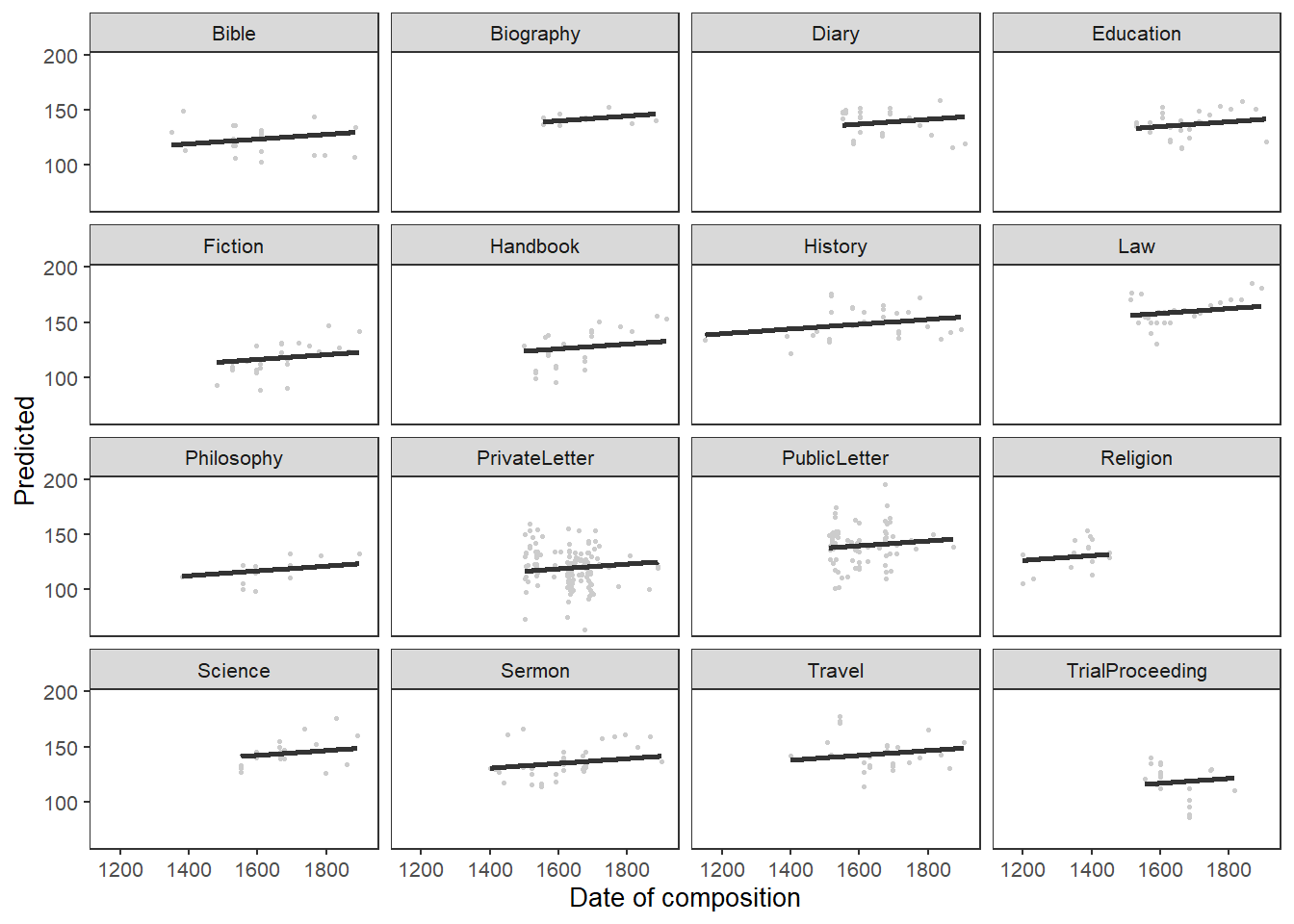

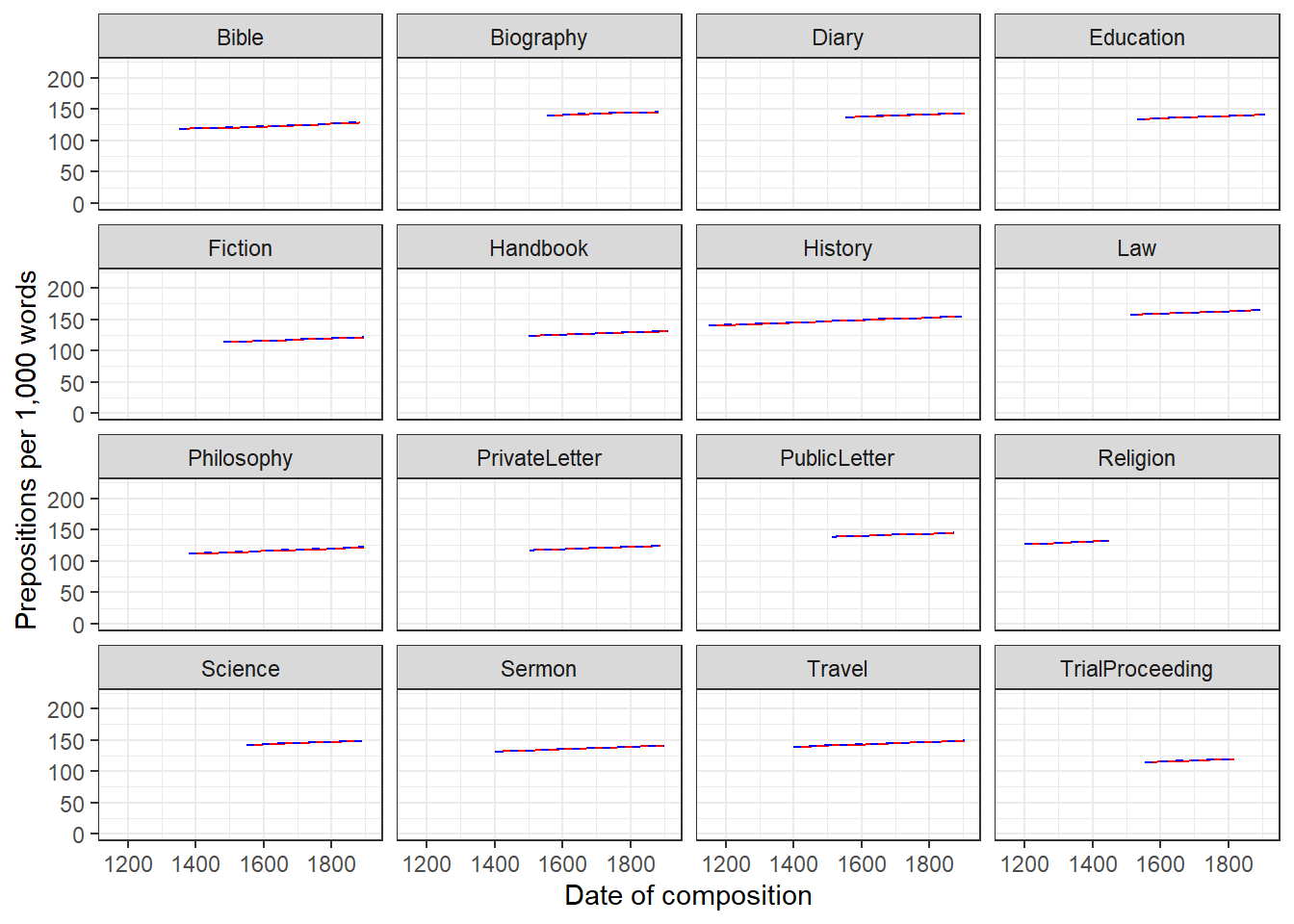

Example 1: Preposition Use across Real-Time

We will now turn to our first example. In this example, we will investigate whether the frequency of prepositions has changed from Middle English to Late Modern English. The reasoning behind this example is that Old English was highly synthetic compared with Present-Day English which comparatively analytic. In other words, while Old English speakers used case to indicate syntactic relations, speakers of Present-Day English use word order and prepositions to indicate syntactic relationships. This means that the loss of case had to be compensated by different strategies and maybe these strategies continued to develop and increase in frequency even after the change from synthetic to analytic had been mostly accomplished. And this prolonged change in compensatory strategies is what this example will focus on.

The analysis is based on data extracted from the Penn Corpora of Historical English (see http://www.ling.upenn.edu/hist-corpora/), that consists of 603 texts written between 1125 and 1900. In preparation of this example, all elements that were part-of-speech tagged as prepositions were extracted from the PennCorpora.

Then, the relative frequencies (per 1,000 words) of prepositions per text were calculated. This frequency of prepositions per 1,000 words represents our dependent variable. In a next step, the date when each letter had been written was extracted. The resulting two vectors were combined into a table which thus contained for each text, when it was written (independent variable) and its relative frequency of prepositions (dependent or outcome variable).

A regression analysis will follow the steps described below:

Extraction and processing of the data

Data visualization

Applying the regression analysis to the data

Diagnosing the regression model and checking whether or not basic model assumptions have been violated.

In a first step, we load functions that we may need (which in this case is a function that we will use to summarize the results of the analysis).

# load functions

source("https://slcladal.github.io/rscripts/slrsummary.r")After preparing our session, we can now load and inspect the data to get a first impression of its properties.

# load data

slrdata <- base::readRDS(url("https://slcladal.github.io/data/sld.rda", "rb"))Date | Genre | Text | Prepositions | Region |

|---|---|---|---|---|

1,736 | Science | albin | 166.01 | North |

1,711 | Education | anon | 139.86 | North |

1,808 | PrivateLetter | austen | 130.78 | North |

1,878 | Education | bain | 151.29 | North |

1,743 | Education | barclay | 145.72 | North |

1,908 | Education | benson | 120.77 | North |

1,906 | Diary | benson | 119.17 | North |

1,897 | Philosophy | boethja | 132.96 | North |

1,785 | Philosophy | boethri | 130.49 | North |

1,776 | Diary | boswell | 135.94 | North |

1,905 | Travel | bradley | 154.20 | North |

1,711 | Education | brightland | 149.14 | North |

1,762 | Sermon | burton | 159.71 | North |

1,726 | Sermon | butler | 157.49 | North |

1,835 | PrivateLetter | carlyle | 124.16 | North |

Inspecting the data is very important because it can happen that a data set may not load completely or that variables which should be numeric have been converted to character variables. If unchecked, then such issues could go unnoticed and cause trouble.

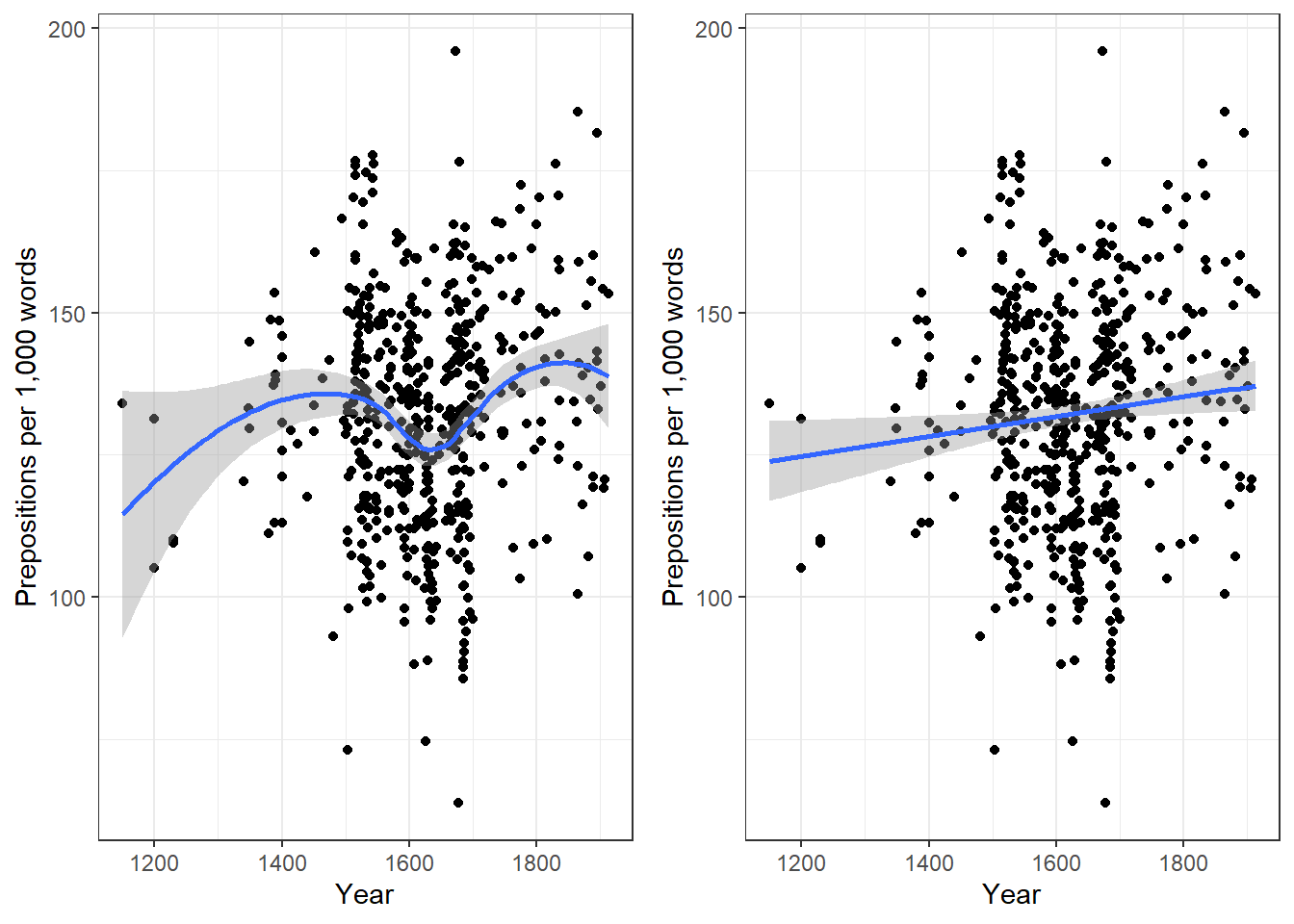

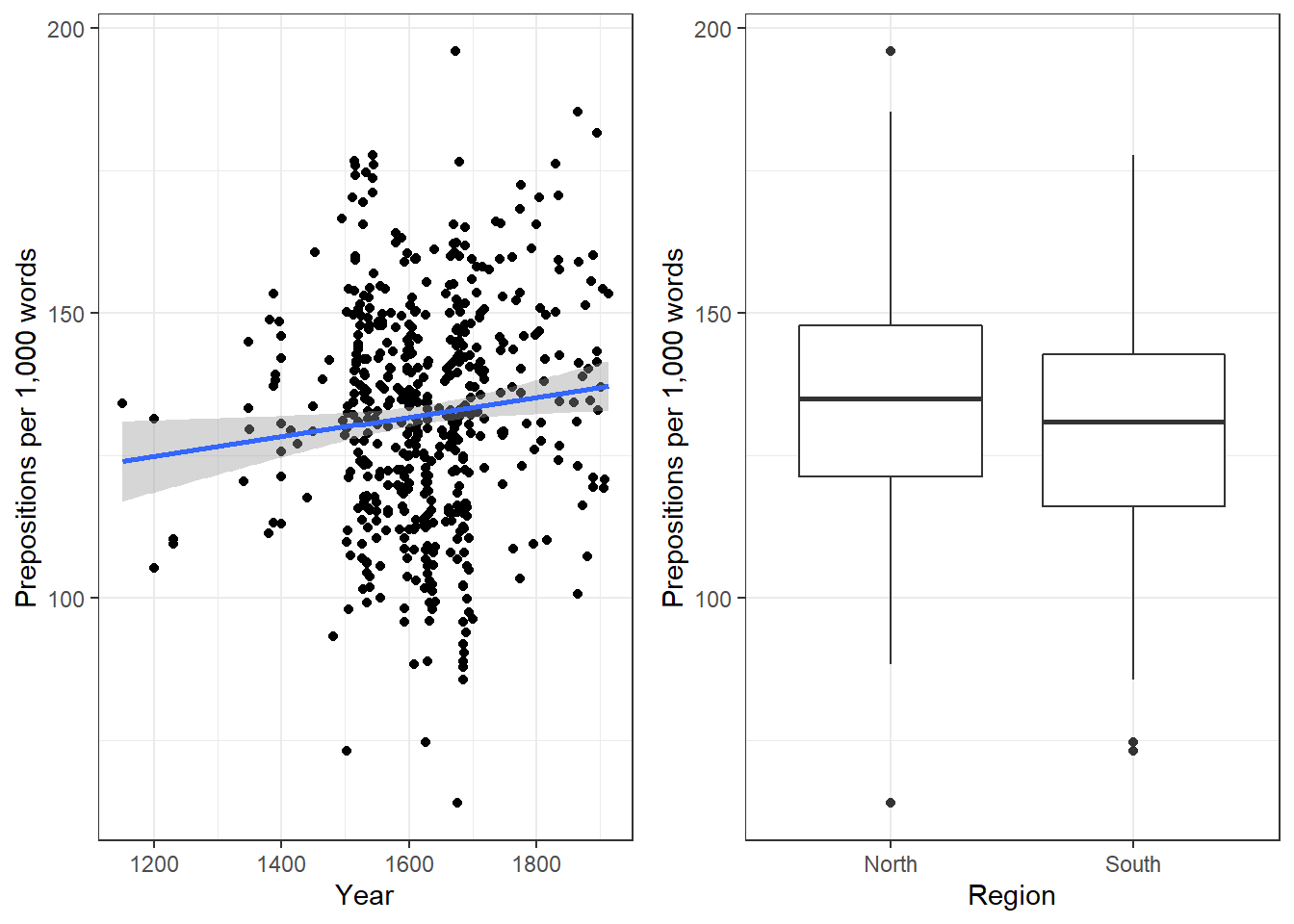

We will now plot the data to get a better understanding of what the data looks like.

p1 <- ggplot(slrdata, aes(Date, Prepositions)) +

geom_point() +

theme_bw() +

labs(x = "Year") +

labs(y = "Prepositions per 1,000 words") +

geom_smooth()

p2 <- ggplot(slrdata, aes(Date, Prepositions)) +

geom_point() +

theme_bw() +

labs(x = "Year") +

labs(y = "Prepositions per 1,000 words") +

geom_smooth(method = "lm") # with linear model smoothing!

# display plots

ggpubr::ggarrange(p1, p2, ncol = 2, nrow = 1)

Before beginning with the regression analysis, we will center the year. We center the values of year by subtracting each value from the mean of year. This can be useful when dealing with numeric variables because if we did not center year, we would get estimated values for year 0 (a year when English did not even exist yet). If a variable is centered, the regression provides estimates of the model refer to the mean of that numeric variable. In other words, centering can be very helpful, especially with respect to the interpretation of the results that regression models report.

# center date

slrdata$Date <- slrdata$Date - mean(slrdata$Date) We will now begin the regression analysis by generating a first regression model and inspect its results.

# create initial model

m1.lm <- lm(Prepositions ~ Date, data = slrdata)

# inspect results

summary(m1.lm)##

## Call:

## lm(formula = Prepositions ~ Date, data = slrdata)

##

## Residuals:

## Min 1Q Median 3Q Max

## -69.1012471 -13.8549421 0.5779091 13.3208913 62.8580401

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 132.19009310987 0.83863748040 157.62483 < 0.0000000000000002 ***

## Date 0.01732180307 0.00726746646 2.38347 0.017498 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 19.4339648 on 535 degrees of freedom

## Multiple R-squared: 0.010507008, Adjusted R-squared: 0.00865748837

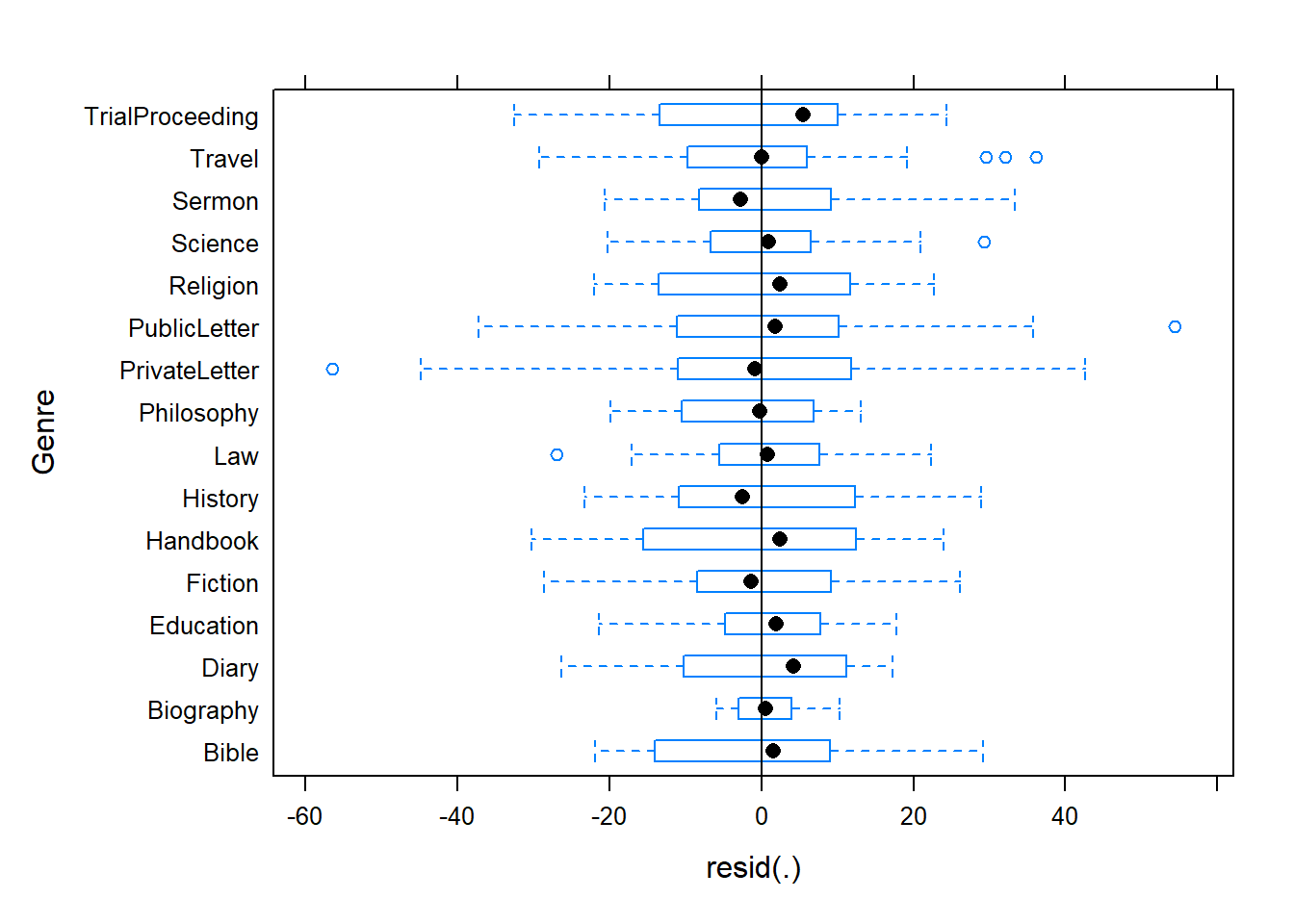

## F-statistic: 5.68093894 on 1 and 535 DF, p-value: 0.017498081The summary output starts by repeating the regression equation. Then, the model provides the distribution of the residuals. The residuals should be distributed normally with the absolute values of the Min and Max as well as the 1Q (first quartile) and 3Q (third quartile) being similar or ideally identical. In our case, the values are very similar which suggests that the residuals are distributed evenly and follow a normal distribution. The next part of the report is the coefficients table. The estimate for the intercept is the value of y at x = 0. The estimate for Date represents the slope of the regression line and tells us that with each year, the predicted frequency of prepositions increase by .01732 prepositions. The t-value is the Estimate divided by the standard error (Std. Error). Based on the t-value, the p-value can be calculated manually as shown below.

# use pt function (which uses t-values and the degrees of freedom)

2*pt(-2.383, nrow(slrdata)-1)## [1] 0.0175196401501The R2-values tell us how much variance is explained by our model. The baseline value represents a model that uses merely the mean. 0.0105 means that our model explains only 1.05 percent of the variance (0.010 x 100) - which is a tiny amount. The problem of the multiple R2 is that it will increase even if we add variables that explain almost no variance. Hence, multiple R2 encourages the inclusion of junk variables.

\[\begin{equation} R^2 = R^2_{multiple} = 1 - \frac{\sum (y_i - \hat{y_i})^2}{\sum (y_i - \bar y)^2} \end{equation}\]

The adjusted R2-value takes the number of predictors into account and, thus, the adjusted R2 will always be lower than the multiple R2. This is so because the adjusted R2 penalizes models for having predictors. The equation for the adjusted R2 below shows that the amount of variance that is explained by all the variables in the model (the top part of the fraction) must outweigh the inclusion of the number of variables (k) (lower part of the fraction). Thus, the adjusted R2 will decrease when variables are added that explain little or even no variance while it will increase if variables are added that explain a lot of variance.

\[\begin{equation} R^2_{adjusted} = 1 - (\frac{(1 - R^2)(n - 1)}{n - k - 1}) \end{equation}\]

If there is a big difference between the two R2-values, then the model contains (many) predictors that do not explain much variance which is not good. The F-statistic and the associated p-value tell us that the model, despite explaining almost no variance, is still significantly better than an intercept-only base-line model (or using the overall mean to predict the frequency of prepositions per text).

We can test this and also see where the F-values comes from by comparing the

# create intercept-only base-line model

m0.lm <- lm(Prepositions ~ 1, data = slrdata)

# compare the base-line and the more saturated model

anova(m1.lm, m0.lm, test = "F")## Analysis of Variance Table

##

## Model 1: Prepositions ~ Date

## Model 2: Prepositions ~ 1

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 535 202058.2576

## 2 536 204203.8289 -1 -2145.57126 5.68094 0.017498 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The F- and p-values are exactly those reported by the summary which shows where the F-values comes from and what it means; namely it denote the difference between the base-line and the more saturated model.

The degrees of freedom associated with the residual standard error are the number of cases in the model minus the number of predictors (including the intercept). The residual standard error is square root of the sum of the squared residuals of the model divided by the degrees of freedom. Have a look at he following to clear this up:

# DF = N - number of predictors (including intercept)

DegreesOfFreedom <- nrow(slrdata)-length(coef(m1.lm))

# sum of the squared residuals

SumSquaredResiduals <- sum(resid(m1.lm)^2)

# Residual Standard Error

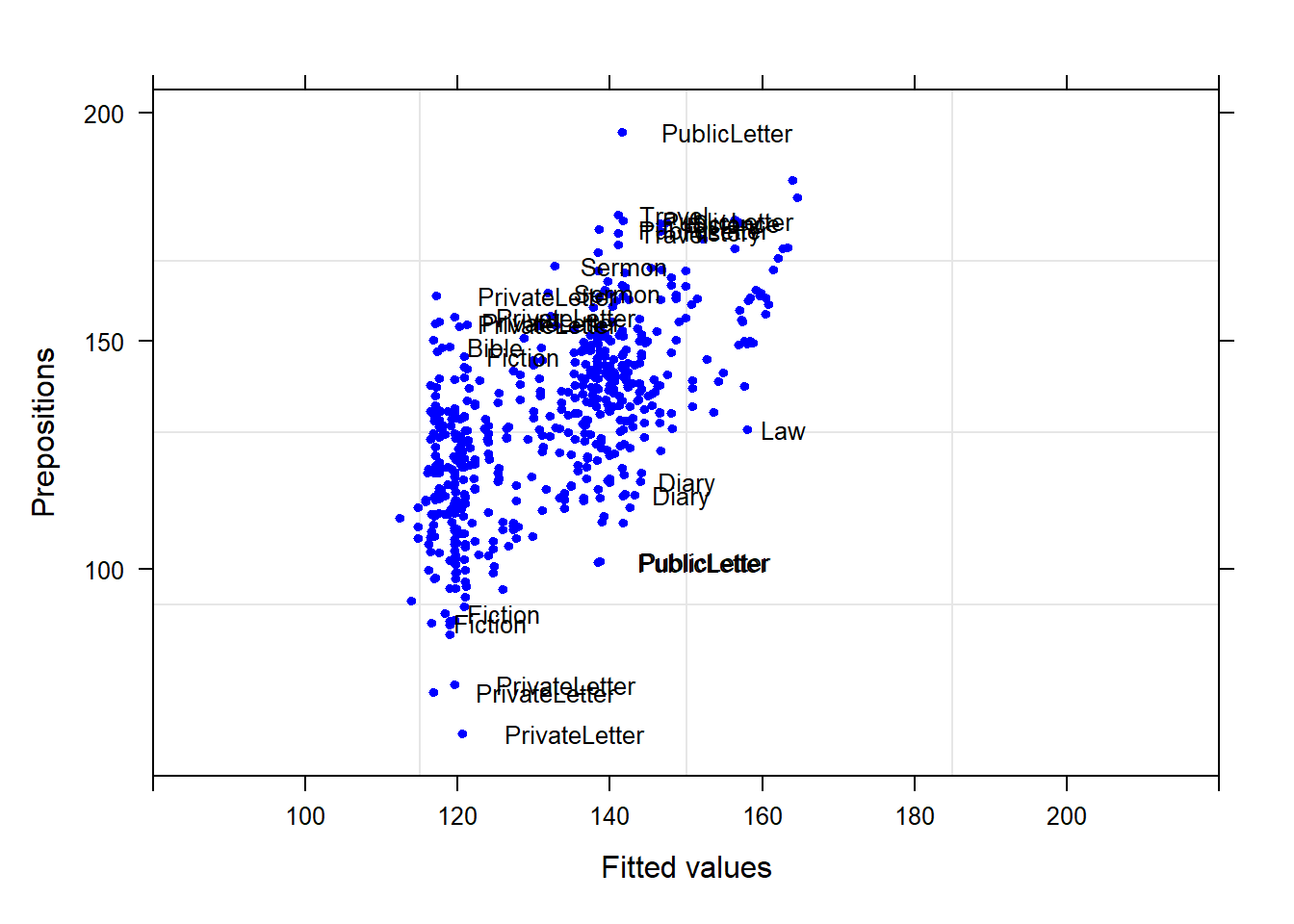

sqrt(SumSquaredResiduals/DegreesOfFreedom); DegreesOfFreedom## [1] 19.4339647585## [1] 535We will now check if mathematical assumptions have been violated (homogeneity of variance) or whether the data contains outliers. We check this using diagnostic plots.

# generate data

df2 <- data.frame(id = 1:length(resid(m1.lm)),

residuals = resid(m1.lm),

standard = rstandard(m1.lm),

studend = rstudent(m1.lm))

# generate plots

p1 <- ggplot(df2, aes(x = id, y = residuals)) +

theme(panel.grid.major = element_blank(), panel.grid.minor = element_blank()) +

geom_point() +

labs(y = "Residuals", x = "Index")

p2 <- ggplot(df2, aes(x = id, y = standard)) +

theme(panel.grid.major = element_blank(), panel.grid.minor = element_blank()) +

geom_point() +

labs(y = "Standardized Residuals", x = "Index")

p3 <- ggplot(df2, aes(x = id, y = studend)) +

theme(panel.grid.major = element_blank(), panel.grid.minor = element_blank()) +

geom_point() +

labs(y = "Studentized Residuals", x = "Index")

# display plots

ggpubr::ggarrange(p1, p2, p3, ncol = 3, nrow = 1)

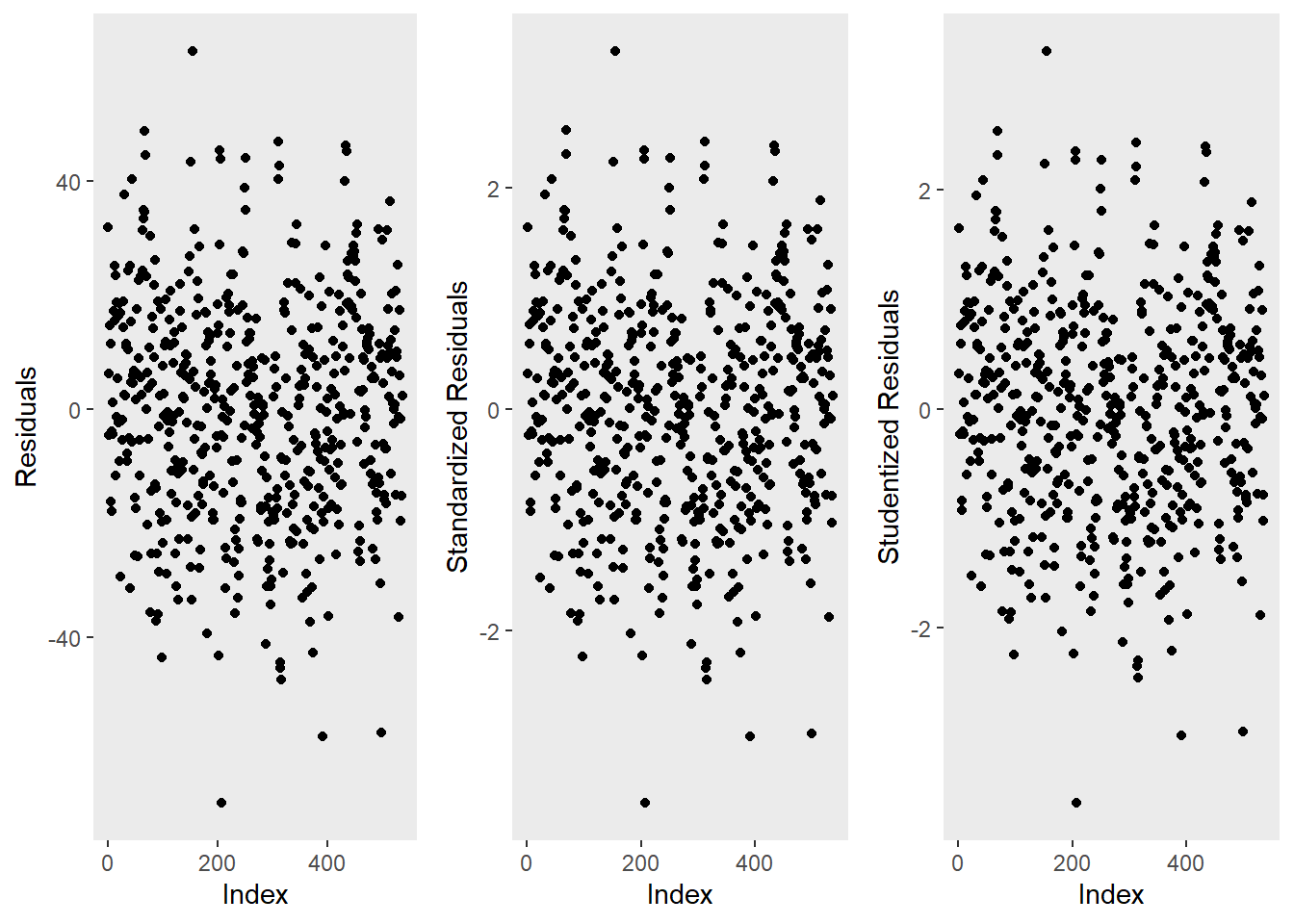

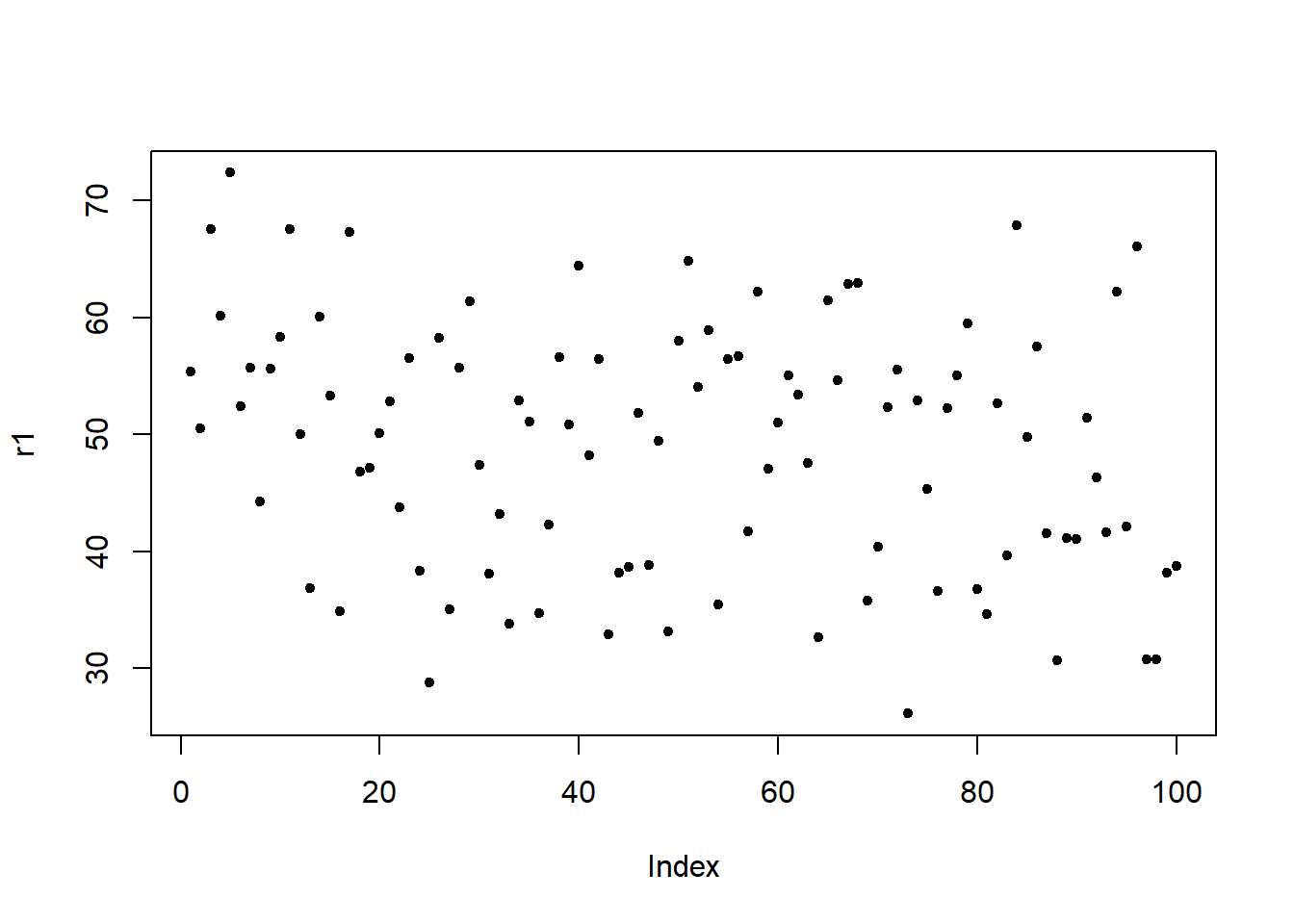

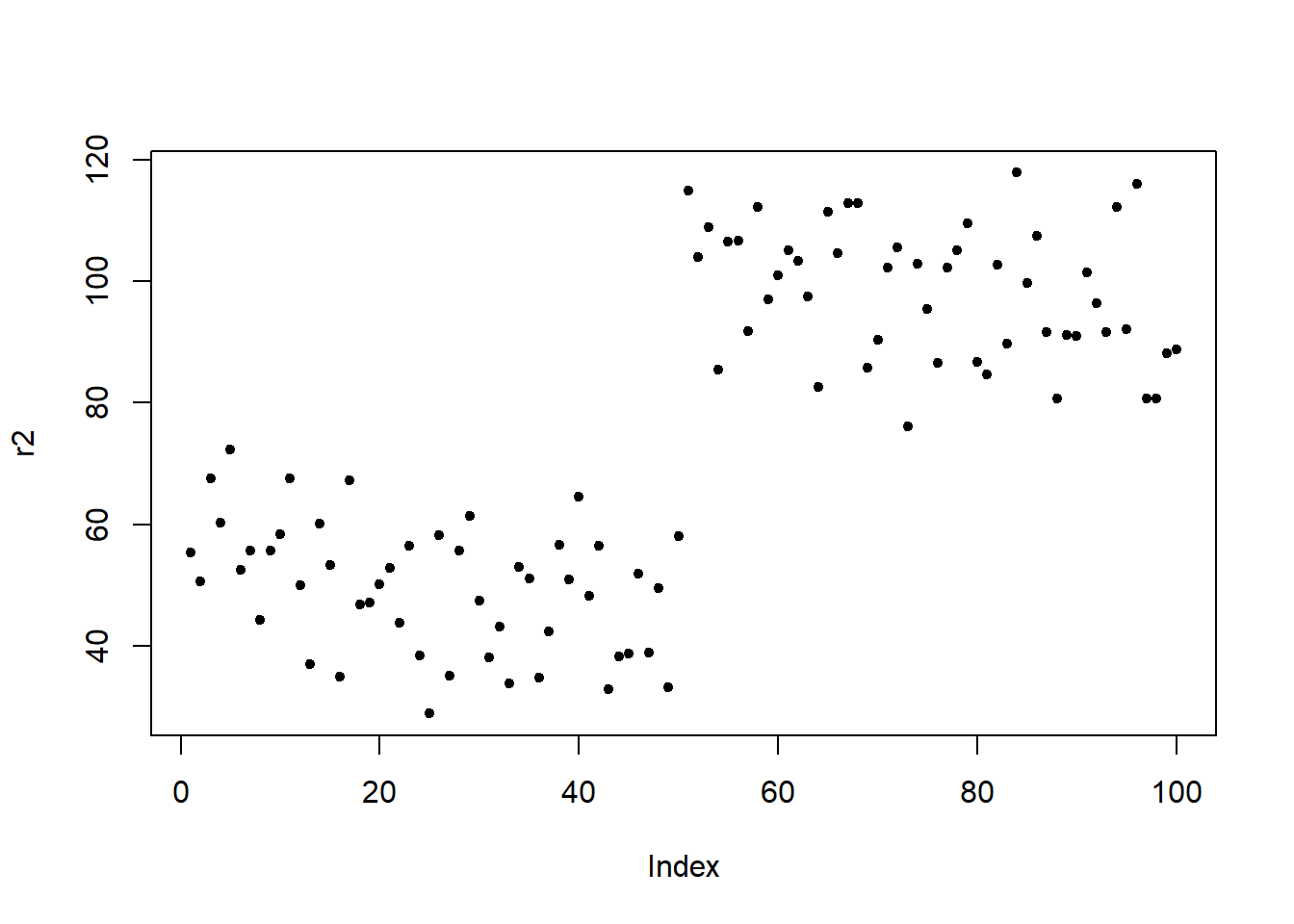

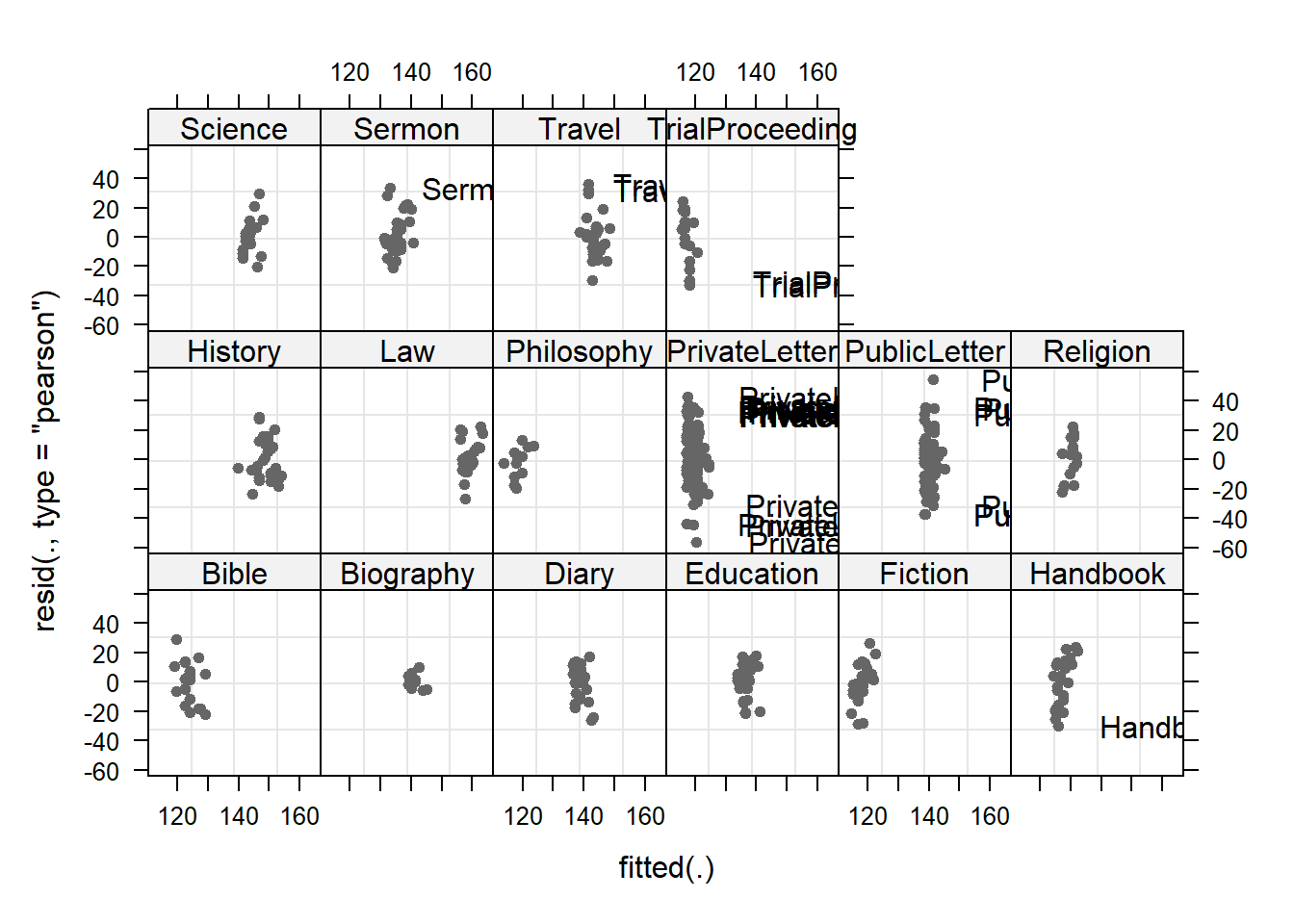

The left graph shows the residuals of the model (i.e., the differences between the observed and the values predicted by the regression model). The problem with this plot is that the residuals are not standardized and so they cannot be compared to the residuals of other models. To remedy this deficiency, residuals are normalized by dividing the residuals by their standard deviation. Then, the normalized residuals can be plotted against the observed values (center panel). In this way, not only are standardized residuals obtained, but the values of the residuals are transformed into z-values, and one can use the z-distribution to find problematic data points. There are three rules of thumb regarding finding problematic data points through standardized residuals (Field, Miles, and Field 2012, 268–69):

Points with values higher than 3.29 should be removed from the data.

If more than 1% of the data points have values higher than 2.58, then the error rate of our model is too high.

If more than 5% of the data points have values greater than 1.96, then the error rate of our model is too high.

The right panel shows the * studentized residuals* (adjusted predicted values: each data point is divided by the standard error of the residuals). In this way, it is possible to use Student’s t-distribution to diagnose our model.

Adjusted predicted values are residuals of a special kind: the model is calculated without a data point and then used to predict this data point. The difference between the observed data point and its predicted value is then called the adjusted predicted value. In summary, studentized residuals are very useful because they allow us to identify influential data points.

The plots show that there are two potentially problematic data points (the top-most and bottom-most point). These two points are clearly different from the other data points and may therefore be outliers. We will test later if these points need to be removed.

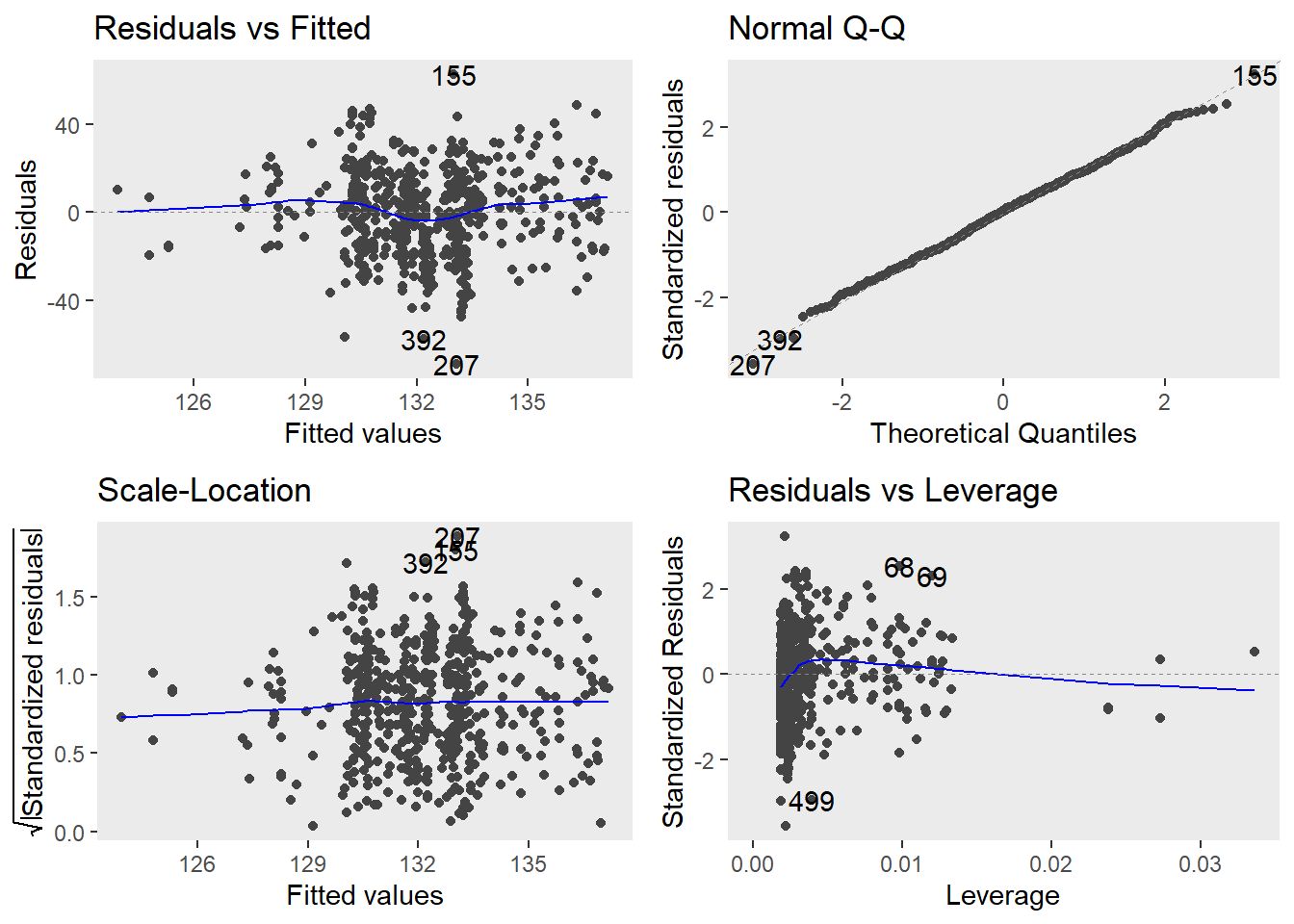

We will now generate more diagnostic plots.

# generate plots

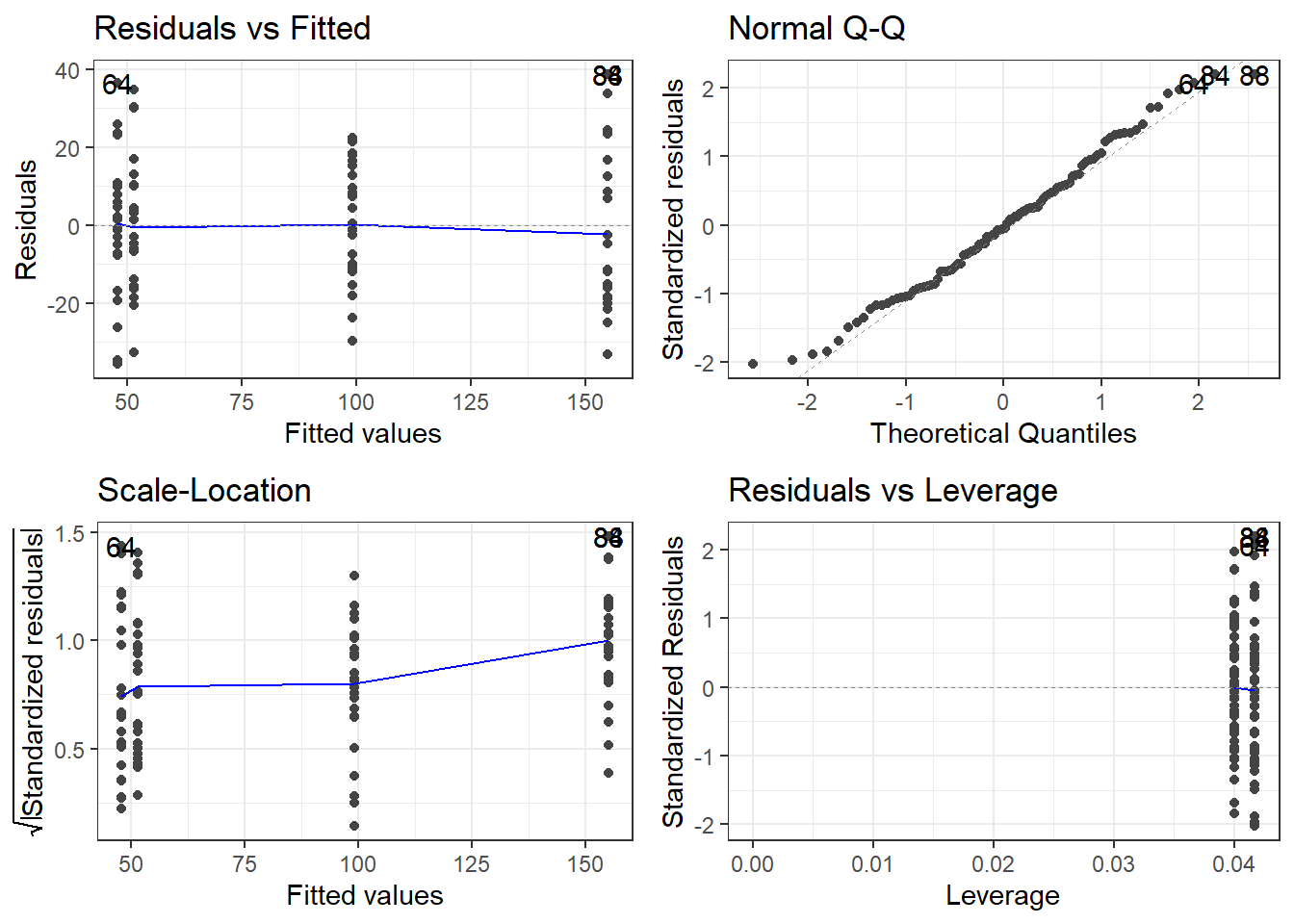

autoplot(m1.lm) +

theme(panel.grid.major = element_blank(), panel.grid.minor = element_blank())

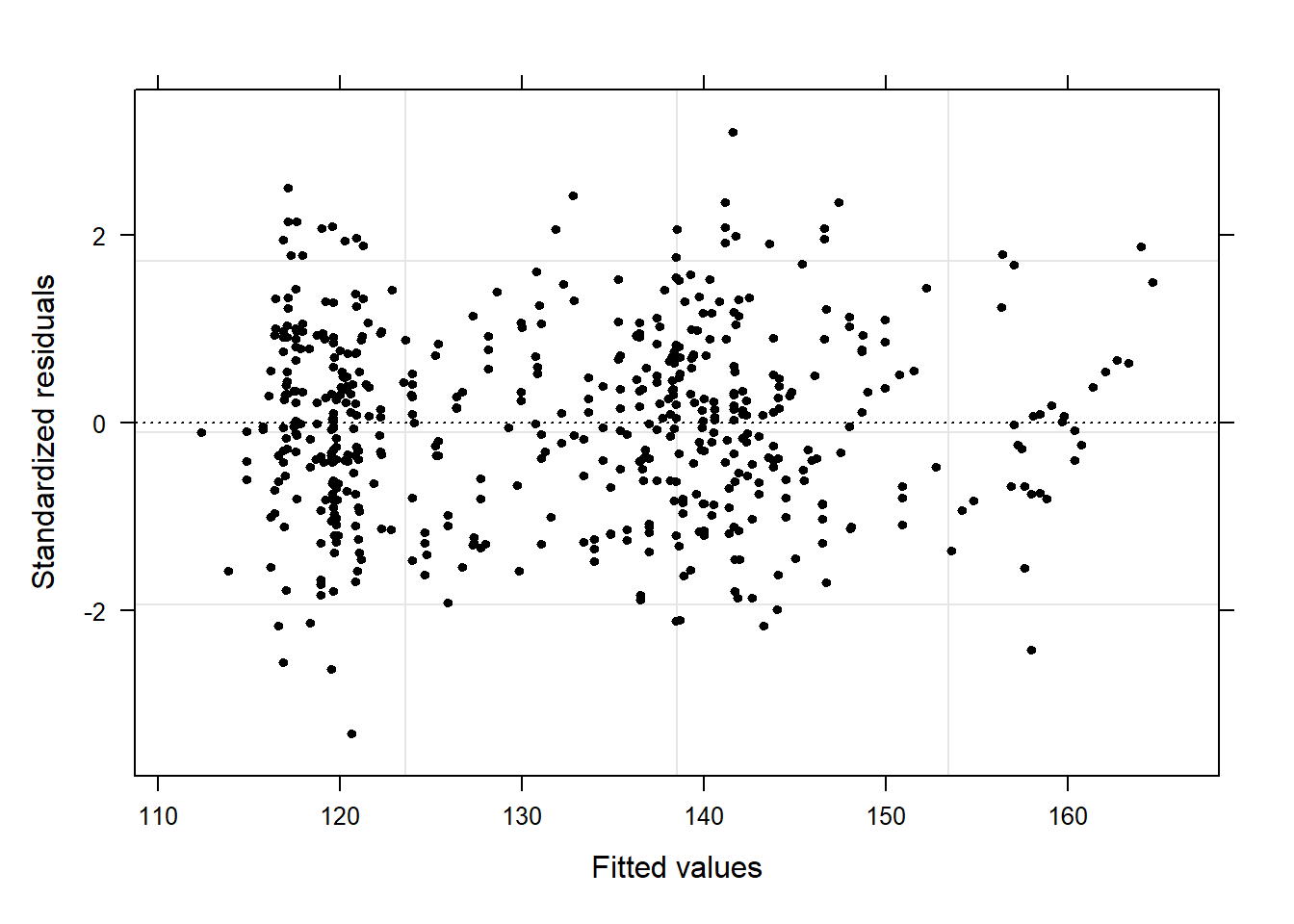

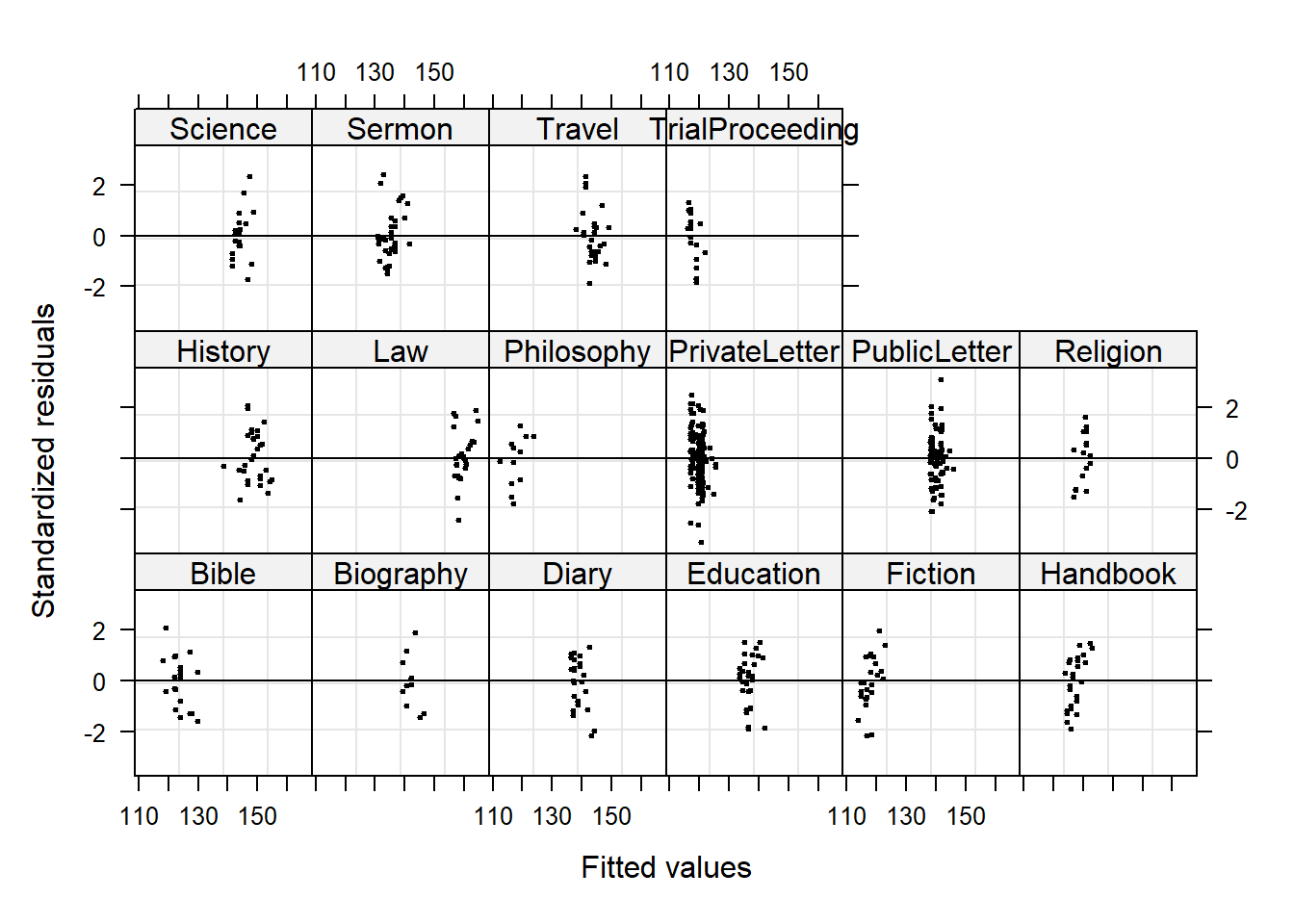

The diagnostic plots are very positive and we will go through why this is so for each panel. The graph in the upper left panel is useful for finding outliers or for determining the correlation between residuals and predicted values: when a trend becomes visible in the line or points (e.g., a rising trend or a zigzag line), then this would indicate that the model would be problematic (in such cases, it can help to remove data points that are too influential (outliers)).

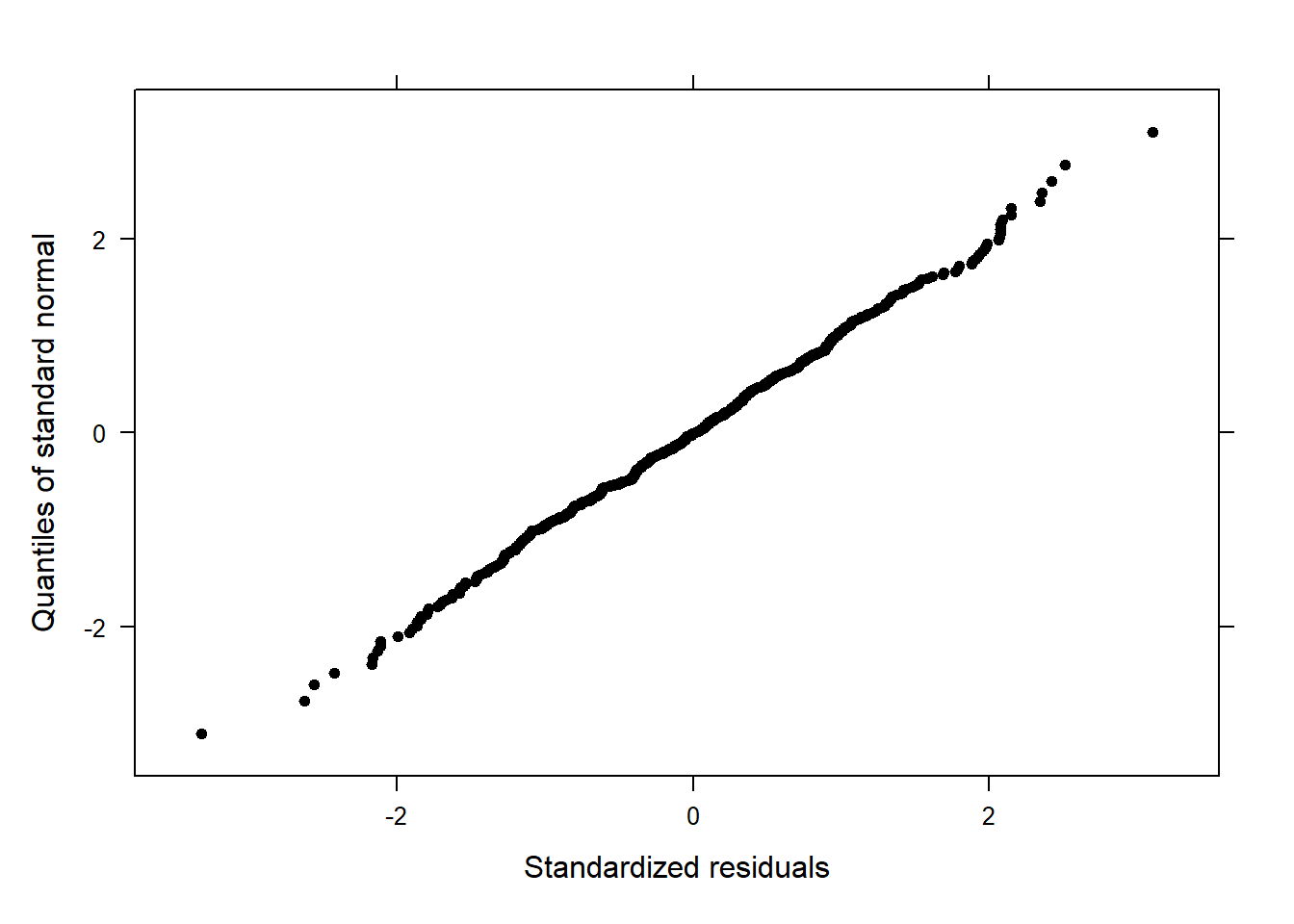

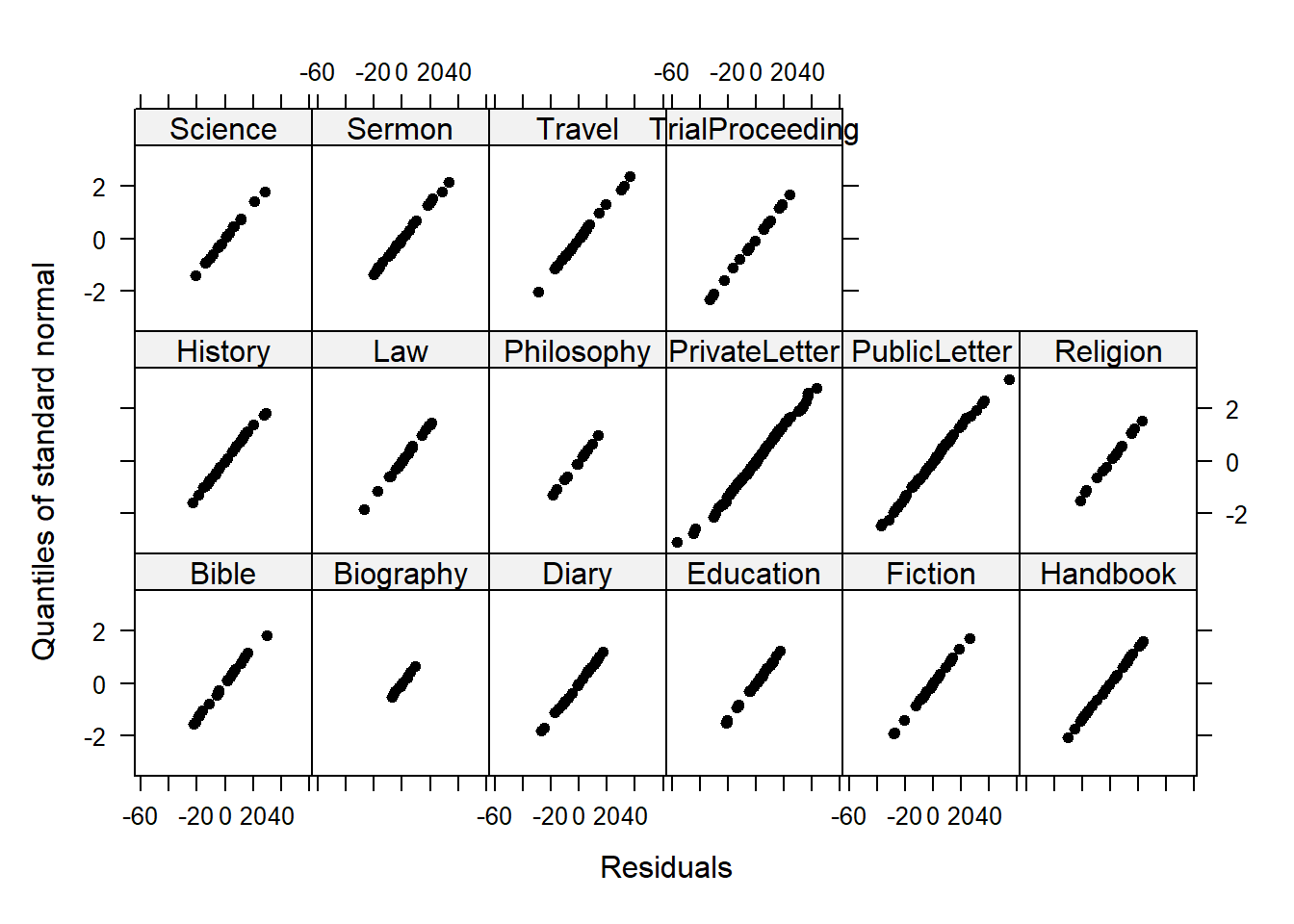

The graphic in the upper right panel indicates whether the residuals are normally distributed (which is desirable) or whether the residuals do not follow a normal distribution. If the points lie on the line, the residuals follow a normal distribution. For example, if the points are not on the line at the top and bottom, it shows that the model does not predict small and large values well and that it therefore does not have a good fit.

The graphic in the lower left panel provides information about homoscedasticity. Homoscedasticity means that the variance of the residuals remains constant and does not correlate with any independent variable. In unproblematic cases, the graphic shows a flat line. If there is a trend in the line, we are dealing with heteroscedasticity, that is, a correlation between independent variables and the residuals, which is very problematic for regressions.

The graph in the lower right panel shows problematic influential data points that disproportionately affect the regression (this would be problematic). If such influential data points are present, they should be either weighted (one could generate a robust rather than a simple linear regression) or they must be removed. The graph displays Cook’s distance, which shows how the regression changes when a model without this data point is calculated. The cook distance thus shows the influence a data point has on the regression as a whole. Data points that have a Cook’s distance value greater than 1 are problematic (Field, Miles, and Field 2012, 269).

The so-called leverage is also a measure that indicates how strongly a data point affects the accuracy of the regression. Leverage values range between 0 (no influence) and 1 (strong influence: suboptimal!). To test whether a specific data point has a high leverage value, we calculate a cut-off point that indicates whether the leverage is too strong or still acceptable. The following two formulas are used for this:

\[\begin{equation} Leverage = \frac{3(k + 1)}{n} | \frac{2(k + 1)}{n} \end{equation}\]

We will look more closely at leverage in the context of multiple linear regression and will therefore end the current analysis by summarizing the results of the regression analysis in a table.

# create summary table

slrsummary(m1.lm) Parameters | Estimate | Pearson's r | Std. Error | t value | Pr(>|t|) | P-value sig. |

|---|---|---|---|---|---|---|

(Intercept) | 132.19 | 0.84 | 157.62 | 0 | p < .001*** | |

Date | 0.02 | 0.1 | 0.01 | 2.38 | 0.0175 | p < .05* |

Model statistics | Value | |||||

Number of cases in model | 537 | |||||

Residual standard error on 535 DF | 19.43 | |||||

Multiple R-squared | 0.0105 | |||||

Adjusted R-squared | 0.0087 | |||||

F-statistic (1, 535) | 5.68 | |||||

Model p-value | 0.0175 |

An alternative but less informative summary table of the results of a

regression analysis can be generated using the tab_model

function from the sjPlot package (Lüdecke 2021) (as is shown below).

# generate summary table

sjPlot::tab_model(m1.lm) | Prepositions | |||

|---|---|---|---|

| Predictors | Estimates | CI | p |

| (Intercept) | 132.19 | 130.54 – 133.84 | <0.001 |

| Date | 0.02 | 0.00 – 0.03 | 0.017 |

| Observations | 537 | ||

| R2 / R2 adjusted | 0.011 / 0.009 | ||

Typically, the results of regression analyses are presented in such tables as they include all important measures of model quality and significance, as well as the magnitude of the effects.

In addition, the results of simple linear regressions should be summarized in writing.

We can use the reports package (Makowski et al. 2021)

to summarize the analysis.

report::report(m1.lm)## We fitted a linear model (estimated using OLS) to predict Prepositions with

## Date (formula: Prepositions ~ Date). The model explains a statistically

## significant and very weak proportion of variance (R2 = 0.01, F(1, 535) = 5.68,

## p = 0.017, adj. R2 = 8.66e-03). The model's intercept, corresponding to Date =

## 0, is at 132.19 (95% CI [130.54, 133.84], t(535) = 157.62, p < .001). Within

## this model:

##

## - The effect of Date is statistically significant and positive (beta = 0.02,

## 95% CI [3.05e-03, 0.03], t(535) = 2.38, p = 0.017; Std. beta = 0.10, 95% CI

## [0.02, 0.19])

##

## Standardized parameters were obtained by fitting the model on a standardized

## version of the dataset. 95% Confidence Intervals (CIs) and p-values were

## computed using a Wald t-distribution approximation.We can use this output to write up a final report:

A simple linear regression has been fitted to the data. A visual assessment of the model diagnostic graphics did not indicate any problematic or disproportionately influential data points (outliers) and performed significantly better compared to an intercept-only base line model but only explained .87 percent of the variance (adjusted R2: .0087, F-statistic (1, 535): 5,68, p-value: 0.0175*). The final minimal adequate linear regression model is based on 537 data points and confirms a significant and positive correlation between the year in which the text was written and the relative frequency of prepositions (coefficient estimate: .02 (standardized : 0.10, 95% CI [0.02, 0.19]), SE: 0.01, t-value535: 2.38, p-value: .0175*). Standardized parameters were obtained by fitting the model on a standardized version of the dataset. 95% Confidence Intervals (CIs) and p-values were computed using the Wald approximation.

Example 2: Teaching Styles

In the previous example, we dealt with two numeric variables, while the following example deals with a categorical independent variable and a numeric dependent variable. The ability for regressions to handle very different types of variables makes regressions a widely used and robust method of analysis.

In this example, we are dealing with two groups of students that have been randomly assigned to be exposed to different teaching methods. Both groups undergo a language learning test after the lesson with a maximum score of 20 points.

The question that we will try to answer is whether the students in group A have performed significantly better than those in group B which would indicate that the teaching method to which group A was exposed works better than the teaching method to which group B was exposed.

Let’s move on to implementing the regression in R. In a first step, we load the data set and inspect its structure.

# load data

slrdata2 <- base::readRDS(url("https://slcladal.github.io/data/sgd.rda", "rb"))Group | Score |

|---|---|

A | 15 |

A | 12 |

A | 11 |

A | 18 |

A | 15 |

A | 15 |

A | 9 |

A | 19 |

A | 14 |

A | 13 |

A | 11 |

A | 12 |

A | 18 |

A | 15 |

A | 16 |

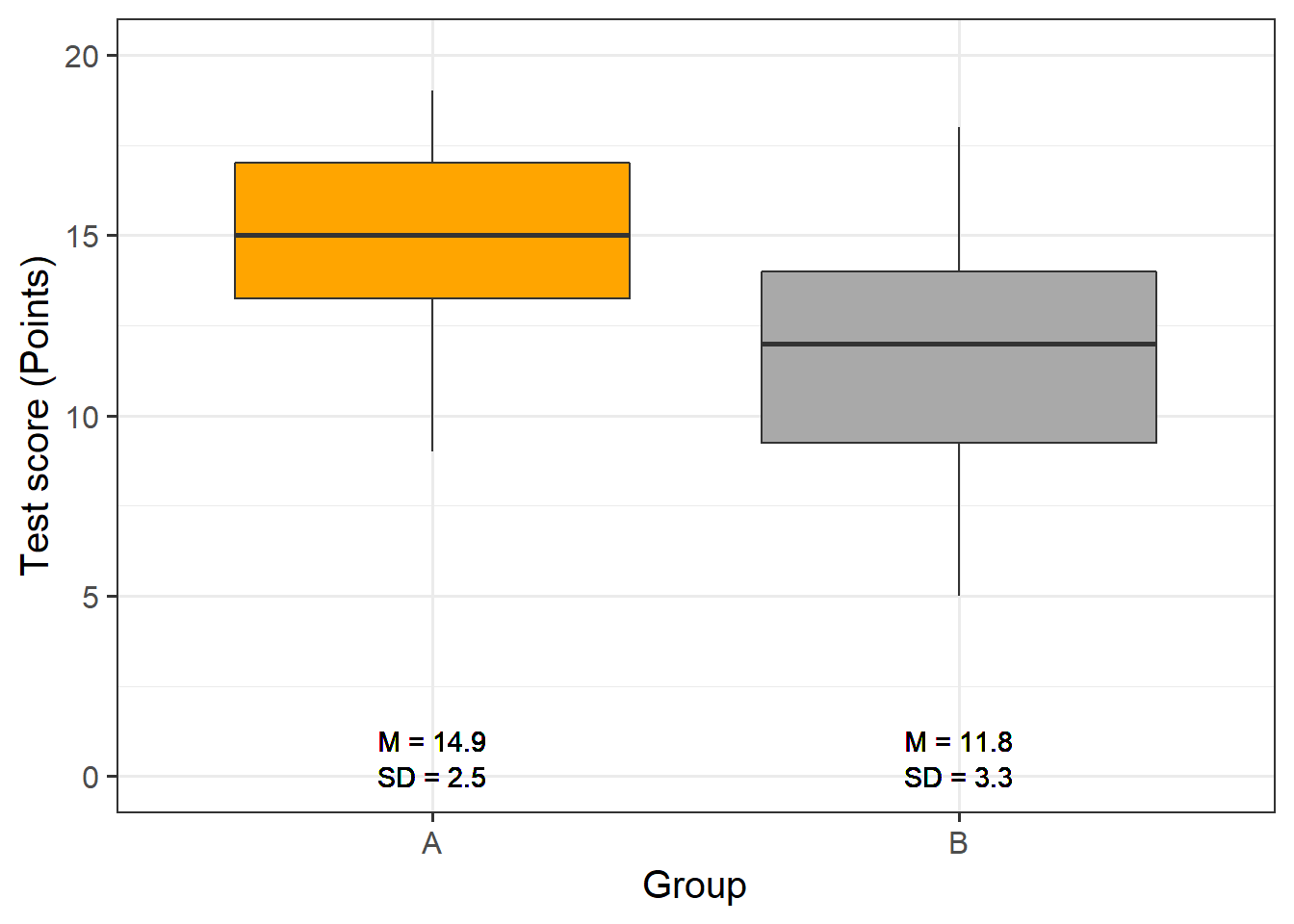

Now, we graphically display the data. In this case, a boxplot represents a good way to visualize the data.

# extract means

slrdata2 %>%

dplyr::group_by(Group) %>%

dplyr::mutate(Mean = round(mean(Score), 1), SD = round(sd(Score), 1)) %>%

ggplot(aes(Group, Score)) +

geom_boxplot(fill=c("orange", "darkgray")) +

geom_text(aes(label = paste("M = ", Mean, sep = ""), y = 1)) +

geom_text(aes(label = paste("SD = ", SD, sep = ""), y = 0)) +

theme_bw(base_size = 15) +

labs(x = "Group") +

labs(y = "Test score (Points)", cex = .75) +

coord_cartesian(ylim = c(0, 20)) +

guides(fill = FALSE)

The data indicate that group A did significantly better than group B. We will test this impression by generating the regression model and creating the model and extracting the model summary.

# generate regression model

m2.lm <- lm(Score ~ Group, data = slrdata2)

# inspect results

summary(m2.lm) ##

## Call:

## lm(formula = Score ~ Group, data = slrdata2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -6.76666667 -1.93333333 0.15000000 2.06666667 6.23333333

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 14.933333333 0.534571121 27.93517 < 0.000000000000000222 ***

## GroupB -3.166666667 0.755997730 -4.18873 0.000096692 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.92796662 on 58 degrees of freedom

## Multiple R-squared: 0.232249929, Adjusted R-squared: 0.219012859

## F-statistic: 17.545418 on 1 and 58 DF, p-value: 0.0000966923559The model summary reports that Group A performed significantly better compared with Group B. This is shown by the fact that the p-value (the value in the column with the header (Pr(>|t|)) is smaller than .001 as indicated by the three * after the p-values). Also, the negative Estimate for Group B indicates that Group B has lower scores than Group A. We will now generate the diagnostic graphics.3

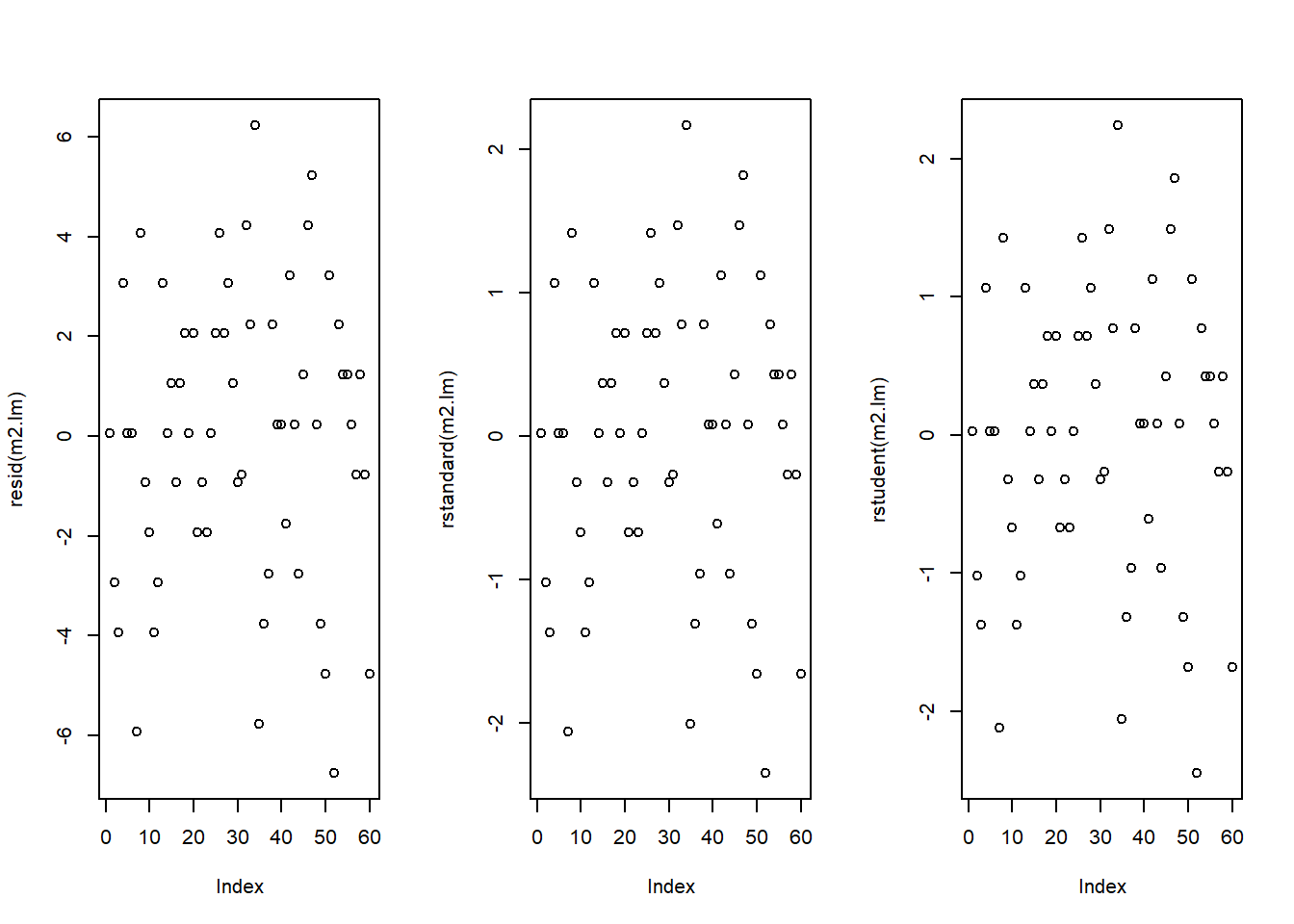

par(mfrow = c(1, 3)) # plot window: 1 plot/row, 3 plots/column

plot(resid(m2.lm)) # generate diagnostic plot

plot(rstandard(m2.lm)) # generate diagnostic plot

plot(rstudent(m2.lm)); par(mfrow = c(1, 1)) # restore normal plot window

The graphics do not indicate outliers or other issues, so we can continue with more diagnostic graphics.

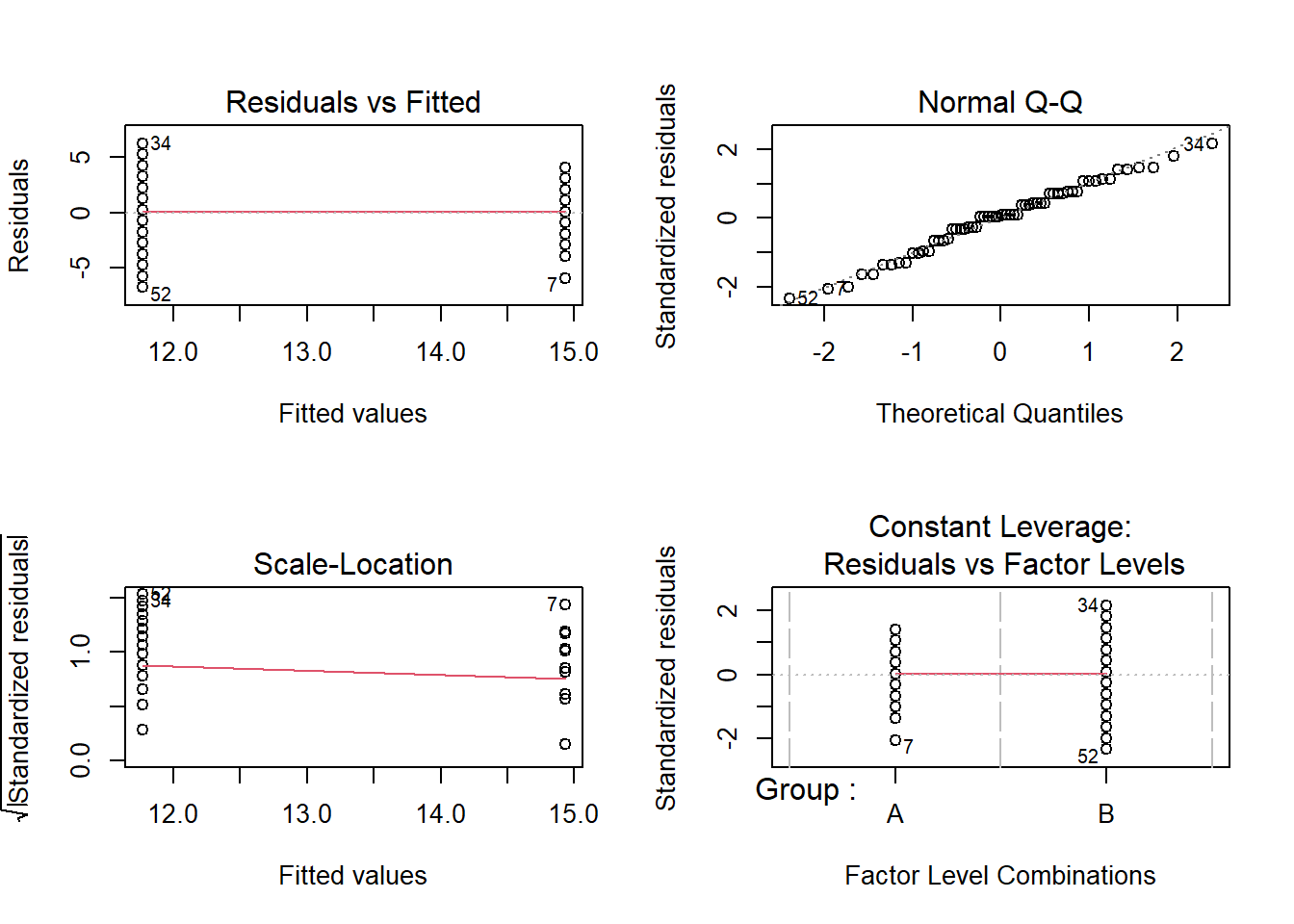

par(mfrow = c(2, 2)) # generate a plot window with 2x2 panels

plot(m2.lm); par(mfrow = c(1, 1)) # restore normal plot window

These graphics also show no problems. In this case, the data can be summarized in the next step.

# tabulate results

slrsummary(m2.lm)Parameters | Estimate | Pearson's r | Std. Error | t value | Pr(>|t|) | P-value sig. |

|---|---|---|---|---|---|---|

(Intercept) | 14.93 | 0.53 | 27.94 | 0 | p < .001*** | |

GroupB | -3.17 | 0.48 | 0.76 | -4.19 | 0.0001 | p < .001*** |

Model statistics | Value | |||||

Number of cases in model | 60 | |||||

Residual standard error on 58 DF | 2.93 | |||||

Multiple R-squared | 0.2322 | |||||

Adjusted R-squared | 0.219 | |||||

F-statistic (1, 58) | 17.55 | |||||

Model p-value | 0.0001 |

We can use the reports package (Makowski et al. 2021)

to summarize the analysis.

report::report(m2.lm)## We fitted a linear model (estimated using OLS) to predict Score with Group

## (formula: Score ~ Group). The model explains a statistically significant and

## moderate proportion of variance (R2 = 0.23, F(1, 58) = 17.55, p < .001, adj. R2

## = 0.22). The model's intercept, corresponding to Group = A, is at 14.93 (95% CI

## [13.86, 16.00], t(58) = 27.94, p < .001). Within this model:

##

## - The effect of Group [B] is statistically significant and negative (beta =

## -3.17, 95% CI [-4.68, -1.65], t(58) = -4.19, p < .001; Std. beta = -0.96, 95%

## CI [-1.41, -0.50])

##

## Standardized parameters were obtained by fitting the model on a standardized

## version of the dataset. 95% Confidence Intervals (CIs) and p-values were

## computed using a Wald t-distribution approximation.We can use this output to write up a final report:

A simple linear regression was fitted to the data. A visual assessment of the model diagnostics did not indicate any problematic or disproportionately influential data points (outliers). The final linear regression model is based on 60 data points, performed significantly better than an intercept-only base line model (F (1, 58): 17.55, p-value <. 001\(***\)), and reported that the model explained 21.9 percent of variance which confirmed a good model fit. According to this final model, group A scored significantly better on the language learning test than group B (coefficient: -3.17, 95% CI [-4.68, -1.65], Std. : -0.96, 95% CI [-1.41, -0.50], SE: 0.48, t-value58: -4.19, p-value <. 001\(***\)). Standardized parameters were obtained by fitting the model on a standardized version of the dataset. 95% Confidence Intervals (CIs) and p-values were computed using the Wald approximation.

Multiple Linear Regression

In contrast to simple linear regression, which estimates the effect of a single predictor, multiple linear regression estimates the effect of various predictor (see the equation below). A multiple linear regression can thus test the effects of various predictors simultaneously.

\[\begin{equation} f_{(x)} = \alpha + \beta_{1}x_{i} + \beta_{2}x_{i+1} + \dots + \beta_{n}x_{i+n} + \epsilon \end{equation}\]

There exists a wealth of literature focusing on multiple linear regressions and the concepts it is based on. For instance, there are Achen (1982), Bortz (2006), Crawley (2005), Faraway (2002), Field, Miles, and Field (2012), Gries (2021), Levshina (2015), Winter (2019) and Wilcox (2009) to name just a few. Introductions to regression modeling in R are Baayen (2008), Crawley (2012), Gries (2021), or Levshina (2015).

The model diagnostics we are dealing with here are partly identical to the diagnostic methods discussed in the section on simple linear regression. Because of this overlap, diagnostics will only be described in more detail if they have not been described in the section on simple linear regression.

EXCURSION

`

A note on sample size and power

A brief note on minimum necessary sample or data set size appears necessary here. Although there appears to be a general assumption that 25 data points per group are sufficient, this is not necessarily correct (it is merely a general rule of thumb that is actually often incorrect). Such rules of thumb are inadequate because the required sample size depends on the number of variables in a given model, the size of the effect and the variance of the effect - in other words, the minimum necessary sample size relates to statistical power (see here for a tutorial on power). If a model contains many variables, then this requires a larger sample size than a model which only uses very few predictors.

Also, to detect an effect with a very minor effect size, one needs a substantially larger sample compared to cases where the effect is very strong. In fact, when dealing with small effects, model require a minimum of 600 cases to reliably detect these effects. Finally, effects that are very robust and do not vary much require a much smaller sample size compared with effects that are spurious and vary substantially. Since the sample size depends on the effect size and variance as well as the number of variables, there is no final one-size-fits-all answer to what the best sample size is.

Another, slightly better but still incorrect, rule of thumb is that the more data, the better. This is not correct because models based on too many cases are prone for overfitting and thus report correlations as being significant that are not. However, given that there are procedures that can correct for overfitting, larger data sets are still preferable to data sets that are simply too small to warrant reliable results. In conclusion, it remains true that the sample size depends on the effect under investigation.`

Despite there being no ultimate rule of thumb, Field, Miles, and Field (2012, 273–75), based on Green (1991), provide data-driven suggestions for the minimal size of data required for regression models that aim to find medium sized effects (k = number of predictors; categorical variables with more than two levels should be transformed into dummy variables):

If one is merely interested in the overall model fit (something I have not encountered), then the sample size should be at least 50 + k (k = number of predictors in model).

If one is only interested in the effect of specific variables, then the sample size should be at least 104 + k (k = number of predictors in model).

If one is only interested in both model fit and the effect of specific variables, then the sample size should be at least the higher value of 50 + k or 104 + k (k = number of predictors in model).

You will see in the R code below that there is already a function that tests whether the sample size is sufficient.

Example: Gifts and Availability

The example we will go through here is taken from Field, Miles, and Field (2012). In this example, the research question is if the money that men spend on presents for women depends on the women’s attractiveness and their relationship status. To answer this research question, we will implement a multiple linear regression and start by loading the data and inspect its structure and properties.

# load data

mlrdata <- base::readRDS(url("https://slcladal.github.io/data/mld.rda", "rb"))status | attraction | money |

|---|---|---|

Relationship | NotInterested | 86.33 |

Relationship | NotInterested | 45.58 |

Relationship | NotInterested | 68.43 |

Relationship | NotInterested | 52.93 |

Relationship | NotInterested | 61.86 |

Relationship | NotInterested | 48.47 |

Relationship | NotInterested | 32.79 |

Relationship | NotInterested | 35.91 |

Relationship | NotInterested | 30.98 |

Relationship | NotInterested | 44.82 |

Relationship | NotInterested | 35.05 |

Relationship | NotInterested | 64.49 |

Relationship | NotInterested | 54.50 |

Relationship | NotInterested | 61.48 |

Relationship | NotInterested | 55.51 |

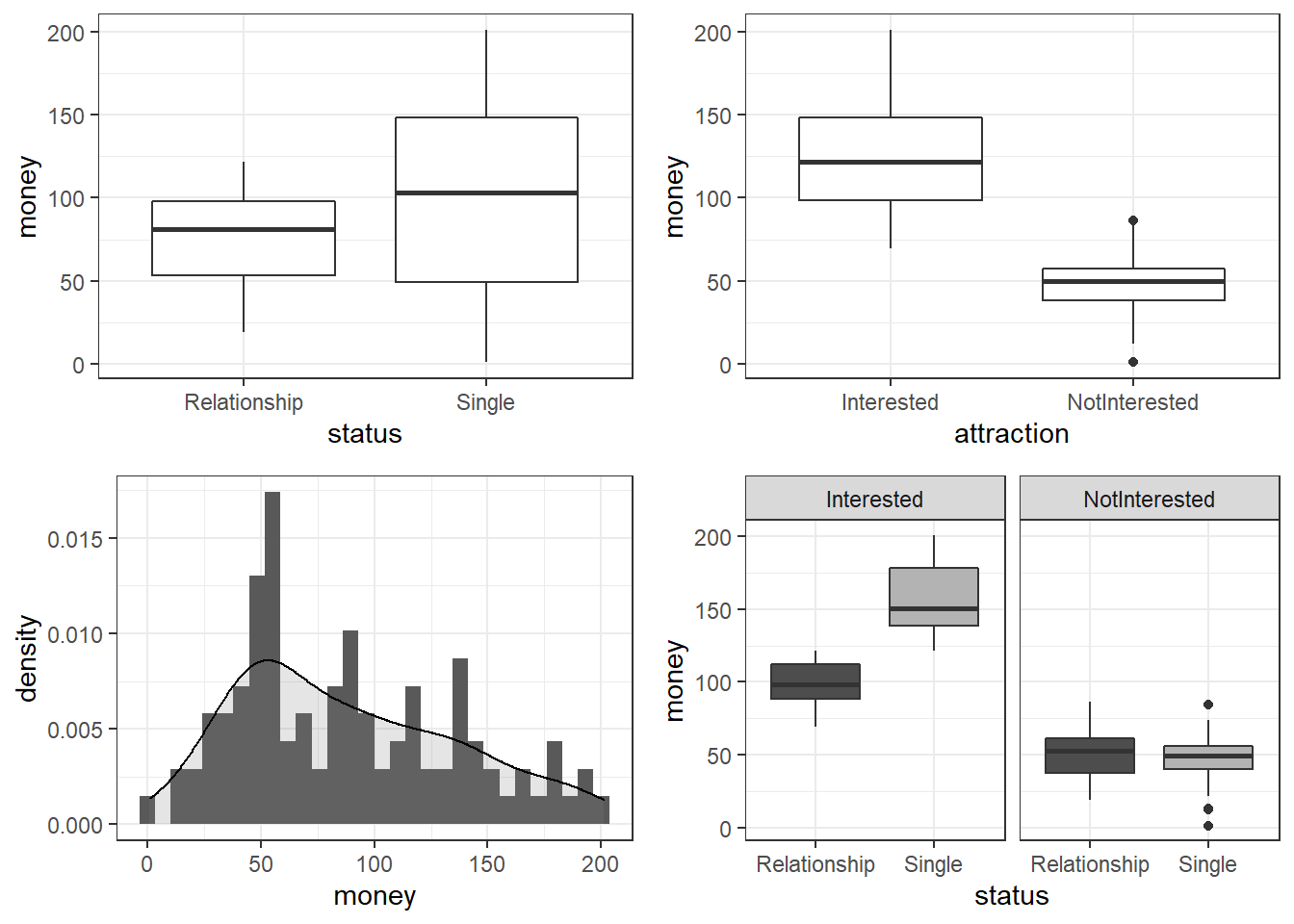

The data set consist of three variables stored in three columns. The first column contains the relationship status of the present giver (in this study this were men), the second whether the man is interested in the woman (the present receiver in this study), and the third column represents the money spend on the present. The data set represents 100 cases and the mean amount of money spend on a present is 88.38 dollars. In a next step, we visualize the data to get a more detailed impression of the relationships between variables.

# create plots

p1 <- ggplot(mlrdata, aes(status, money)) +

geom_boxplot() +

theme_bw()

# plot 2

p2 <- ggplot(mlrdata, aes(attraction, money)) +

geom_boxplot() +

theme_bw()

# plot 3

p3 <- ggplot(mlrdata, aes(x = money)) +

geom_histogram(aes(y=..density..)) +

theme_bw() +

geom_density(alpha=.2, fill = "gray50")

# plot 4

p4 <- ggplot(mlrdata, aes(status, money)) +

geom_boxplot(aes(fill = factor(status))) +

scale_fill_manual(values = c("grey30", "grey70")) +

facet_wrap(~ attraction) +

guides(fill = "none") +

theme_bw()

# show plots

gridExtra::grid.arrange(grobs = list(p1, p2, p3, p4), widths = c(1, 1), layout_matrix = rbind(c(1, 2), c(3, 4)))

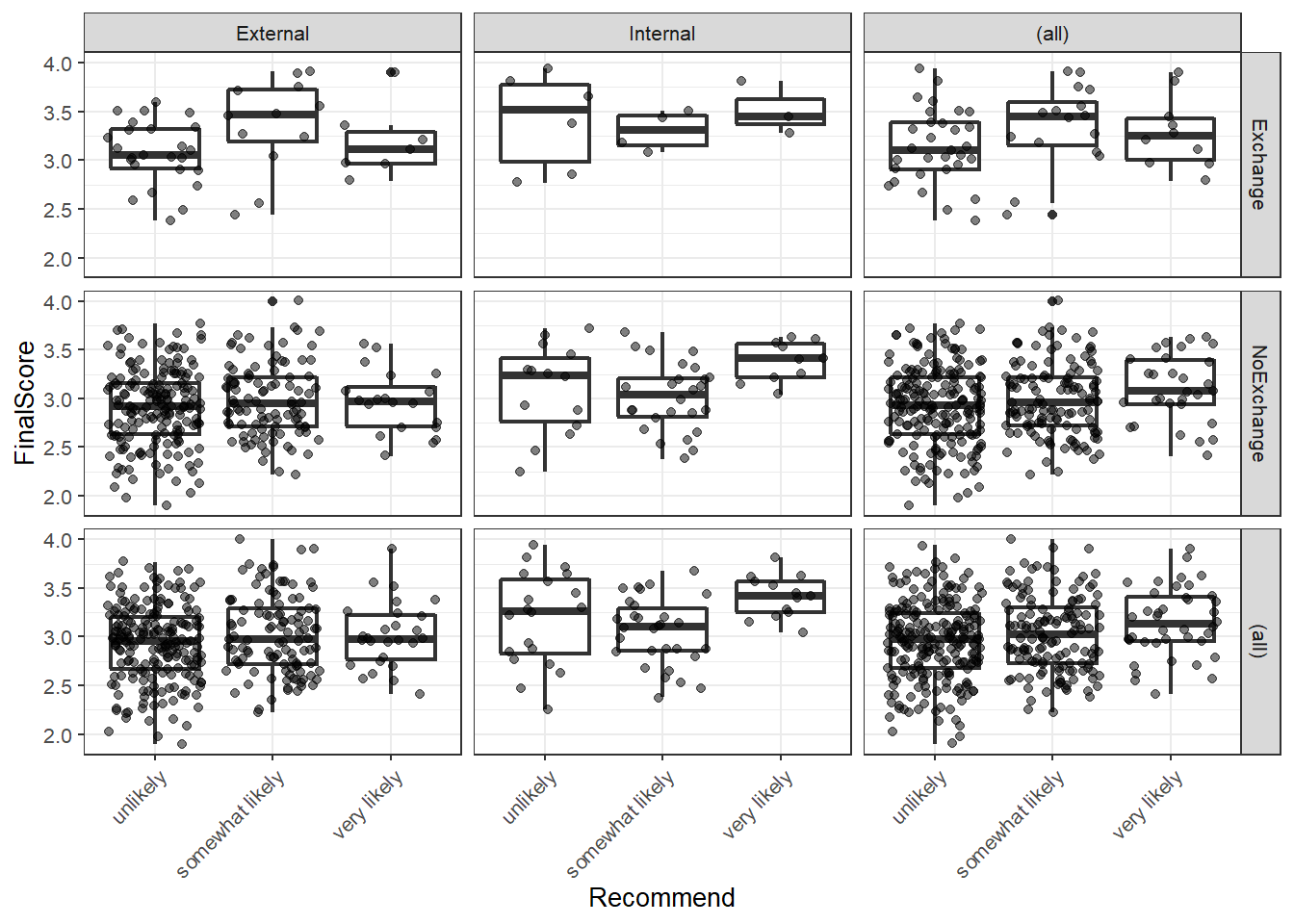

The upper left figure consists of a boxplot which shows how much money was spent by relationship status. The figure suggests that men spend more on women if they are not in a relationship. The next figure shows the relationship between the money spend on presents and whether or not the men were interested in the women.

The boxplot in the upper right panel suggests that men spend substantially more on women if the men are interested in them. The next figure depicts the distribution of the amounts of money spend on the presents for the women. In addition, the figure indicates the existence of two outliers (dots in the boxplot)

The histogram in the lower left panel shows that, although the mean amount of money spent on presents is 88.38 dollars, the distribution peaks around 50 dollars indicating that on average, men spend about 50 dollars on presents. Finally, we will plot the amount of money spend on presents against relationship status by attraction in order to check whether the money spent on presents is affected by an interaction between attraction and relationship status.

The boxplot in the lower right panel confirms the existence of an interaction (a non-additive term) as men only spend more money on women if the men single and they are interested in the women. If men are not interested in the women, then the relationship has no effect as they spend an equal amount of money on the women regardless of whether they are in a relationship or not.

We will now start to implement the regression model. In a first step,

we create two saturated models that contain all possible predictors

(main effects and interactions). The two models are identical but one is

generated with the lm and the other with the

glm function as these functions offer different model

parameters in their output.

m1.mlr = lm( # generate lm regression object

money ~ 1 + attraction*status, # def. regression formula (1 = intercept)

data = mlrdata) # def. data

m1.glm = glm( # generate glm regression object

money ~ 1 + attraction*status, # def. regression formula (1 = intercept)

family = gaussian, # def. linkage function

data = mlrdata) # def. dataAfter generating the saturated models we can now start with the model fitting. Model fitting refers to a process that aims at find the model that explains a maximum of variance with a minimum of predictors (see Field, Miles, and Field 2012, 318). Model fitting is therefore based on the principle of parsimony which is related to Occam’s razor according to which explanations that require fewer assumptions are more likely to be true.

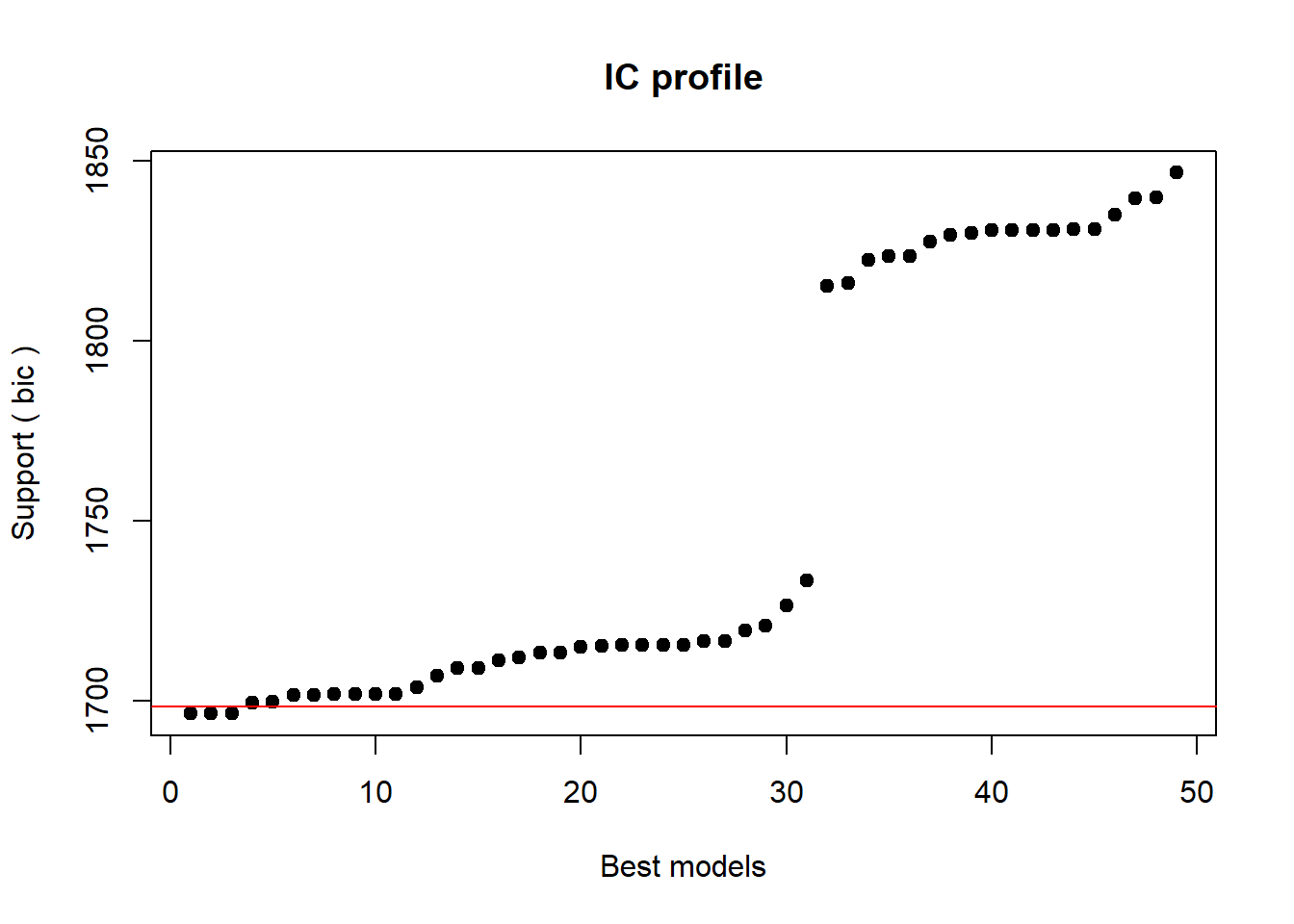

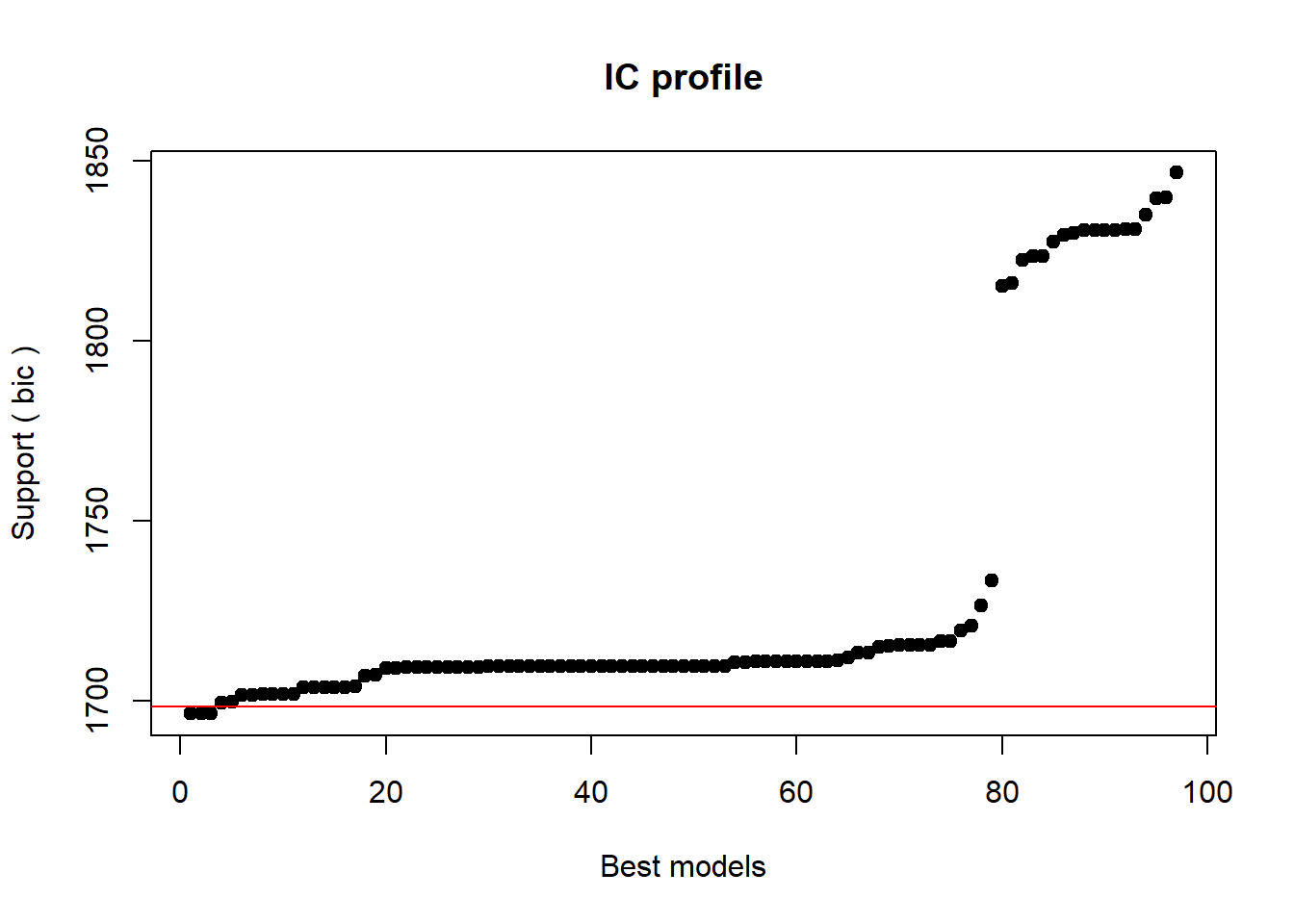

Automatic Model Fitting and Why You Should Not Use It

In this section, we will use a step-wise step-down procedure that uses decreases in AIC (Akaike Information Criterion) as the criterion to minimize the model in a step-wise manner. This procedure aims at finding the model with the lowest AIC values by evaluating - step-by-step - whether the removal of a predictor (term) leads to a lower AIC value.

We use this method here just so that you know it exists and how to implement it but you should rather avoid using automated model fitting. The reason for avoiding automated model fitting is that the algorithm only checks if the AIC has decreased but not if the model is stable or reliable. Thus, automated model fitting has the problem that you can never be sure that the way that lead you to the final model is reliable and that all models were indeed stable. Imagine you want to climb down from a roof top and you have a ladder. The problem is that you do not know if and how many steps are broken. This is similar to using automated model fitting. In other sections, we will explore better methods to fit models (manual step-wise step-up and step-down procedures, for example).

The AIC is calculated using the equation below. The lower the AIC value, the better the balance between explained variance and the number of predictors. AIC values can and should only be compared for models that are fit on the same data set with the same (number of) cases (LL stands for logged likelihood or LogLikelihood and k represents the number of predictors in the model (including the intercept); the LL represents a measure of how good the model fits the data).

\[\begin{equation} Akaike Information Criterion (AIC) = -2LL + 2k \end{equation}\]

An alternative to the AIC is the BIC (Bayesian Information Criterion). Both AIC and BIC penalize models for including variables in a model. The penalty of the BIC is bigger than the penalty of the AIC and it includes the number of cases in the model (LL stands for logged likelihood or LogLikelihood, k represents the number of predictors in the model (including the intercept), and N represents the number of cases in the model).

\[\begin{equation} Bayesian Information Criterion (BIC) = -2LL + 2k * log(N) \end{equation}\]

Interactions are evaluated first and only if all insignificant interactions have been removed would the procedure start removing insignificant main effects (that are not part of significant interactions). Other model fitting procedures (forced entry, step-wise step up, hierarchical) are discussed during the implementation of other regression models. We cannot discuss all procedures here as model fitting is rather complex and a discussion of even the most common procedures would to lengthy and time consuming at this point. It is important to note though that there is not perfect model fitting procedure and automated approaches should be handled with care as they are likely to ignore violations of model parameters that can be detected during manual - but time consuming - model fitting procedures. As a general rule of thumb, it is advisable to fit models as carefully and deliberately as possible. We will now begin to fit the model.

# automated AIC based model fitting

step(m1.mlr, direction = "both")## Start: AIC=592.52

## money ~ 1 + attraction * status

##

## Df Sum of Sq RSS AIC

## <none> 34557.56428 592.5211556

## - attraction:status 1 24947.25481 59504.81909 644.8642395##

## Call:

## lm(formula = money ~ 1 + attraction * status, data = mlrdata)

##

## Coefficients:

## (Intercept) attractionNotInterested

## 99.1548 -47.6628

## statusSingle attractionNotInterested:statusSingle

## 57.6928 -63.1788The automated model fitting procedure informs us that removing predictors has not caused a decrease in the AIC. The saturated model is thus also the final minimal adequate model. We will now inspect the final minimal model and go over the model report.

m2.mlr = lm( # generate lm regression object

money ~ (status + attraction)^2, # def. regression formula

data = mlrdata) # def. data

m2.glm = glm( # generate glm regression object

money ~ (status + attraction)^2, # def. regression formula

family = gaussian, # def. linkage function

data = mlrdata) # def. data

# inspect final minimal model

summary(m2.mlr)##

## Call:

## lm(formula = money ~ (status + attraction)^2, data = mlrdata)

##

## Residuals:

## Min 1Q Median 3Q Max

## -45.0760 -14.2580 0.4596 11.9315 44.1424

##

## Coefficients:

## Estimate Std. Error t value

## (Intercept) 99.15480000 3.79459947 26.13050

## statusSingle 57.69280000 5.36637403 10.75080

## attractionNotInterested -47.66280000 5.36637403 -8.88175

## statusSingle:attractionNotInterested -63.17880000 7.58919893 -8.32483

## Pr(>|t|)

## (Intercept) < 0.000000000000000222 ***

## statusSingle < 0.000000000000000222 ***

## attractionNotInterested 0.00000000000003751 ***

## statusSingle:attractionNotInterested 0.00000000000058085 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 18.9729973 on 96 degrees of freedom

## Multiple R-squared: 0.852041334, Adjusted R-squared: 0.847417626

## F-statistic: 184.276619 on 3 and 96 DF, p-value: < 0.0000000000000002220446The first element of the report is called Call and it reports the regression formula of the model. Then, the report provides the residual distribution (the range, median and quartiles of the residuals) which allows drawing inferences about the distribution of differences between observed and expected values. If the residuals are distributed non-normally, then this is a strong indicator that the model is unstable and unreliable because mathematical assumptions on which the model is based are violated.

Next, the model summary reports the most important part: a table with model statistics of the fixed-effects structure of the model. The table contains the estimates (coefficients of the predictors), standard errors, t-values, and the p-values which show whether a predictor significantly correlates with the dependent variable that the model investigates.

All main effects (status and attraction) as well as the interaction between status and attraction is reported as being significantly correlated with the dependent variable (money). An interaction occurs if a correlation between the dependent variable and a predictor is affected by another predictor.

The top most term is called intercept and has a value of 99.15 which represents the base estimate to which all other estimates refer. To exemplify what this means, let us consider what the model would predict that a man would spend on a present if he interested in the woman but he is also in a relationship. The amount he would spend (based on the model would be 99.15 dollars (which is the intercept). This means that the intercept represents the predicted value if all predictors take the base or reference level. And since being in relationship but being interested are the case, and because the interaction does not apply, the predicted value in our example is exactly the intercept (see below).

#intercept Single NotInterested Single:NotInterested

99.15 + 57.69 + 0 + 0 # 156.8 single + interested## [1] 156.8499.15 + 57.69 - 47.66 - 63.18 # 46.00 single + not interested## [1] 4699.15 - 0 + 0 - 0 # 99.15 relationship + interested## [1] 99.1599.15 - 0 - 47.66 - 0 # 51.49 relationship + not interested## [1] 51.49Now, let us consider what a man would spend if he is in a relationship and he is not attracted to the women. In that case, the model predicts that the man would spend only 51.49 dollars on a present: the intercept (99.15) minus 47.66 because the man is not interested (and no additional subtraction because the interaction does not apply).

We can derive the same results easier using the predict

function.

# make prediction based on the model for original data

prediction <- predict(m2.mlr, newdata = mlrdata)

# inspect predictions

table(round(prediction,2))##

## 46.01 51.49 99.15 156.85

## 25 25 25 25Below the table of coefficients, the regression summary reports model statistics that provide information about how well the model performs. The difference between the values and the values in the coefficients table is that the model statistics refer to the model as a whole rather than focusing on individual predictors.

The multiple R2-value is a measure of how much variance the model explains. A multiple R2-value of 0 would inform us that the model does not explain any variance while a value of .852 mean that the model explains 85.2 percent of the variance. A value of 1 would inform us that the model explains 100 percent of the variance and that the predictions of the model match the observed values perfectly. Multiplying the multiple R2-value thus provides the percentage of explained variance. Models that have a multiple R2-value equal or higher than .05 are deemed substantially significant (see Szmrecsanyi 2006, 55). It has been claimed that models should explain a minimum of 5 percent of variance but this is problematic as it is not uncommon for models to have very low explanatory power while still performing significantly and systematically better than chance. In addition, the total amount of variance is negligible in cases where one is interested in very weak but significant effects. It is much more important for model to perform significantly better than minimal base-line models because if this is not the case, then the model does not have any predictive and therefore no explanatory power.

The adjusted R2-value considers the amount of explained variance in light of the number of predictors in the model (it is thus somewhat similar to the AIC and BIC) and informs about how well the model would perform if it were applied to the population that the sample is drawn from. Ideally, the difference between multiple and adjusted R2-value should be very small as this means that the model is not overfitted. If, however, the difference between multiple and adjusted R2-value is substantial, then this would strongly suggest that the model is unstable and overfitted to the data while being inadequate for drawing inferences about the population. Differences between multiple and adjusted R2-values indicate that the data contains outliers that cause the distribution of the data on which the model is based to differ from the distributions that the model mathematically requires to provide reliable estimates. The difference between multiple and adjusted R2-value in our model is very small (85.2-84.7=.05) and should not cause concern.

Before continuing, we will calculate the confidence intervals of the coefficients.

# extract confidence intervals of the coefficients

confint(m2.mlr)## 2.5 % 97.5 %

## (Intercept) 91.6225795890 106.6870204110

## statusSingle 47.0406317400 68.3449682600

## attractionNotInterested -58.3149682600 -37.0106317400

## statusSingle:attractionNotInterested -78.2432408219 -48.1143591781# create and compare baseline- and minimal adequate model

m0.mlr <- lm(money ~1, data = mlrdata)

anova(m0.mlr, m2.mlr)## Analysis of Variance Table

##

## Model 1: money ~ 1

## Model 2: money ~ (status + attraction)^2

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 99 233562.28650

## 2 96 34557.56428 3 199004.7222 184.27662 < 0.000000000000000222 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Now, we compare the final minimal adequate model to the base-line model to test whether then final model significantly outperforms the baseline model.

# compare baseline- and minimal adequate model

Anova(m0.mlr, m2.mlr, type = "III")## Anova Table (Type III tests)

##

## Response: money

## Sum Sq Df F value Pr(>F)

## (Intercept) 781015.8300 1 2169.64133 < 0.000000000000000222 ***

## Residuals 34557.5643 96

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The comparison between the two model confirms that the minimal adequate model performs significantly better (makes significantly more accurate estimates of the outcome variable) compared with the baseline model.

Model diagnostics

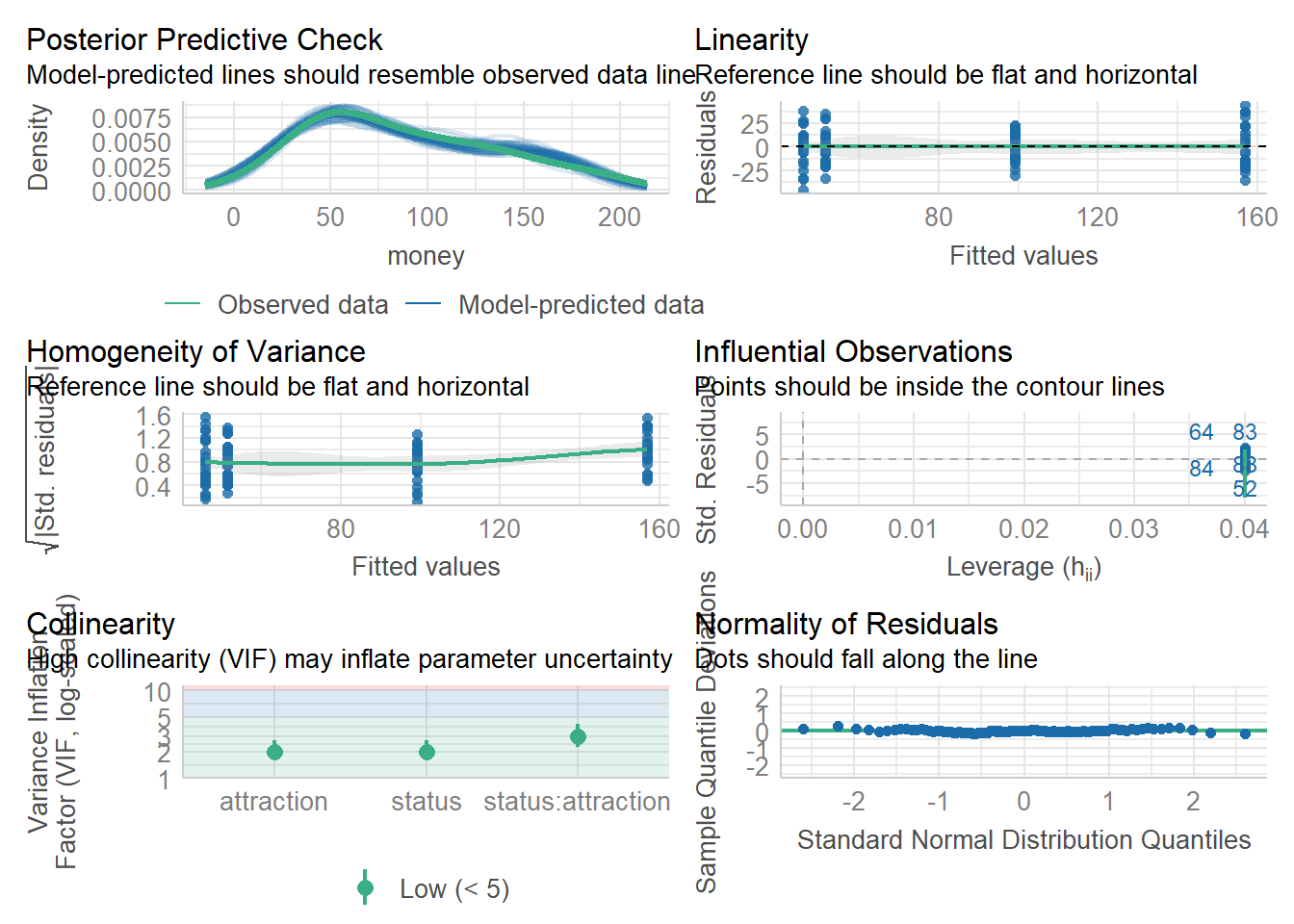

We now check the model performance using the

model_performance() function and to diagnose the model, we

are using the check_model() function from the

performance package (Lüdecke et al. 2021).

We start by checking the performance of the null-model against the performance values of the final minimal adequate model. Ideally, the final minimal adequate model would show higher explanatory power (higher R2 values) and better parsimony (lower AIC and AICc as well as BIC values).

performance::model_performance(m0.mlr)## # Indices of model performance

##

## AIC | AICc | BIC | R2 | R2 (adj.) | RMSE | Sigma

## --------------------------------------------------------------------

## 1063.391 | 1063.515 | 1068.601 | 0.000 | 0.000 | 48.328 | 48.572performance::model_performance(m2.mlr)## # Indices of model performance

##

## AIC | AICc | BIC | R2 | R2 (adj.) | RMSE | Sigma

## -----------------------------------------------------------------

## 878.309 | 878.947 | 891.335 | 0.852 | 0.847 | 18.590 | 18.973The output confirms that the final minimal adequate model performs

substantively better than the null model (higher explanatory power and

better parsimony). We now diagnose the model using the

check_model() function.

performance::check_model(m2.mlr)

Outlier Detection

In a next step, we now need to look for outliers check whether removing data points disproportionately decreases model fit. To begin with, we generate diagnostic plots.

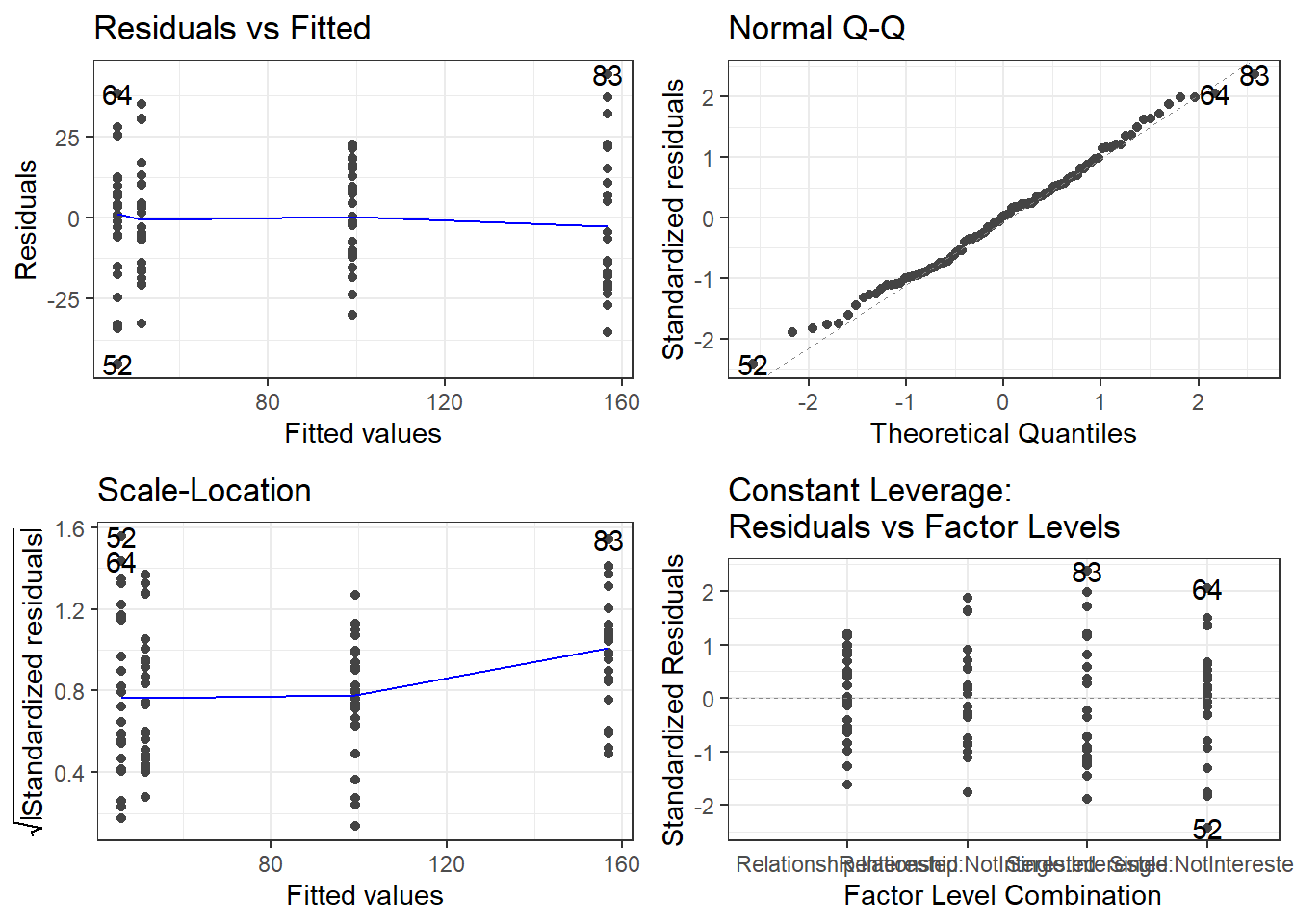

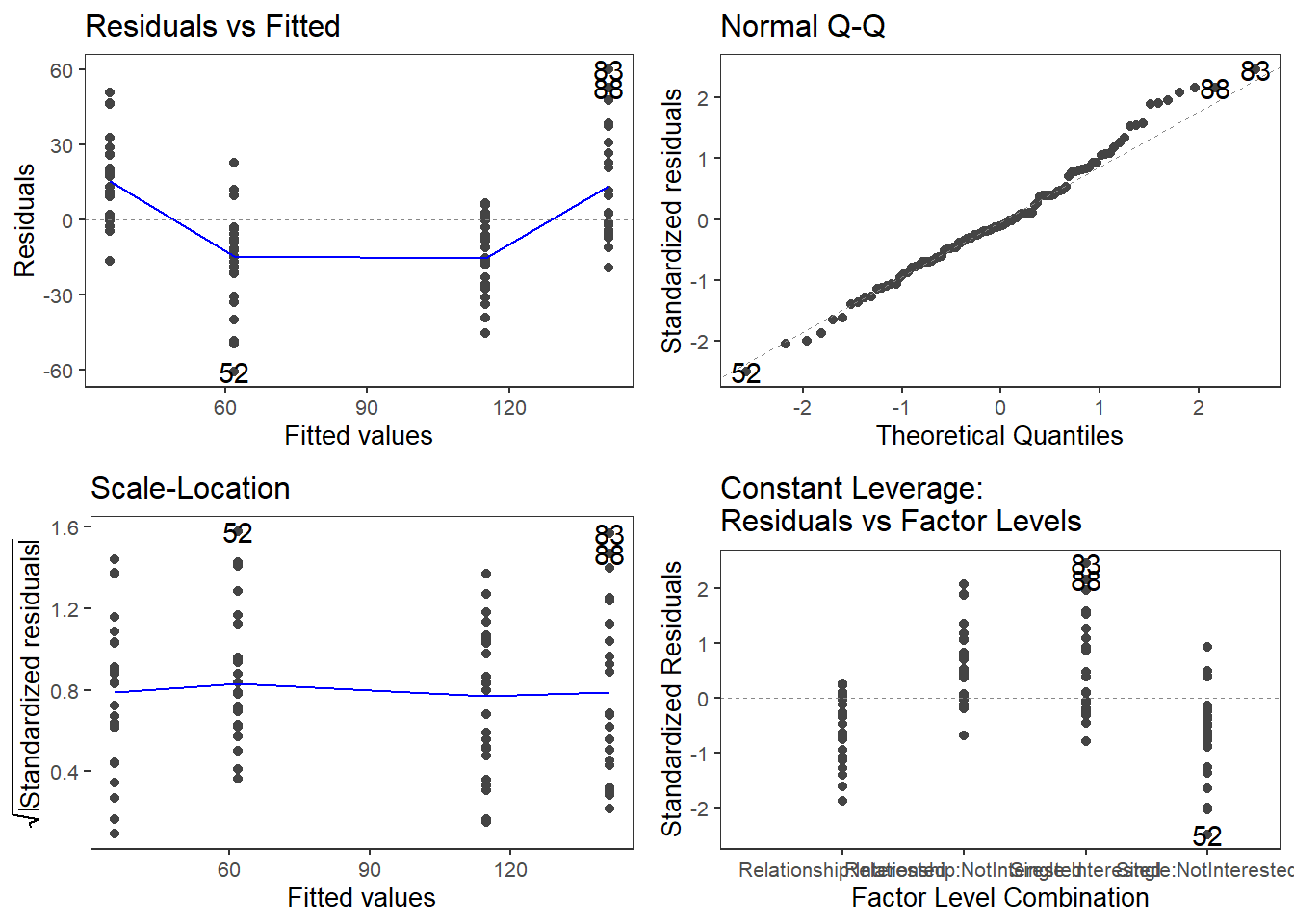

# generate plots

autoplot(m2.mlr) +

theme(panel.grid.major = element_blank(), panel.grid.minor = element_blank()) +

theme_bw()

The plots do not show severe problems such as funnel shaped patterns or drastic deviations from the diagonal line in Normal Q-Q plot (have a look at the explanation of what to look for and how to interpret these diagnostic plots in the section on simple linear regression) but data points 52, 64, and 83 are repeatedly indicated as potential outliers.

# determine a cutoff for data points that have D-values higher than 4/(n-k-1)

cutoff <- 4/((nrow(mlrdata)-length(m2.mlr$coefficients)-2))

# start plotting

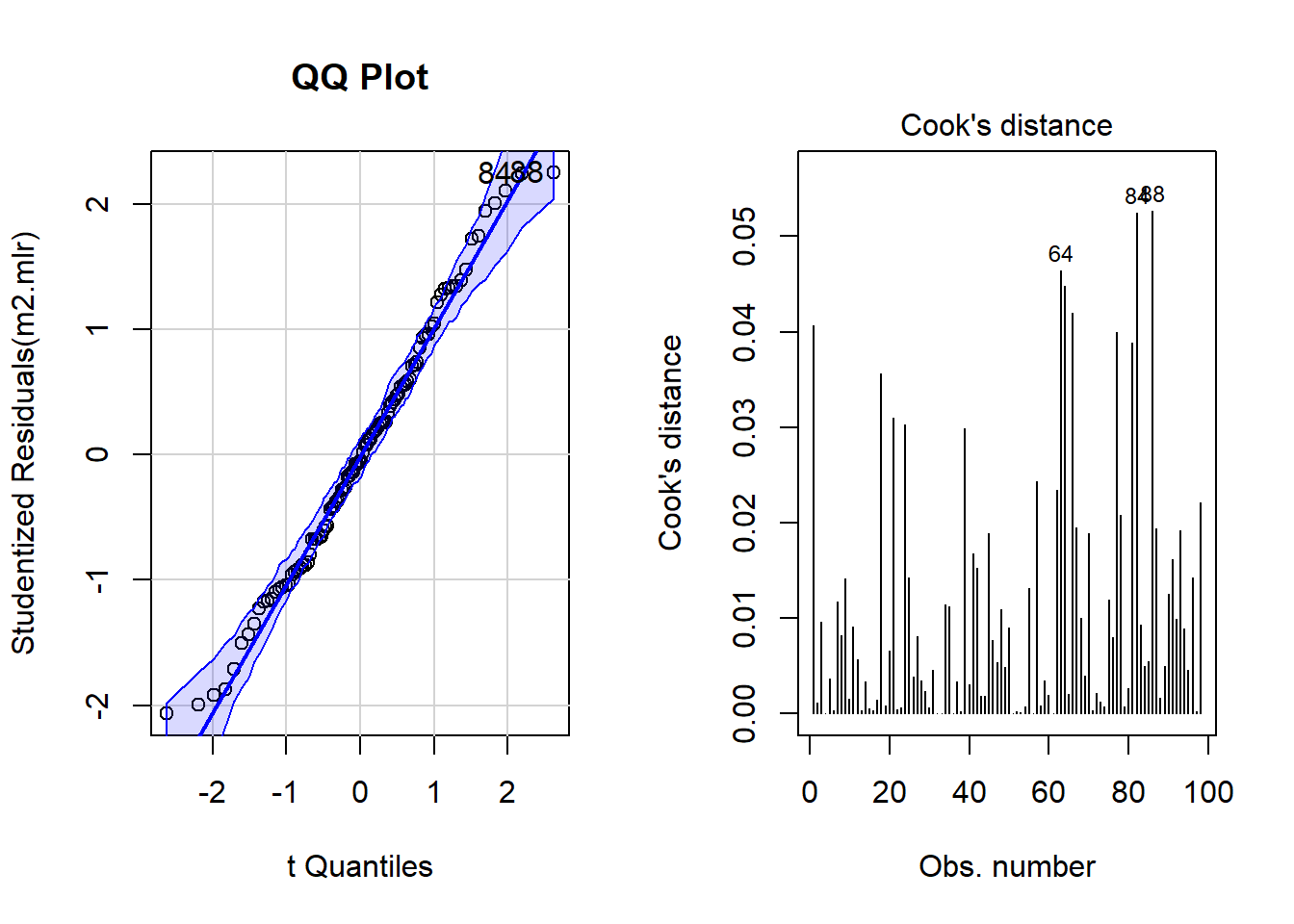

par(mfrow = c(1, 2)) # display plots in 3 rows/2 columns

qqPlot(m2.mlr, main="QQ Plot") # create qq-plot## [1] 52 83plot(m2.mlr, which=4, cook.levels = cutoff); par(mfrow = c(1, 1))

The graphs indicate that data points 52, 64, and 83 may be problematic. We will therefore statistically evaluate whether these data points need to be removed. In order to find out which data points require removal, we extract the influence measure statistics and add them to out data set.

# extract influence statistics

infl <- influence.measures(m2.mlr)

# add infl. statistics to data

mlrdata <- data.frame(mlrdata, infl[[1]], infl[[2]])

# annotate too influential data points

remove <- apply(infl$is.inf, 1, function(x) {

ifelse(x == TRUE, return("remove"), return("keep")) } )

# add annotation to data

mlrdata <- data.frame(mlrdata, remove)

# number of rows before removing outliers

nrow(mlrdata)## [1] 100# remove outliers

mlrdata <- mlrdata[mlrdata$remove == "keep", ]

# number of rows after removing outliers

nrow(mlrdata)## [1] 98The difference in row in the data set before and after removing data points indicate that two data points which represented outliers have been removed.

NOTE

In general, outliers should not simply be removed

unless there are good reasons for it (this could be that the outliers

represent measurement errors). If a data set contains outliers, one

should rather switch to methods that are better at handling outliers,

e.g. by using weights to account for data points with high leverage. One

alternative would be to switch to a robust regression (see here).

However, here we show how to proceed by removing outliers as this is a

common, though potentially problematic, method of dealing with outliers.

Rerun Regression

As we have decided to remove the outliers which means that we are now dealing with a different data set, we need to rerun the regression analysis. As the steps are identical to the regression analysis performed above, the steps will not be described in greater detail.

# recreate regression models on new data

m0.mlr = lm(money ~ 1, data = mlrdata)

m0.glm = glm(money ~ 1, family = gaussian, data = mlrdata)

m1.mlr = lm(money ~ (status + attraction)^2, data = mlrdata)

m1.glm = glm(money ~ status * attraction, family = gaussian,

data = mlrdata)

# automated AIC based model fitting

step(m1.mlr, direction = "both")## Start: AIC=570.29

## money ~ (status + attraction)^2

##

## Df Sum of Sq RSS AIC

## <none> 30411.31714 570.2850562

## - status:attraction 1 21646.86199 52058.17914 620.9646729##

## Call:

## lm(formula = money ~ (status + attraction)^2, data = mlrdata)

##

## Coefficients:

## (Intercept) statusSingle

## 99.1548000 55.8535333

## attractionNotInterested statusSingle:attractionNotInterested

## -47.6628000 -59.4613667# create new final models

m2.mlr = lm(money ~ (status + attraction)^2, data = mlrdata)

m2.glm = glm(money ~ status * attraction, family = gaussian,

data = mlrdata)

# inspect final minimal model

summary(m2.mlr)##

## Call:

## lm(formula = money ~ (status + attraction)^2, data = mlrdata)

##

## Residuals:

## Min 1Q Median 3Q Max

## -35.76416667 -13.50520000 -0.98948333 10.59887500 38.77166667

##

## Coefficients:

## Estimate Std. Error t value

## (Intercept) 99.15480000 3.59735820 27.56323

## statusSingle 55.85353333 5.14015367 10.86612

## attractionNotInterested -47.66280000 5.08743275 -9.36873

## statusSingle:attractionNotInterested -59.46136667 7.26927504 -8.17982

## Pr(>|t|)

## (Intercept) < 0.000000000000000222 ***

## statusSingle < 0.000000000000000222 ***

## attractionNotInterested 0.0000000000000040429 ***

## statusSingle:attractionNotInterested 0.0000000000013375166 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 17.986791 on 94 degrees of freedom

## Multiple R-squared: 0.857375902, Adjusted R-squared: 0.852824069

## F-statistic: 188.358387 on 3 and 94 DF, p-value: < 0.0000000000000002220446# extract confidence intervals of the coefficients

confint(m2.mlr)## 2.5 % 97.5 %

## (Intercept) 92.0121609656 106.2974390344

## statusSingle 45.6476377202 66.0594289465

## attractionNotInterested -57.7640169936 -37.5615830064

## statusSingle:attractionNotInterested -73.8946826590 -45.0280506744# compare baseline with final model

anova(m0.mlr, m2.mlr)## Analysis of Variance Table

##

## Model 1: money ~ 1

## Model 2: money ~ (status + attraction)^2

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 97 213227.06081

## 2 94 30411.31714 3 182815.7437 188.35839 < 0.000000000000000222 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# compare baseline with final model

Anova(m0.mlr, m2.mlr, type = "III")## Anova Table (Type III tests)

##

## Response: money

## Sum Sq Df F value Pr(>F)

## (Intercept) 760953.2107 1 2352.07181 < 0.000000000000000222 ***

## Residuals 30411.3171 94

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Additional Model Diagnostics

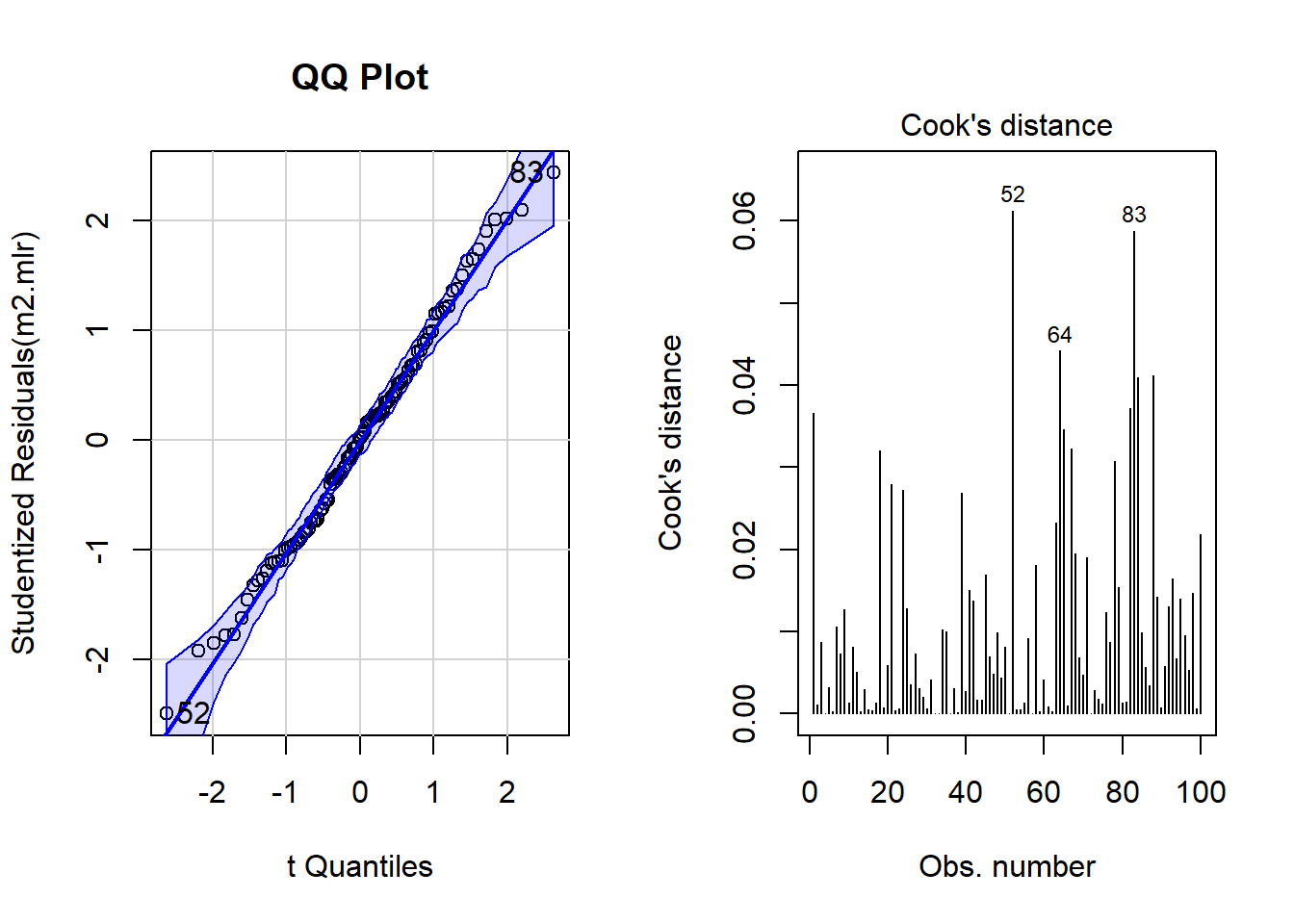

After rerunning the regression analysis on the updated data set, we again create diagnostic plots in order to check whether there are potentially problematic data points.

# generate plots

autoplot(m2.mlr) +

theme(panel.grid.major = element_blank(), panel.grid.minor = element_blank()) +

theme_bw()

# determine a cutoff for data points that have

# D-values higher than 4/(n-k-1)

cutoff <- 4/((nrow(mlrdata)-length(m2.mlr$coefficients)-2))

# start plotting

par(mfrow = c(1, 2)) # display plots in 1 row/2 columns

qqPlot(m2.mlr, main="QQ Plot") # create qq-plot## 84 88

## 82 86plot(m2.mlr, which=4, cook.levels = cutoff); par(mfrow = c(1, 1))

Although the diagnostic plots indicate that additional points may be problematic, but these data points deviate substantially less from the trend than was the case with the data points that have already been removed. To make sure that retaining the data points that are deemed potentially problematic by the diagnostic plots, is acceptable, we extract diagnostic statistics and add them to the data.

# add model diagnostics to the data

mlrdata <- mlrdata %>%

dplyr::mutate(residuals = resid(m2.mlr),

standardized.residuals = rstandard(m2.mlr),

studentized.residuals = rstudent(m2.mlr),

cooks.distance = cooks.distance(m2.mlr),

dffit = dffits(m2.mlr),

leverage = hatvalues(m2.mlr),

covariance.ratios = covratio(m2.mlr),

fitted = m2.mlr$fitted.values)We can now use these diagnostic statistics to create more precise diagnostic plots.

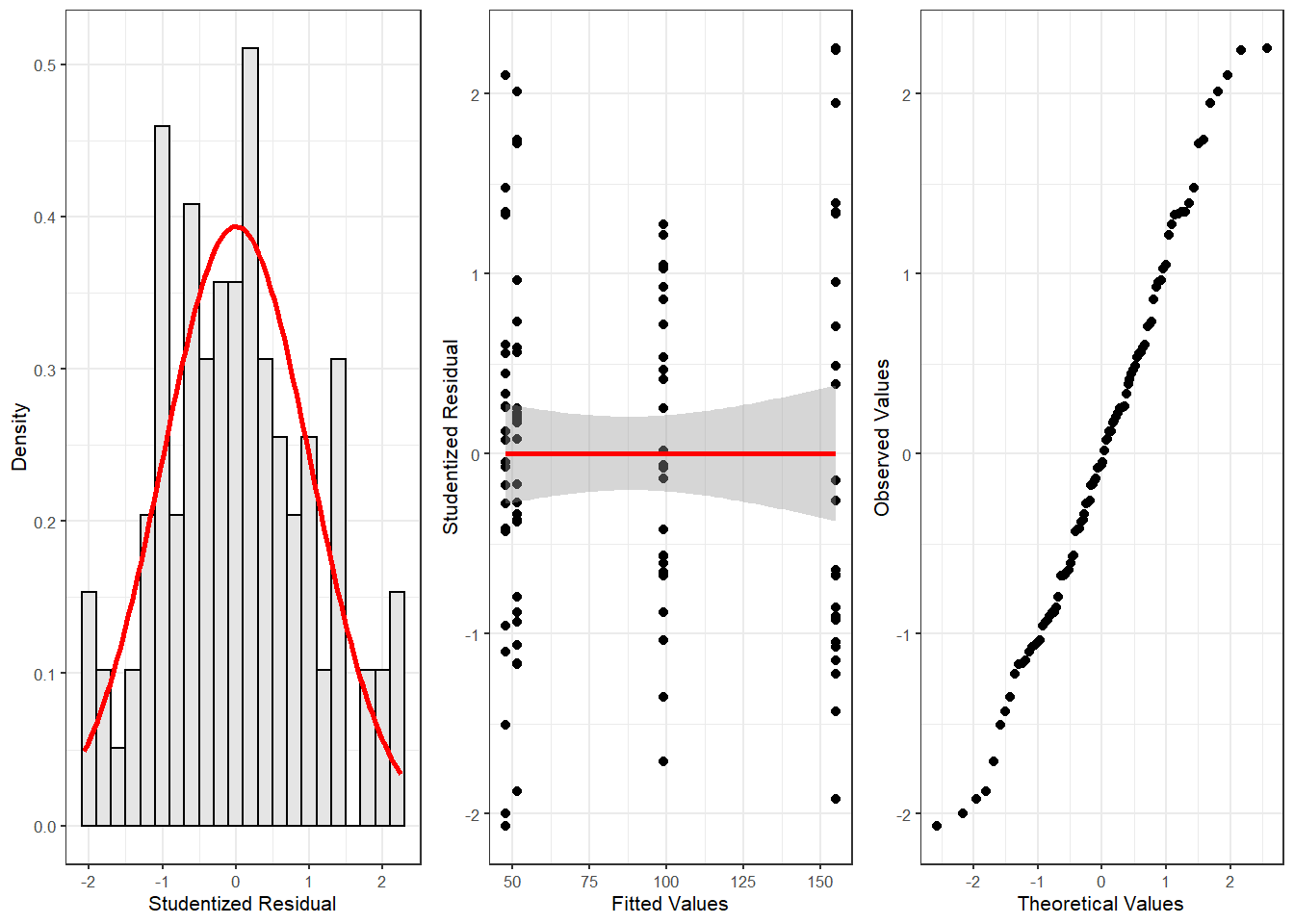

# plot 5

p5 <- ggplot(mlrdata,

aes(studentized.residuals)) +

theme(legend.position = "none")+

geom_histogram(aes(y=..density..),

binwidth = .2,

colour="black",

fill="gray90") +

labs(x = "Studentized Residual", y = "Density") +

stat_function(fun = dnorm,

args = list(mean = mean(mlrdata$studentized.residuals, na.rm = TRUE),

sd = sd(mlrdata$studentized.residuals, na.rm = TRUE)),

colour = "red", size = 1) +

theme_bw(base_size = 8)

# plot 6

p6 <- ggplot(mlrdata, aes(fitted, studentized.residuals)) +

geom_point() +

geom_smooth(method = "lm", colour = "Red")+

theme_bw(base_size = 8)+

labs(x = "Fitted Values",

y = "Studentized Residual")

# plot 7

p7 <- qplot(sample = mlrdata$studentized.residuals) +

theme_bw(base_size = 8) +

labs(x = "Theoretical Values",

y = "Observed Values")

gridExtra::grid.arrange(p5, p6, p7, nrow = 1)

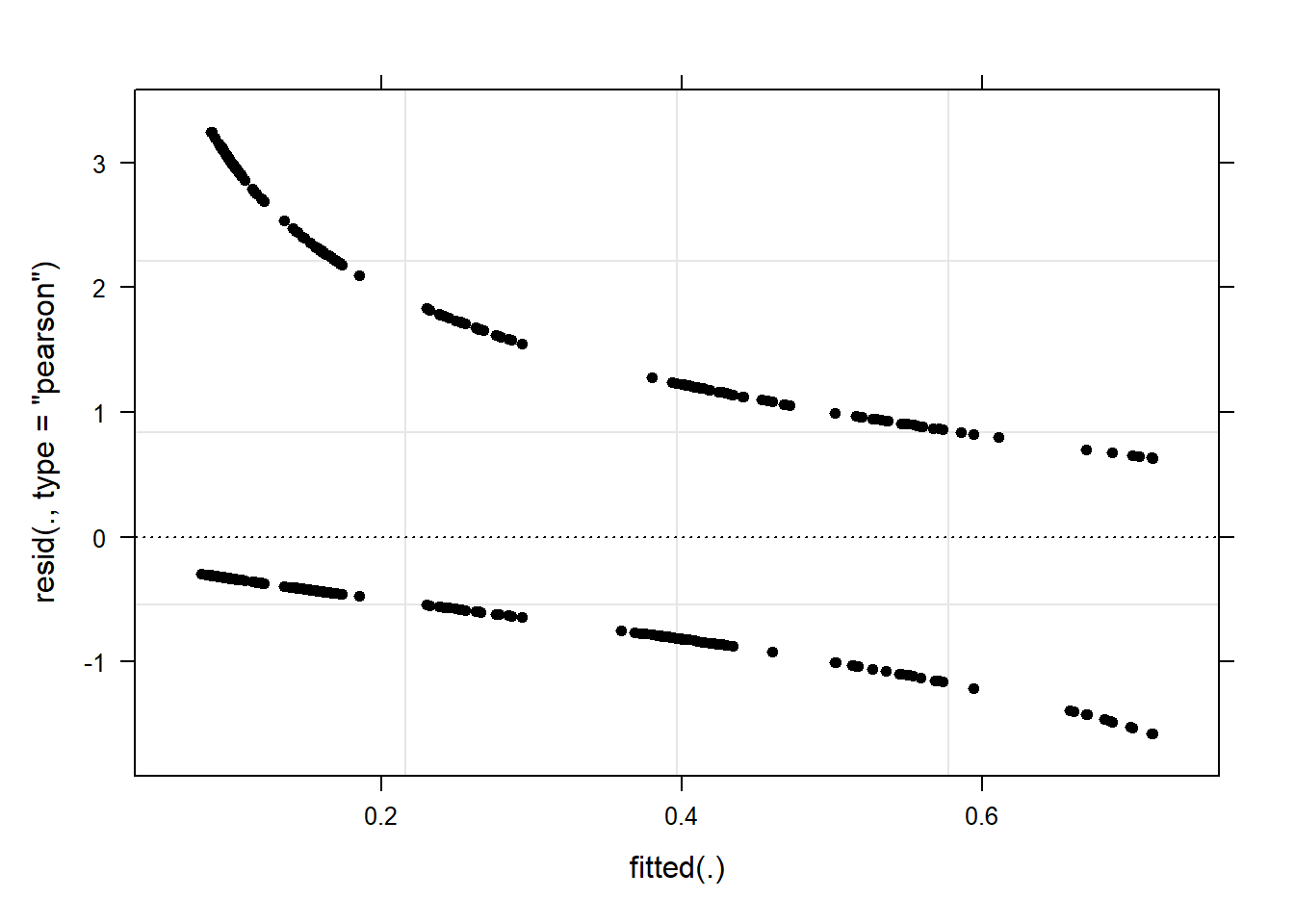

The new diagnostic plots do not indicate outliers that require removal. With respect to such data points the following parameters should be considered:

Data points with standardized residuals > 3.29 should be removed (Field, Miles, and Field 2012, 269)

If more than 1 percent of data points have standardized residuals exceeding values > 2.58, then the error rate of the model is unacceptable (Field, Miles, and Field 2012, 269).

If more than 5 percent of data points have standardized residuals exceeding values > 1.96, then the error rate of the model is unacceptable (Field, Miles, and Field 2012, 269)

In addition, data points with Cook’s D-values > 1 should be removed (Field, Miles, and Field 2012, 269)

Also, data points with leverage values higher than \(3(k + 1)/N\) or \(2(k + 1)/N\) (k = Number of predictors, N = Number of cases in model) should be removed (Field, Miles, and Field 2012, 270)

There should not be (any) autocorrelation among predictors. This means that independent variables cannot be correlated with itself (for instance, because data points come from the same subject). If there is autocorrelation among predictors, then a Repeated Measures Design or a (hierarchical) mixed-effects model should be implemented instead.

Predictors cannot substantially correlate with each other (multicollinearity) (see the subsection on (multi-)collinearity in the section of multiple binomial logistic regression for more details about (multi-)collinearity). If a model contains predictors that have variance inflation factors (VIF) > 10 the model is unreliable (Myers 1990) and predictors causing such VIFs should be removed. Indeed, even VIFs of 2.5 can be problematic (Szmrecsanyi 2006, 215) Indeed, Zuur, Ieno, and Elphick (2010) propose that variables with VIFs exceeding 3 should be removed!

NOTE

However, (multi-)collinearity is only an issue if one

is interested in interpreting regression results! If the interpretation

is irrelevant because what is relevant is prediction(!), then it does

not matter if the model contains collinear predictors! See Gries (2021) for a more elaborate

explanation.

- The mean value of VIFs should be ~ 1 (Bowerman and O’Connell 1990).

The following code chunk evaluates these criteria.

# 1: optimal = 0

# (listed data points should be removed)

which(mlrdata$standardized.residuals > 3.29)## named integer(0)# 2: optimal = 1

# (listed data points should be removed)

stdres_258 <- as.vector(sapply(mlrdata$standardized.residuals, function(x) {

ifelse(sqrt((x^2)) > 2.58, 1, 0) } ))

(sum(stdres_258) / length(stdres_258)) * 100## [1] 0# 3: optimal = 5

# (listed data points should be removed)

stdres_196 <- as.vector(sapply(mlrdata$standardized.residuals, function(x) {

ifelse(sqrt((x^2)) > 1.96, 1, 0) } ))

(sum(stdres_196) / length(stdres_196)) * 100## [1] 6.12244897959# 4: optimal = 0

# (listed data points should be removed)

which(mlrdata$cooks.distance > 1)## named integer(0)# 5: optimal = 0

# (data points should be removed if cooks distance is close to 1)

which(mlrdata$leverage >= (3*mean(mlrdata$leverage)))## named integer(0)# 6: checking autocorrelation:

# Durbin-Watson test (optimal: high p-value)

dwt(m2.mlr)## lag Autocorrelation D-W Statistic p-value

## 1 -0.0143324675649 1.9680423527 0.696

## Alternative hypothesis: rho != 0# 7: test multicollinearity 1

vif(m2.mlr)## statusSingle attractionNotInterested

## 2.00 1.96

## statusSingle:attractionNotInterested

## 2.96# 8: test multicollinearity 2

1/vif(m2.mlr)## statusSingle attractionNotInterested

## 0.500000000000 0.510204081633

## statusSingle:attractionNotInterested

## 0.337837837838# 9: mean vif should not exceed 1

mean(vif(m2.mlr))## [1] 2.30666666667Except for the mean VIF value (2.307) which should not exceed 1, all diagnostics are acceptable. We will now test whether the sample size is sufficient for our model. With respect to the minimal sample size and based on Green (1991), Field, Miles, and Field (2012, 273–74) offer the following rules of thumb for an adequate sample size (k = number of predictors; categorical predictors with more than two levels should be recoded as dummy variables):

if you are interested in the overall model: 50 + 8k (k = number of predictors)

if you are interested in individual predictors: 104 + k

if you are interested in both: take the higher value!

Evaluation of Sample Size

After performing the diagnostics, we will now test whether the sample size is adequate and what the values of R would be based on a random distribution in order to be able to estimate how likely a \(\beta\)-error is given the present sample size (see Field, Miles, and Field 2012, 274). Beta errors (or \(\beta\)-errors) refer to the erroneous assumption that a predictor is not significant (based on the analysis and given the sample) although it does have an effect in the population. In other words, \(\beta\)-error means to overlook a significant effect because of weaknesses of the analysis. The test statistics ranges between 0 and 1 where lower values are better. If the values approximate 1, then there is serious concern as the model is not reliable given the sample size. In such cases, unfortunately, the best option is to increase the sample size.

# load functions

source("https://slcladal.github.io/rscripts/SampleSizeMLR.r")

source("https://slcladal.github.io/rscripts/ExpR.r")

# check if sample size is sufficient

smplesz(m2.mlr)## [1] "Sample too small: please increase your sample by 9 data points"# check beta-error likelihood

expR(m2.mlr)## [1] "Based on the sample size expect a false positive correlation of 0.0309 between the predictors and the predicted"The function smplesz reports that the sample size is

insufficient by 9 data points according to Green

(1991). The likelihood of \(\beta\)-errors, however, is very small

(0.0309). As a last step, we summarize the results of the regression

analysis.

# tabulate model results

sjPlot::tab_model(m0.glm, m2.glm)| money | money | |||||

|---|---|---|---|---|---|---|

| Predictors | Estimates | CI | p | Estimates | CI | p |

| (Intercept) | 88.12 | 78.72 – 97.52 | <0.001 | 99.15 | 92.10 – 106.21 | <0.001 |

| status [Single] | 55.85 | 45.78 – 65.93 | <0.001 | |||

|

attraction [NotInterested] |

-47.66 | -57.63 – -37.69 | <0.001 | |||

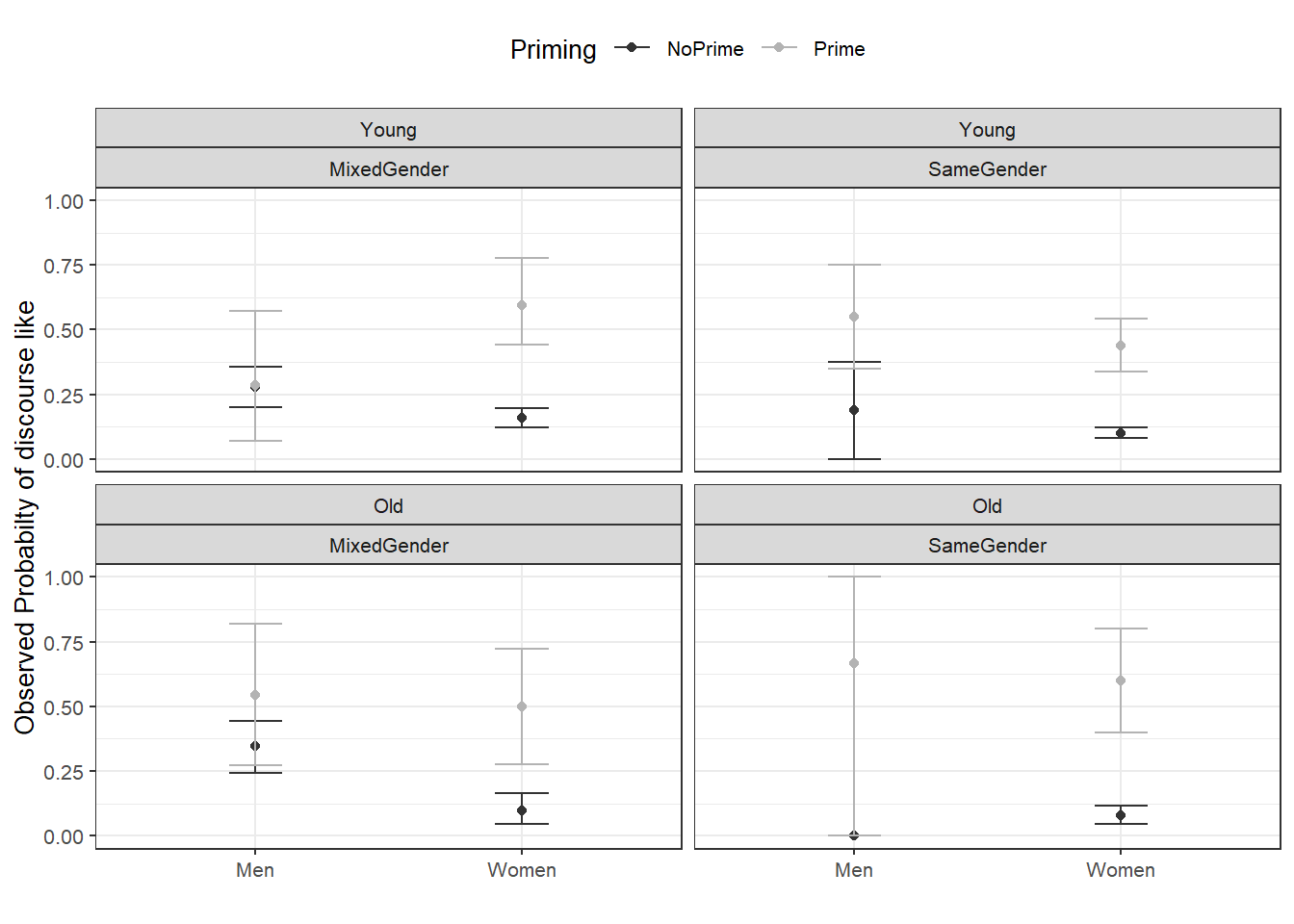

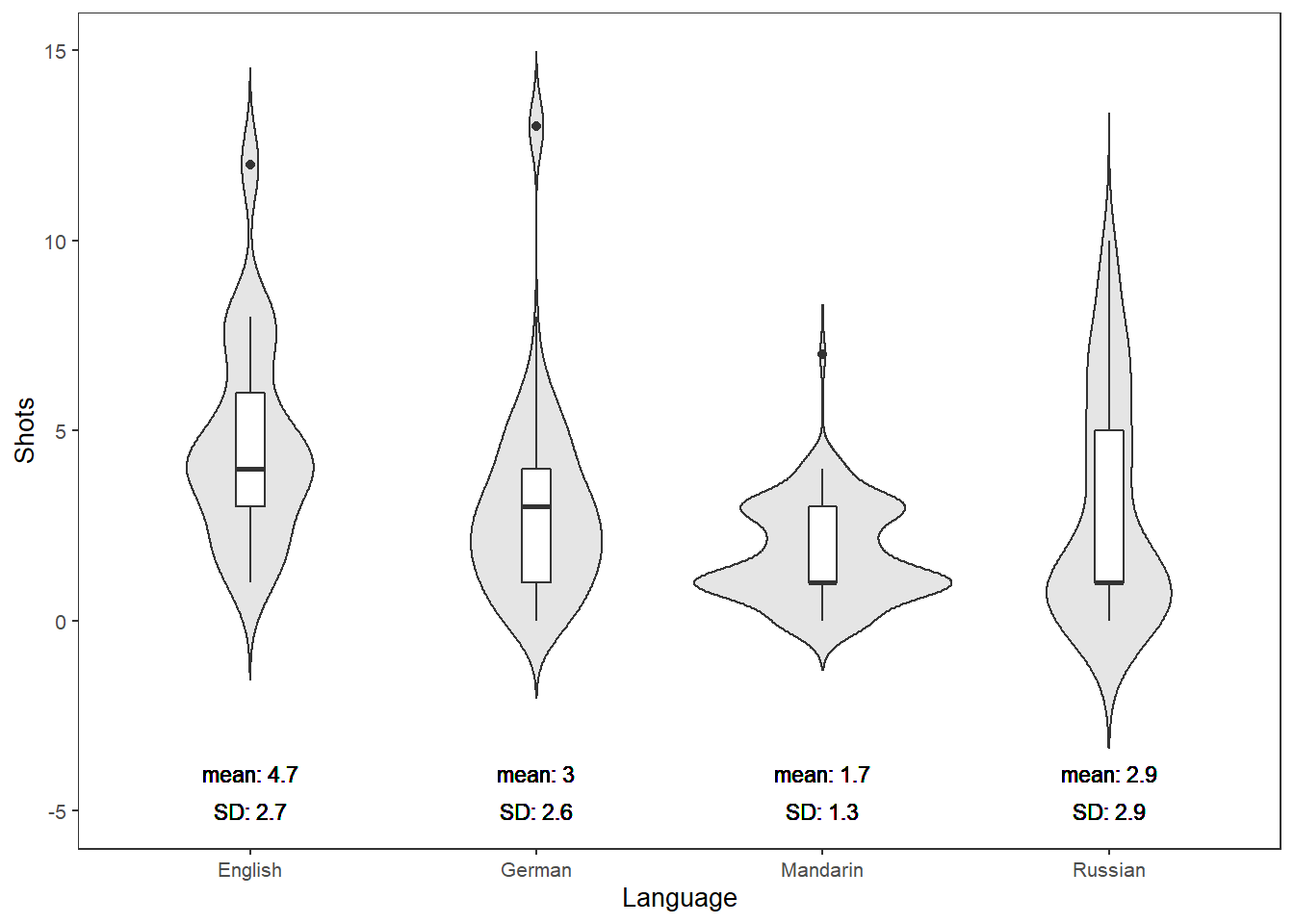

|