Computational Literary Stylistics with R

Dattatreya Majumdar and Martin Schweinberger

2024-11-08

Introduction

This tutorial focuses on computational literary stylistics (also known as digital literary stylistics) and shows how fictional texts can be analyzed using computational means.

This tutorial is aimed at beginners and intermediate users of R and showcases selected methods that are commonly used in, or useful for, computational literary stylistics with R. The aim is therefore not to provide a fully-fledged analysis but to show and exemplify selected useful methods. Another highly recommendable tutorial on computational literary stylistics with R is Silge and Robinson (2017), but the present tutorial substantially expands on Silge and Robinson (2017) in terms of the range of methods that are presented and the data sources that are used.

To be able to follow this tutorial, we suggest you check out and familiarize yourself with the content of the following R Basics tutorials:

- Getting started with R

- Loading, saving, and generating data in R

- String Processing in R

- Regular Expressions in R

Click here to download the entire R Notebook for this tutorial.

Click here to open an interactive Jupyter notebook that allows you to execute, change, and edit the code as well as to upload your own data.

What is computational literary stylistics?

Computational literary stylistics refers to the analysis of the language of literary texts by computational means using linguistic concepts and categories. Its goal is to find patterns among literary texts and to explain how literary meaning is created by specific language choices. Computational literary stylistics has been linked with distant reading, which aims to find patterns in large amounts of literary data that would not be detectable by traditional close reading techniques.

Preparation and session set up

This tutorial is based on R. If you have not installed R or are new to it, you will find an introduction to and more information on how to use R here. For this tutorial, we need to install certain packages so that the scripts shown below are executed without errors. Before turning to the code below, please install the packages by running the code below this paragraph. If you have already installed the packages mentioned below, you can skip ahead and ignore this section. Installing all of the packages may take some time (between 1 and 5 minutes), so you do not need to worry if it does not finish immediately.

# install packages

install.packages("tidytext")

install.packages("janeaustenr")

install.packages("tidyverse")

install.packages("quanteda")

install.packages("forcats")

install.packages("gutenbergr")

install.packages("flextable")

install.packages("quanteda.textstats")

install.packages("quanteda.textplots")

install.packages("lexRankr")

install.packages("Hmisc") # required by ggplot2's mean_cl_boot(), used in the sentence-length plot

# install klippy for copy-to-clipboard button in code chunks

install.packages("remotes")

remotes::install_github("rlesur/klippy")

We now activate these packages as shown below.

# set options

options(stringsAsFactors = FALSE) # do not convert strings to factors automatically

options("scipen" = 100, "digits" = 12) # suppress scientific notation

library(tidyverse)

library(janeaustenr)

library(tidytext)

library(forcats)

library(quanteda)

library(gutenbergr)

library(flextable)

# activate klippy for copy-to-clipboard button

klippy::klippy()

Once you have installed R and RStudio and initiated the session by executing the code shown above, you are good to go.

Getting started

To explore different methods used in literary stylistics, we will analyze selected works from Project Gutenberg. For this tutorial, we will download William Shakespeare’s Romeo and Juliet, Charles Darwin’s On the Origin of Species, Edgar Allan Poe’s The Raven, Jane Austen’s Pride and Prejudice, Arthur Conan Doyle’s The Adventures of Sherlock Holmes, and Mark Twain’s The Adventures of Tom Sawyer (to see how to download data from Project Gutenberg, check out this tutorial).

The code below downloads the data from a server that mirrors the content of Project Gutenberg (which is more stable than the Project itself).

shakespeare <- gutenbergr::gutenberg_works(gutenberg_id == "1513") %>%

gutenbergr::gutenberg_download(mirror = "http://mirror.csclub.uwaterloo.ca/gutenberg/")

darwin <- gutenbergr::gutenberg_works(gutenberg_id == "1228") %>%

gutenbergr::gutenberg_download(mirror = "http://mirror.csclub.uwaterloo.ca/gutenberg/")

twain <- gutenbergr::gutenberg_works(gutenberg_id == "74") %>%

gutenbergr::gutenberg_download(mirror = "http://mirror.csclub.uwaterloo.ca/gutenberg/")

poe <- gutenbergr::gutenberg_works(gutenberg_id == "1065") %>%

gutenbergr::gutenberg_download(mirror = "http://mirror.csclub.uwaterloo.ca/gutenberg/")

austen <- gutenbergr::gutenberg_works(gutenberg_id == "1342") %>%

gutenbergr::gutenberg_download(mirror = "http://mirror.csclub.uwaterloo.ca/gutenberg/")

doyle <- gutenbergr::gutenberg_works(gutenberg_id == "1661") %>%

gutenbergr::gutenberg_download(mirror = "http://mirror.csclub.uwaterloo.ca/gutenberg/")

gutenberg_id | text |

|---|---|

1,513 | THE TRAGEDY OF ROMEO AND JULIET |

1,513 | |

1,513 | |

1,513 | |

1,513 | by William Shakespeare |

1,513 | |

1,513 | |

1,513 | Contents |

1,513 | |

1,513 | THE PROLOGUE. |

1,513 | |

1,513 | ACT I |

1,513 | Scene I. A public place. |

1,513 | Scene II. A Street. |

1,513 | Scene III. Room in Capulet’s House. |

We can now begin to load and process the data.

Extracting words

The most basic but also most common task is to extract instances of individual words and see how they are used in context. This is also called concordancing. Extracted words are typically displayed in their context, which is why such a display is called a keyword-in-context concordance, or KWIC for short.
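The mechanics of a KWIC concordance can be sketched in a few lines of base R. This is a minimal illustration under simplifying assumptions, not how quanteda::kwic() is implemented. The helper simple_kwic() is hypothetical: it lower-cases the text, strips punctuation, and splits on whitespace.

```r
# Minimal keyword-in-context (KWIC) sketch in base R.
# simple_kwic() is a hypothetical helper; its tokenization (lower-casing, removing
# punctuation, splitting on whitespace) is far cruder than quanteda's tokenizer.
simple_kwic <- function(text, keyword, window = 3) {
  tokens <- strsplit(gsub("[[:punct:]]", "", tolower(text)), "\\s+")[[1]]
  tokens <- tokens[tokens != ""]
  # join tokens[from:to] into one string, or return "" if the range is empty
  span <- function(from, to) if (from > to) "" else paste(tokens[from:to], collapse = " ")
  hits <- which(tokens == tolower(keyword))  # positions of the keyword
  data.frame(
    from    = hits,
    pre     = vapply(hits, function(i) span(max(1, i - window), i - 1), character(1)),
    keyword = tokens[hits],
    post    = vapply(hits, function(i) span(i + 1, min(length(tokens), i + window)), character(1)),
    stringsAsFactors = FALSE
  )
}

simple_kwic("It is a truth universally acknowledged, that a single man in possession of a good fortune, must be in want of a wife.",
            "single", window = 3)
```

Here, the call returns one row with the pre-context "acknowledged that a" and the post-context "man in possession".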

The code below extracts the word pride from the novel Pride and Prejudice and displays the resulting instances of this keyword in a kwic.

# extract text

austen_text <- austen %>%

dplyr::summarise(text = paste0(text, collapse = " ")) %>%

dplyr::pull(text) %>% # extract the collapsed text as a character string

stringr::str_squish()

# give text a name

names(austen_text) <- "Pride & Prejudice"

# extract instances of pride

pride <- quanteda::kwic(quanteda::tokens(austen_text), "pride") %>%

as.data.frame()

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

Pride & Prejudice | 28 | 28 | _Chap 34 . _ ] | PRIDE | . and PREJUDICE by Jane | pride |

Pride & Prejudice | 580 | 580 | my part , declare for_ | Pride | and Prejudice _unhesitatingly . It | pride |

Pride & Prejudice | 1,009 | 1,009 | has ever been laid upon_ | Pride | and Prejudice ; _and I | pride |

Pride & Prejudice | 1,240 | 1,240 | I for one should put_ | Pride | and Prejudice _far lower if | pride |

Pride & Prejudice | 3,526 | 3,526 | of the minor characters in_ | Pride | and Prejudice _has been already | pride |

Pride & Prejudice | 4,028 | 4,028 | , been urged that his | pride | is unnatural at first in | pride |

Pride & Prejudice | 4,072 | 4,072 | the way in which his | pride | had been pampered , is | pride |

Pride & Prejudice | 5,871 | 5,871 | 476 [ Illustration : · | PRIDE | AND PREJUDICE · Chapter I | pride |

Pride & Prejudice | 12,178 | 12,178 | he is eat up with | pride | , and I dare say | pride |

Pride & Prejudice | 12,285 | 12,285 | him . ” “ His | pride | , ” said Miss Lucas | pride |

Pride & Prejudice | 12,300 | 12,300 | offend _me_ so much as | pride | often does , because there | pride |

Pride & Prejudice | 12,372 | 12,372 | I could easily forgive _his_ | pride | , if he had not | pride |

Pride & Prejudice | 12,383 | 12,383 | mortified _mine_ . ” “ | Pride | , ” observed Mary , | pride |

Pride & Prejudice | 12,467 | 12,467 | or imaginary . Vanity and | pride | are different things , though | pride |

Pride & Prejudice | 12,489 | 12,489 | proud without being vain . | Pride | relates more to our opinion | pride |

The kwic display could now be processed further or could be inspected to see how the keyword in question (pride) is used in this novel.

We can also inspect the use of phrases, for example natural selection, expand the context window size, and clean the output (as shown below).

# extract text

darwin_text <- darwin %>%

dplyr::summarise(text = paste0(text, collapse = " ")) %>%

dplyr::pull(text) %>% # extract the collapsed text as a character string

stringr::str_squish()

# generate kwics

ns <- quanteda::kwic(quanteda::tokens(darwin_text), phrase("natural selection"), window = 10) %>%

as.data.frame() %>%

dplyr::select(-docname, -from, -to, -pattern)

pre | keyword | post |

|---|---|---|

edition . On the Origin of Species BY MEANS OF | NATURAL SELECTION | , OR THE PRESERVATION OF FAVOURED RACES IN THE STRUGGLE |

NATURE . 3 . STRUGGLE FOR EXISTENCE . 4 . | NATURAL SELECTION | . 5 . LAWS OF VARIATION . 6 . DIFFICULTIES |

. CHAPTER 3 . STRUGGLE FOR EXISTENCE . Bears on | natural selection | . The term used in a wide sense . Geometrical |

the most important of all relations . CHAPTER 4 . | NATURAL SELECTION | . Natural Selection : its power compared with man’s selection |

of all relations . CHAPTER 4 . NATURAL SELECTION . | Natural Selection | : its power compared with man’s selection , its power |

of the same species . Circumstances favourable and unfavourable to | Natural Selection | , namely , intercrossing , isolation , number of individuals |

number of individuals . Slow action . Extinction caused by | Natural Selection | . Divergence of Character , related to the diversity of |

any small area , and to naturalisation . Action of | Natural Selection | , through Divergence of Character and Extinction , on the |

of external conditions . Use and disuse , combined with | natural selection | ; organs of flight and of vision . Acclimatisation . |

of the Conditions of Existence embraced by the theory of | Natural Selection | . CHAPTER 7 . INSTINCT . Instincts comparable with habits |

its cell-making instinct . Difficulties on the theory of the | Natural Selection | of instincts . Neuter or sterile insects . Summary . |

CONCLUSION . Recapitulation of the difficulties on the theory of | Natural Selection | . Recapitulation of the general and special circumstances in its |

the immutability of species . How far the theory of | natural selection | may be extended . Effects of its adoption on the |

its new and modified form . This fundamental subject of | Natural Selection | will be treated at some length in the fourth chapter |

the fourth chapter ; and we shall then see how | Natural Selection | almost inevitably causes much Extinction of the less improved forms |

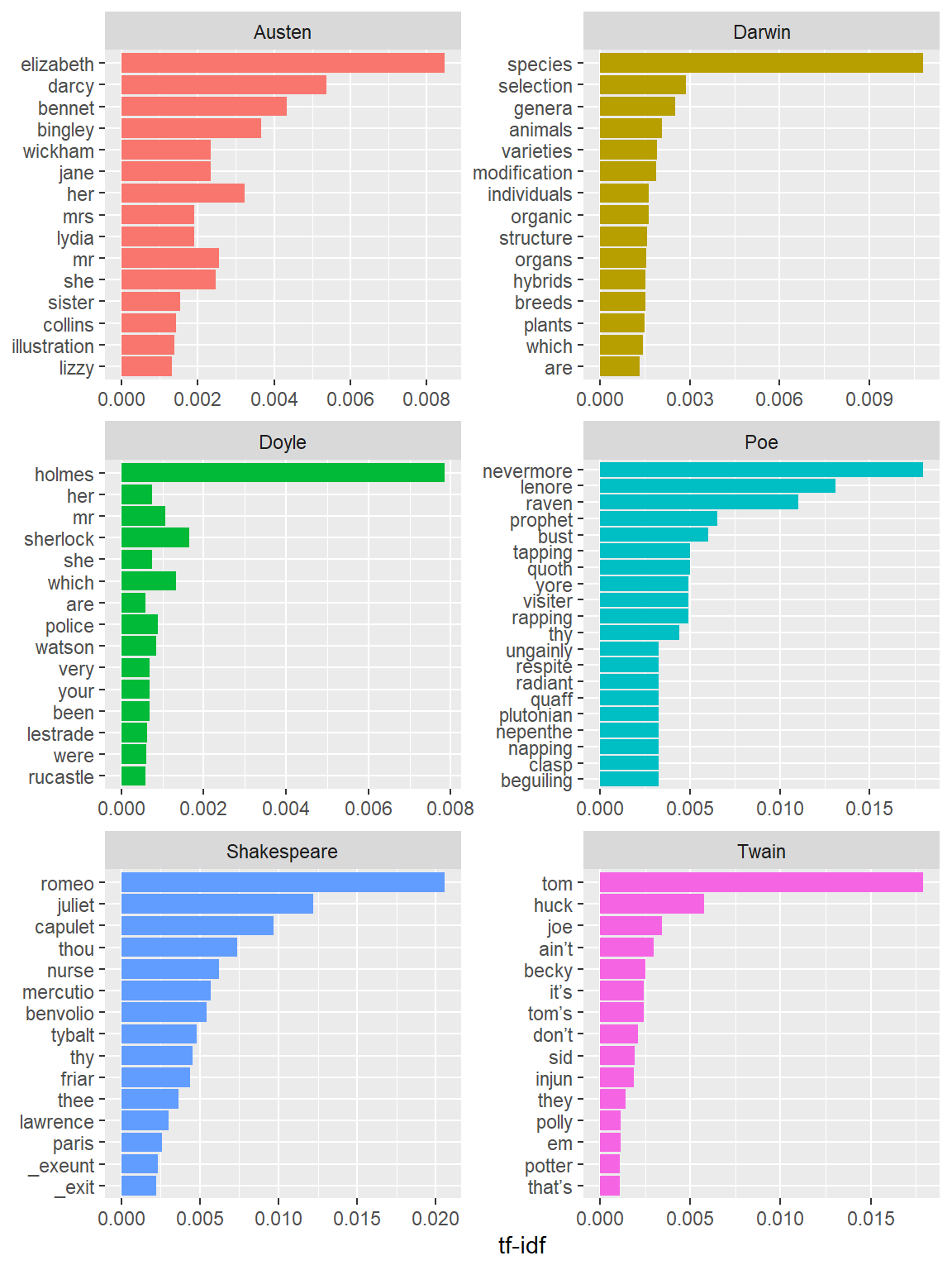

Identifying Keywords

Another common task in literary stylistics is to extract terms that are particularly characteristic of a given text. The problem underlying the identification of keywords is to figure out the importance of words in each document. We can assign higher weights to words that are frequent in a given text but rare in the other texts of the collection, and we can then show terms ordered by their relative weight. Using the bind_tf_idf() function from the tidytext package, we can extract term frequency-inverse document frequency (tf-idf) scores, which represent these relative weights. We can also report other parameters, such as the number of occurrences of a word, the total number of words, and the term frequency.

Before we continue, we need to define certain terms and concepts that we will use repeatedly in this tutorial, so that the analysis shown below makes sense.

Term frequency (tf) measures how frequently a word appears in a document, i.e. the number of occurrences of the word divided by the total number of words in that document. However, some words, such as the, is, and of, appear frequently even though they might not be important. Removing such words with a list of stop words before the analysis can be useful, but in some documents these words might be highly relevant.

Inverse document frequency (idf) decreases the weight of words that occur in many documents of a collection and increases the weight of words that occur in only a few documents. Multiplying the term frequency by the inverse document frequency yields a term's tf-idf, which adjusts the frequency of the term based on how rarely it is used across the collection. Mathematically, idf can be expressed as follows:

\[\begin{equation} idf_{(term)} = \ln\left(\frac{n_{documents}}{n_{documents\; containing\; term}}\right) \end{equation}\]
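The formulas can be checked by hand on a toy corpus. The minimal base-R sketch below computes tf, idf (with the natural logarithm), and their product. The three "documents" are hypothetical token vectors. A word that occurs in every document gets idf = ln(1) = 0. With four documents, a word occurring in only one of them gets ln(4) ≈ 1.39, which matches the idf that the output below reports for words such as romeo and nevermore.

```r
# Hypothetical toy corpus: three "documents" given as vectors of word tokens
docs <- list(
  d1 = c("the", "raven", "said", "nevermore"),
  d2 = c("the", "species", "vary"),
  d3 = c("the", "raven", "flew")
)

vocab  <- sort(unique(unlist(docs)))
n_docs <- length(docs)

# document frequency: in how many documents does each word occur?
doc_freq <- vapply(vocab, function(w) sum(vapply(docs, function(d) w %in% d, logical(1))), integer(1))

# idf = ln(n_documents / n_documents containing the term)
idf <- log(n_docs / doc_freq)

# tf = occurrences of a word / total words in its document; tf-idf = tf * idf
tf_idf <- do.call(rbind, lapply(names(docs), function(doc) {
  counts <- table(docs[[doc]])
  words  <- names(counts)
  tf     <- as.numeric(counts) / length(docs[[doc]])
  data.frame(doc = doc, word = words, tf = tf, idf = unname(idf[words]),
             tf_idf = tf * unname(idf[words]), stringsAsFactors = FALSE)
}))

tf_idf[order(-tf_idf$tf_idf), ]
```

In this sketch, the, which occurs in all three documents, ends up with a tf-idf of 0. Words confined to a single document receive the highest scores.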

Before we calculate the tf-idf, we combine all the books into a single data frame.

books <- rbind(shakespeare, darwin, twain, poe, austen, doyle)

# add names to books

books$book <- c(

rep("Shakespeare", nrow(shakespeare)),

rep("Darwin", nrow(darwin)),

rep("Twain", nrow(twain)),

rep("Poe", nrow(poe)),

rep("Austen", nrow(austen)),

rep("Doyle", nrow(doyle))

)

# clean data

books <- books %>%

dplyr::filter(text != "") %>%

dplyr::mutate(book = factor(book)) %>%

dplyr::select(-gutenberg_id)

text | book |

|---|---|

THE TRAGEDY OF ROMEO AND JULIET | Shakespeare |

by William Shakespeare | Shakespeare |

Contents | Shakespeare |

THE PROLOGUE. | Shakespeare |

ACT I | Shakespeare |

Scene I. A public place. | Shakespeare |

Scene II. A Street. | Shakespeare |

Scene III. Room in Capulet’s House. | Shakespeare |

Scene IV. A Street. | Shakespeare |

Scene V. A Hall in Capulet’s House. | Shakespeare |

ACT II | Shakespeare |

CHORUS. | Shakespeare |

Scene I. An open place adjoining Capulet’s Garden. | Shakespeare |

Scene II. Capulet’s Garden. | Shakespeare |

Scene III. Friar Lawrence’s Cell. | Shakespeare |

Now, we continue by calculating the tf-idf for each term in each of the books.

book_words <- books %>%

tidytext::unnest_tokens(word, text) %>%

dplyr::count(book, word, sort = TRUE) %>%

dplyr::group_by(book) %>%

dplyr::mutate(total = sum(n))

book_tf_idf <- book_words %>%

tidytext::bind_tf_idf(word, book, n)

book | word | n | total | tf | idf | tf_idf |

|---|---|---|---|---|---|---|

Darwin | the | 10,301 | 157,002 | 0.0656106291640 | 0.000000000000 | 0.00000000000000 |

Darwin | of | 7,864 | 157,002 | 0.0500885339040 | 0.000000000000 | 0.00000000000000 |

Austen | the | 4,656 | 127,996 | 0.0363761367543 | 0.000000000000 | 0.00000000000000 |

Darwin | and | 4,443 | 157,002 | 0.0282990025605 | 0.000000000000 | 0.00000000000000 |

Austen | to | 4,323 | 127,996 | 0.0337744929529 | 0.000000000000 | 0.00000000000000 |

Darwin | in | 4,017 | 157,002 | 0.0255856613292 | 0.000000000000 | 0.00000000000000 |

Austen | of | 3,838 | 127,996 | 0.0299853120410 | 0.000000000000 | 0.00000000000000 |

Austen | and | 3,763 | 127,996 | 0.0293993562299 | 0.000000000000 | 0.00000000000000 |

Darwin | to | 3,613 | 157,002 | 0.0230124457013 | 0.000000000000 | 0.00000000000000 |

Darwin | a | 2,471 | 157,002 | 0.0157386530108 | 0.000000000000 | 0.00000000000000 |

Austen | her | 2,260 | 127,996 | 0.0176568017751 | 0.287682072452 | 0.00507954532752 |

Austen | i | 2,095 | 127,996 | 0.0163676989906 | 0.000000000000 | 0.00000000000000 |

Darwin | that | 2,086 | 157,002 | 0.0132864549496 | 0.000000000000 | 0.00000000000000 |

Austen | a | 2,036 | 127,996 | 0.0159067470858 | 0.000000000000 | 0.00000000000000 |

Austen | in | 1,991 | 127,996 | 0.0155551735992 | 0.000000000000 | 0.00000000000000 |

The table above shows that words occurring in every text (such as the, of, and and) have an inverse document frequency of ln(1) = 0, and therefore a tf-idf score of 0. The inverse document frequency increases as the number of texts containing a word decreases, so it is higher for words that occur in fewer documents in the collection.

book_tf_idf %>%

dplyr::select(-total) %>%

dplyr::arrange(desc(tf_idf))

## # A tibble: 18,777 × 6

## # Groups: book [4]

## book word n tf idf tf_idf

## <fct> <chr> <int> <dbl> <dbl> <dbl>

## 1 Shakespeare romeo 300 0.0115 1.39 0.0159

## 2 Poe nevermore 11 0.0100 1.39 0.0139

## 3 Poe lenore 8 0.00730 1.39 0.0101

## 4 Shakespeare juliet 178 0.00681 1.39 0.00945

## 5 Shakespeare nurse 148 0.00567 1.39 0.00785

## 6 Poe bust 6 0.00547 1.39 0.00759

## 7 Shakespeare capulet 141 0.00540 1.39 0.00748

## 8 Shakespeare thou 277 0.0106 0.693 0.00735

## 9 Poe raven 11 0.0100 0.693 0.00696

## 10 Darwin species 1546 0.00985 0.693 0.00683

## # ℹ 18,767 more rows

# inspect

head(book_tf_idf)

## # A tibble: 6 × 7

## # Groups: book [2]

## book word n total tf idf tf_idf

## <fct> <chr> <int> <int> <dbl> <dbl> <dbl>

## 1 Darwin the 10301 157002 0.0656 0 0

## 2 Darwin of 7864 157002 0.0501 0 0

## 3 Austen the 4656 127996 0.0364 0 0

## 4 Darwin and 4443 157002 0.0283 0 0

## 5 Austen to 4323 127996 0.0338 0 0

## 6 Darwin in 4017 157002 0.0256 0 0

Next, we plot the 15 words with the highest tf-idf scores for each novel to show which words are particularly characteristic of each of the novels.

book_tf_idf %>%

dplyr::group_by(book) %>%

slice_max(tf_idf, n = 15) %>%

dplyr::ungroup() %>%

ggplot(aes(tf_idf, fct_reorder(word, tf_idf), fill = book)) +

geom_col(show.legend = FALSE) +

facet_wrap(~book, ncol = 2, scales = "free") +

labs(x = "tf-idf", y = NULL)

Highest tf-idf words in each of the literary texts

As you can see, the method has indeed extracted words (and by extension concepts) that are characteristic of the texts.

Extracting Structural Features

Extracting structural features of texts is a very common task with a wide range of applications. Examples include determining whether texts belong to the same genre, or whether texts represent a real language or a made-up, nonsensical language.

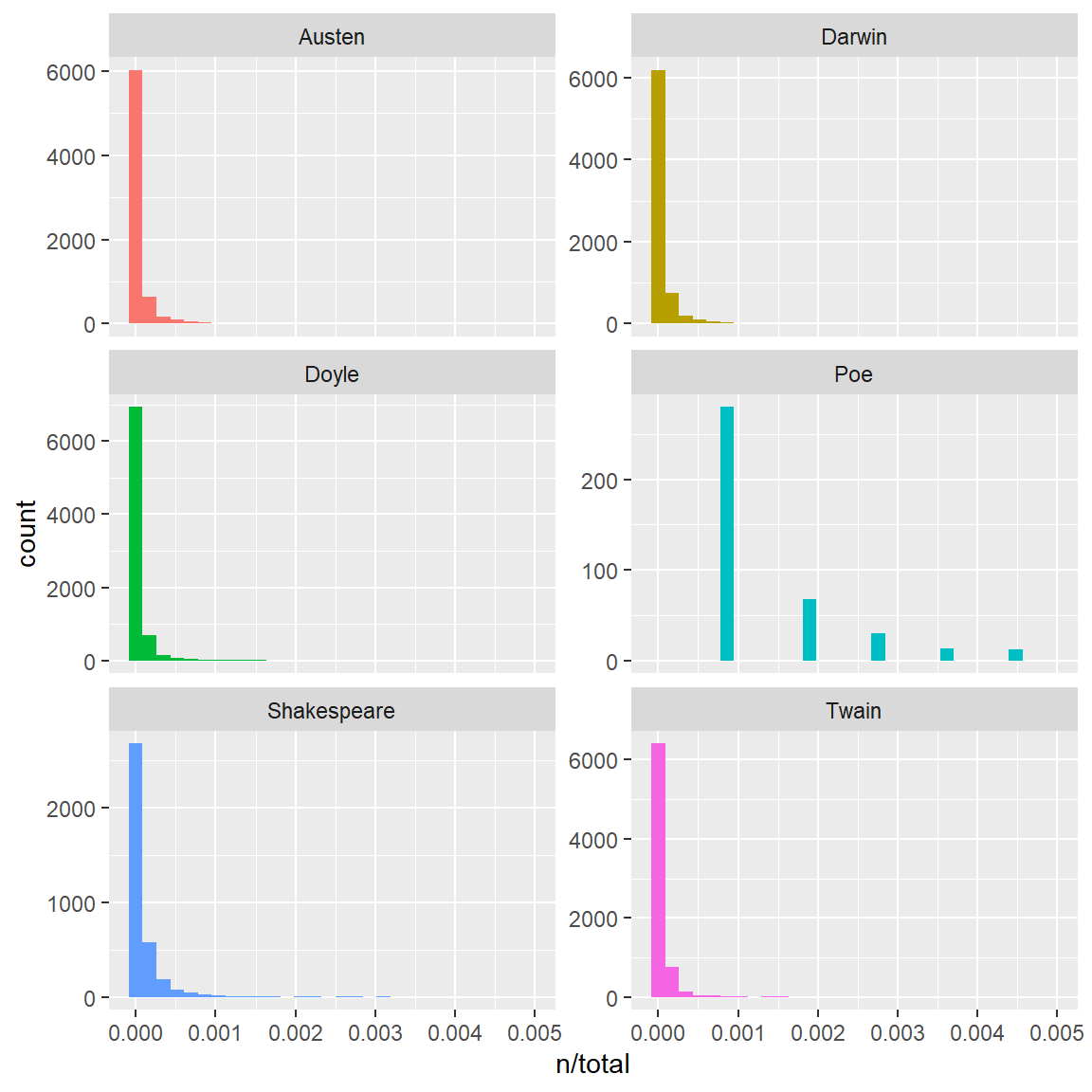

Word-Frequency Distributions

Word-frequency distributions can be used to determine whether a text represents natural language or not. A simple replacement cipher still counts as natural language here, because it preserves the frequency distribution of the underlying words, whereas a more complex cipher does not. In the following, we will check whether the language of the texts we have downloaded from Project Gutenberg aligns with the distributions we would expect from natural language. In a first step, we count how often each word occurs in each text and how many words each text contains in total.

book_words <- books %>%

tidytext::unnest_tokens(word, text) %>%

dplyr::count(book, word, sort = TRUE) %>%

dplyr::group_by(book) %>%

dplyr::mutate(total = sum(n))

book | word | n | total |

|---|---|---|---|

Darwin | the | 10,301 | 157,002 |

Darwin | of | 7,864 | 157,002 |

Austen | the | 4,656 | 127,996 |

Darwin | and | 4,443 | 157,002 |

Austen | to | 4,323 | 127,996 |

Darwin | in | 4,017 | 157,002 |

Austen | of | 3,838 | 127,996 |

Austen | and | 3,763 | 127,996 |

Darwin | to | 3,613 | 157,002 |

Darwin | a | 2,471 | 157,002 |

Austen | her | 2,260 | 127,996 |

Austen | i | 2,095 | 127,996 |

Darwin | that | 2,086 | 157,002 |

Austen | a | 2,036 | 127,996 |

Austen | in | 1,991 | 127,996 |

The table above shows that the usual suspects (the, and, to, and so forth) lead in terms of usage frequency in the texts. Now let us look at the distribution of n/total for each term in each of the texts, i.e. the normalized term frequency.

ggplot(book_words, aes(n/total, fill = book)) +

geom_histogram(show.legend = FALSE) +

xlim(NA, 0.005) +

facet_wrap(~book, ncol = 2, scales = "free_y")

Term frequency distributions

The plots show a highly skewed, long-tailed distribution: most words occur only rarely, while only a few words occur frequently. This relationship is captured by Zipf’s law.
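The claim that most words occur rarely can be quantified with a frequency spectrum. This is a table of how many word types occur once, twice, and so on. The base-R sketch below uses a hypothetical token vector in place of a real tokenized text. It also computes the share of types that occur exactly once (hapax legomena).

```r
# Hypothetical token vector standing in for a tokenized text
tokens <- c("the", "raven", "the", "door", "the", "raven",
            "nevermore", "lenore", "bust", "the")

word_freq <- table(tokens)     # frequency of each word type
spectrum  <- table(word_freq)  # number of types that occur once, twice, ...
spectrum

# proportion of word types that occur exactly once (hapax legomena)
hapax_share <- sum(word_freq == 1) / length(word_freq)
hapax_share
```

Here, four of the six types occur only once, while a single type (the) accounts for four of the ten tokens.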

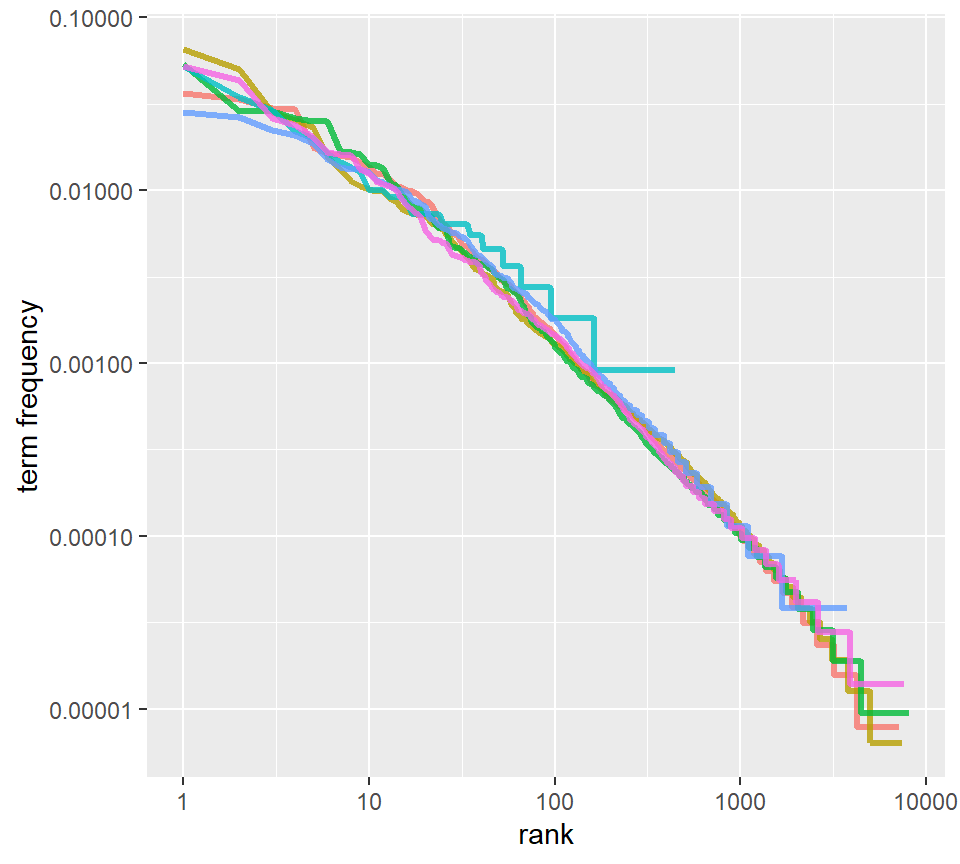

Zipf’s Law

Zipf’s law is an empirical power law that was formulated in the 1930s. It is one of the most fundamental laws in linguistics (see Zipf 1935) and states that the frequency of a word is inversely proportional to its rank in a text or collection of texts.

Let

- N be the number of distinct elements (e.g. word types) in a text (or collection of texts);

- k be the rank of an element;

- s be the value of the exponent characterizing the distribution.

Zipf’s law then predicts that out of a population of N elements, the normalized frequency of the element of rank k, f(k;s,N), is:

\[\begin{equation} f(k;s,N)={\frac {1/k^{s}}{\sum \limits _{n=1}^{N}(1/n^{s})}} \end{equation}\]
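The formula translates directly into R. The minimal sketch below (the function name zipf_freq() is our own) illustrates two of its properties. First, the normalized frequencies over all N ranks sum to 1. Second, the ratio between the frequencies of ranks 1 and k is k^s.

```r
# Normalized frequency predicted by Zipf's law for the element of rank k
# among N ranked elements with exponent s: (1 / k^s) / sum_{n=1}^{N} (1 / n^s)
zipf_freq <- function(k, s, N) {
  (1 / k^s) / sum(1 / seq_len(N)^s)
}

N <- 1000
s <- 1
f <- zipf_freq(seq_len(N), s, N)

sum(f)        # normalized frequencies over all N ranks sum to 1
f[1] / f[2]   # rank 1 is 2^s times as frequent as rank 2
f[1] / f[10]  # ... and 10^s times as frequent as rank 10
```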

In the code chunk below, we check if Zipf’s Law applies to the words that occur in texts that we have downloaded from Project Gutenberg.

freq_by_rank <- book_words %>%

dplyr::group_by(book) %>%

dplyr::mutate(rank = row_number(),

`term frequency` = n/total) %>%

dplyr::ungroup()

book | word | n | total | rank | term frequency |

|---|---|---|---|---|---|

Darwin | the | 10,301 | 157,002 | 1 | 0.0656106291640 |

Darwin | of | 7,864 | 157,002 | 2 | 0.0500885339040 |

Austen | the | 4,656 | 127,996 | 1 | 0.0363761367543 |

Darwin | and | 4,443 | 157,002 | 3 | 0.0282990025605 |

Austen | to | 4,323 | 127,996 | 2 | 0.0337744929529 |

Darwin | in | 4,017 | 157,002 | 4 | 0.0255856613292 |

Austen | of | 3,838 | 127,996 | 3 | 0.0299853120410 |

Austen | and | 3,763 | 127,996 | 4 | 0.0293993562299 |

Darwin | to | 3,613 | 157,002 | 5 | 0.0230124457013 |

Darwin | a | 2,471 | 157,002 | 6 | 0.0157386530108 |

Austen | her | 2,260 | 127,996 | 5 | 0.0176568017751 |

Austen | i | 2,095 | 127,996 | 6 | 0.0163676989906 |

Darwin | that | 2,086 | 157,002 | 7 | 0.0132864549496 |

Austen | a | 2,036 | 127,996 | 7 | 0.0159067470858 |

Austen | in | 1,991 | 127,996 | 8 | 0.0155551735992 |

To get a better understanding of Zipf’s law, let us visualize the distribution by plotting the logged rank of elements on the x-axis and the logged frequency of the terms on the y-axis. If Zipf’s law holds, we should see more or less straight lines that go from the top left to the bottom right.

freq_by_rank %>%

ggplot(aes(rank, `term frequency`, color = book)) +

geom_line(linewidth = 1.1, alpha = 0.8, show.legend = FALSE) + # linewidth replaces size for lines since ggplot2 3.4.0

scale_x_log10() +

scale_y_log10()

Zipf’s law for a sample of literary texts

The plot shows a negative slope. This corroborates the inverse relationship between rank and term frequency and indicates that the words in the texts from Project Gutenberg follow Zipf’s law. This is consistent with the texts representing natural language rather than a made-up, nonsensical language or a complex cipher.
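Because Zipf’s law becomes a straight line on log-log axes, its exponent s can be estimated as the negative slope of a linear regression of log frequency on log rank. The sketch below uses synthetic, hypothetical frequencies that follow the law exactly with s = 1, so the fitted slope should be -1.

```r
# Synthetic, hypothetical frequencies that follow Zipf's law exactly with s = 1
ranks <- 1:500
freqs <- (1 / ranks) / sum(1 / ranks)

# Taking logs turns f = C / k^s into log10(f) = log10(C) - s * log10(k),
# so regressing log frequency on log rank estimates -s as the slope
fit <- lm(log10(freqs) ~ log10(ranks))
coef(fit)[2]
```

Applied to the data above, the same idea could be written as lm(log10(`term frequency`) ~ log10(rank), data = subset(freq_by_rank, book == "Austen")). Real texts tend to deviate from a straight line at the very highest and lowest ranks, so such fits are often restricted to a middle range of ranks.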

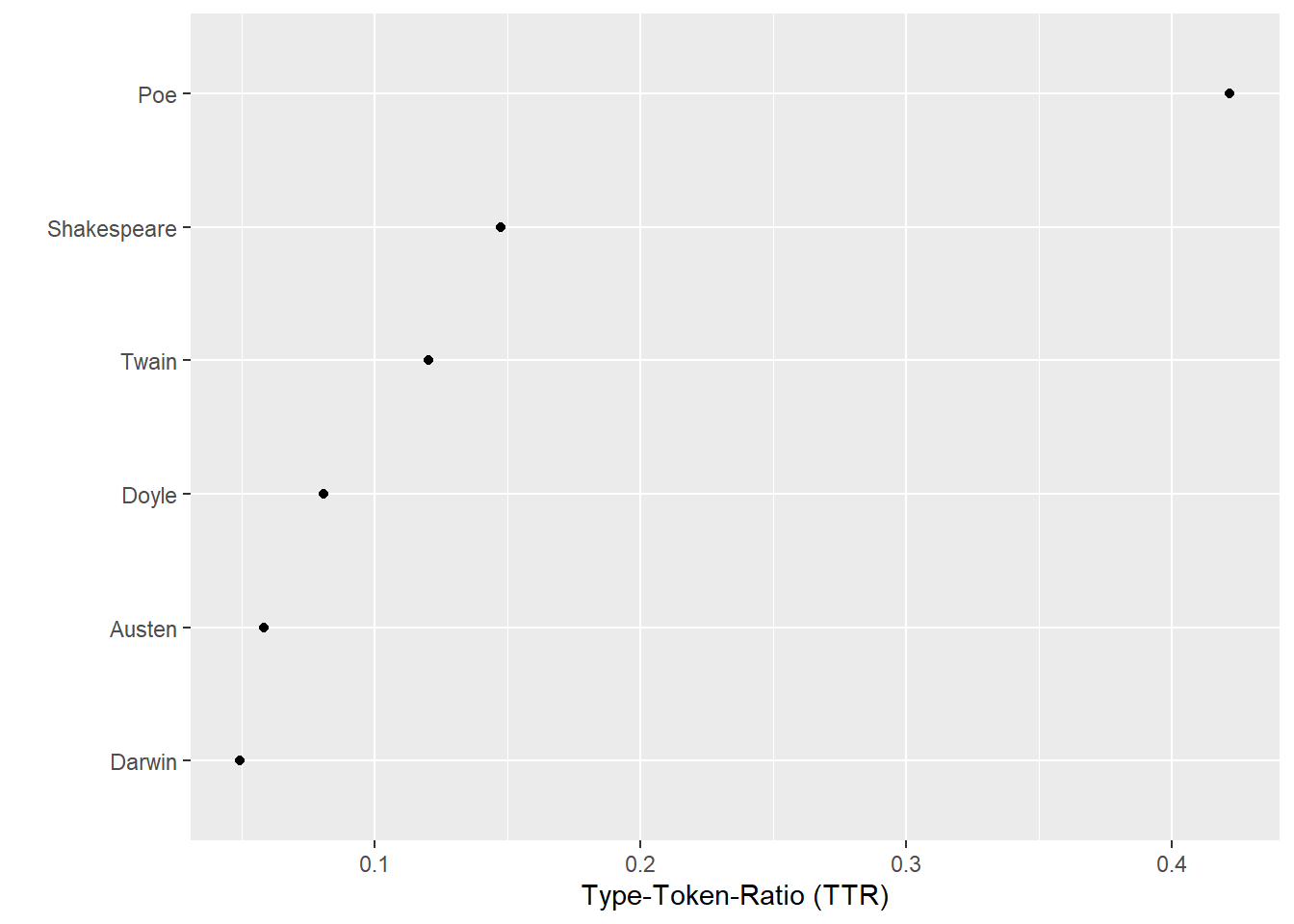

Lexical Diversity

Lexical diversity is a complexity measure that provides information about the lexicon size of a text, i.e. how many different words occur in a text given the size of the text. The Type-Token-Ratio (TTR) is the number of different word types (word forms) divided by the number of word tokens (individual instances of a word). Let’s look a bit more closely at the terms types and tokens. The sentence The dog chased the cat contains five tokens but only four types, because the occurs twice. A text that is 100 words long and consists of 50 distinct words has a TTR of .5 (50/100), while a text that is 100 words long but consists of 80 distinct words has a TTR of .8 (80/100). Higher values thus indicate higher lexical diversity. More complex texts and more advanced learners of a language commonly have higher TTRs than simpler texts and less advanced learners.

As such, we can use lexical diversity measures to analyze the complexity of the language in which a text is written. This can also be used to track the progress a learner makes when acquiring a language. Initially, the learner will have a low TTR because they do not yet have a large vocabulary, and their TTRs will increase as their lexicon grows.
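The definition can be made concrete with a small base-R function. This is a minimal sketch: the tokenization (lower-casing and splitting on non-alphanumeric characters) is a simplifying assumption, and the function name ttr() is our own. It reproduces the example sentence above.

```r
# Type-token ratio: number of distinct word types / number of word tokens.
# Tokenization (lower-casing, splitting on non-alphanumeric characters) is a simplification.
ttr <- function(text) {
  tokens <- strsplit(tolower(text), "[^[:alnum:]]+")[[1]]
  tokens <- tokens[tokens != ""]
  length(unique(tokens)) / length(tokens)
}

ttr("The dog chased the cat")   # 4 types / 5 tokens = 0.8
```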

In the following example, we calculate the TTRs for the literary texts we have downloaded from Project Gutenberg. In a first step, we tokenize the texts, i.e. we split the texts into individual words (tokens).

books_texts <- books %>%

dplyr::group_by(book) %>%

dplyr::summarise(text = paste(text, collapse = " "))

texts <- books_texts %>%

dplyr::pull(text)

names(texts) <- books_texts %>%

dplyr::pull(book)

tokens_texts <- texts %>%

quanteda::corpus() %>%

quanteda::tokens()

# inspect data

head(tokens_texts)

## Tokens consisting of 4 documents.

## Austen :

## [1] "[" "Illustration" ":" "GEORGE" "ALLEN"

## [6] "PUBLISHER" "156" "CHARING" "CROSS" "ROAD"

## [11] "LONDON" "RUSKIN"

## [ ... and 152,253 more ]

##

## Darwin :

## [1] "Click" "on" "any" "of" "the"

## [6] "filenumbers" "below" "to" "quickly" "view"

## [11] "each" "ebook"

## [ ... and 176,904 more ]

##

## Poe :

## [1] "The" "Raven" "by" "Edgar" "Allan" "Poe"

## [7] "Once" "upon" "a" "midnight" "dreary" ","

## [ ... and 1,327 more ]

##

## Shakespeare :

## [1] "THE" "TRAGEDY" "OF" "ROMEO" "AND"

## [6] "JULIET" "by" "William" "Shakespeare" "Contents"

## [11] "THE" "PROLOGUE"

## [ ... and 32,877 more ]

Next, we calculate the TTR using the textstat_lexdiv function from the quanteda.textstats package and visualize the resulting TTRs for the literary texts that we have downloaded from Project Gutenberg.

dfm(tokens_texts) %>%

quanteda.textstats::textstat_lexdiv(measure = "TTR") %>%

ggplot(aes(x = TTR, y = reorder(document, TTR))) +

geom_point() +

xlab("Type-Token-Ratio (TTR)") +

ylab("")

We can see that Darwin’s On the Origin of Species has the lowest lexical diversity, while Edgar Allan Poe’s The Raven has the highest. Taken at face value, this would suggest that the language in The Raven is more complex than the language of On the Origin of Species. However, this interpretation is too simplistic, because The Raven is by far the shortest of the texts. Simple Type-Token Ratios are severely affected by text length (as well as by orthographic errors), because the number of new word types grows much more slowly than the number of tokens. TTRs should therefore only be used to compare texts

- that are comparatively long (at least 200 words)

- that are approximately of the same length

- that were error corrected so that orthographic errors do not confound the ratios
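One commonly used way to reduce the length effect is the moving-average type-token ratio (MATTR). MATTR computes the TTR within every window of w consecutive tokens and averages the results. The base-R sketch below is a minimal illustration; the function name mattr() and the toy token vectors are hypothetical. It shows that repeating the same material lowers the plain TTR sharply but leaves MATTR unchanged.

```r
# Moving-average type-token ratio (MATTR): compute the TTR within every window of
# w consecutive tokens and average the results, so that longer texts are not penalized.
mattr <- function(tokens, w = 50) {
  n <- length(tokens)
  if (n < w) return(length(unique(tokens)) / n)  # text shorter than one window: plain TTR
  window_ttr <- vapply(seq_len(n - w + 1),
                       function(i) length(unique(tokens[i:(i + w - 1)])) / w,
                       numeric(1))
  mean(window_ttr)
}

short_text <- c("a", "b", "a", "b")
long_text  <- rep(short_text, 50)   # same alternation, 50 times longer

length(unique(short_text)) / length(short_text)  # plain TTR: 0.5
length(unique(long_text)) / length(long_text)    # plain TTR: 0.01
mattr(short_text, w = 3)                         # 2/3
mattr(long_text, w = 3)                          # 2/3
```

The window size is a free parameter. Texts should only be compared using the same w, and w should be smaller than the shortest text.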

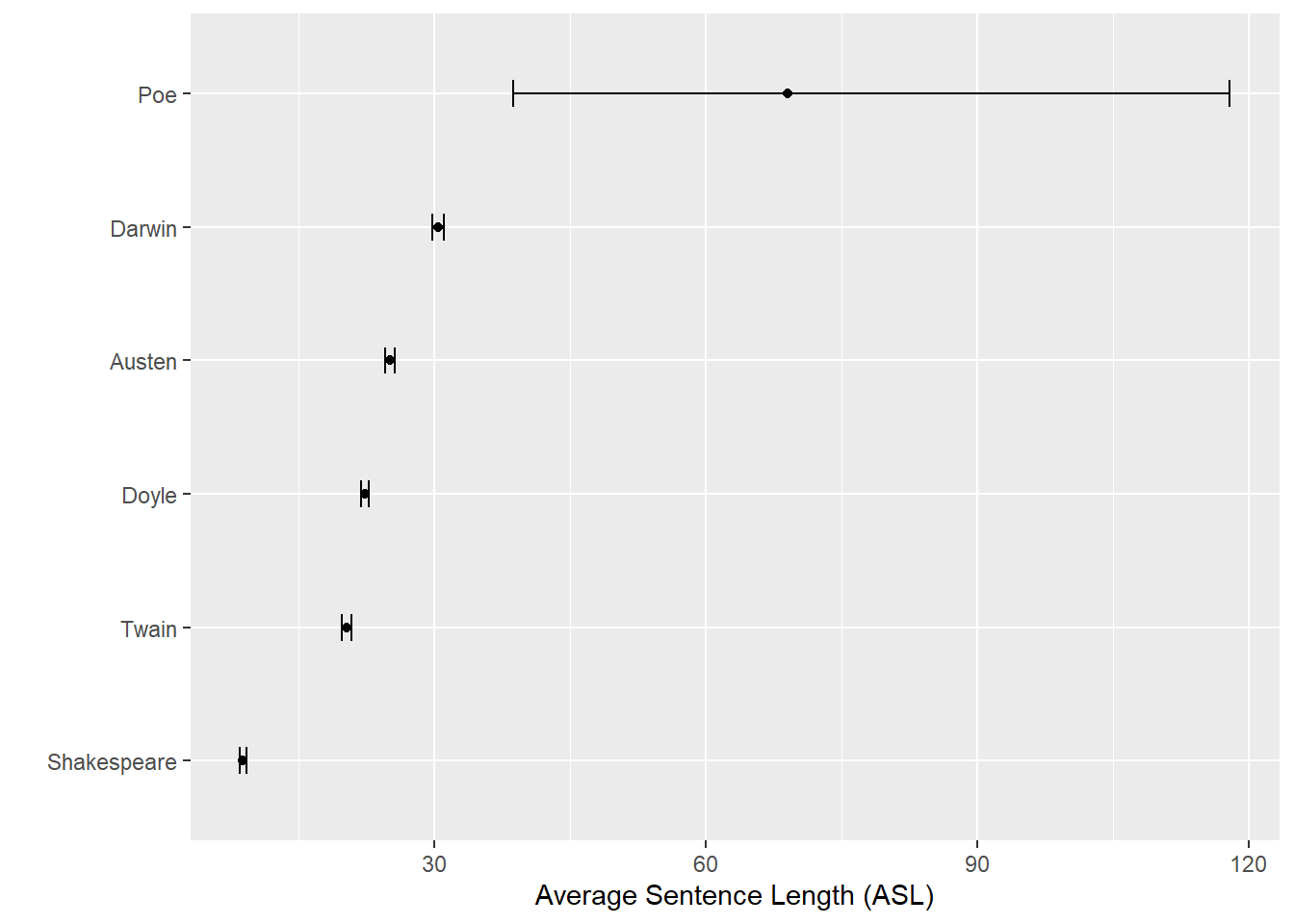

Average Sentence Length

The average sentence length (ASL) is another measure of textual complexity, because more sophisticated language use is associated with longer and more complex sentences. As such, we can use the ASL as an alternative measure of the linguistic complexity of a text or texts.
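In principle, the ASL only requires two steps: splitting a text into sentences and counting the words in each. The base-R sketch below uses a naive splitter that breaks after ., !, or ? followed by whitespace, and the function name asl() is our own. Such a splitter wrongly breaks after abbreviations. The sentence table below shows the same weakness when lexRankr splits after Mrs.

```r
# Average sentence length: split a text into sentences after ., ! or ? followed by
# whitespace, count the words in each sentence, and average the counts.
asl <- function(text) {
  sentences <- unlist(strsplit(text, "(?<=[.!?])\\s+", perl = TRUE))
  words_per_sentence <- lengths(regmatches(sentences, gregexpr("\\w+", sentences)))
  mean(words_per_sentence)
}

asl("The dog barked. The cat ran away!")  # (3 + 4) / 2 = 3.5
```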

library(lexRankr)

books_sentences <- books %>%

dplyr::group_by(book) %>%

dplyr::summarise(text = paste(text, collapse = " ")) %>%

lexRankr::unnest_sentences(sentence, text)

book | sent_id | sentence |

|---|---|---|

Austen | 1 | [Illustration: GEORGE ALLEN PUBLISHER 156 CHARING CROSS ROAD LONDON RUSKIN HOUSE ] [Illustration: _Reading Jane’s Letters._ _Chap 34._ ] PRIDE. |

Austen | 2 | and PREJUDICE by Jane Austen, with a Preface by George Saintsbury and Illustrations by Hugh Thomson [Illustration: 1894] Ruskin 156. |

Austen | 3 | Charing House. |

Austen | 4 | Cross Road. |

Austen | 5 | London George Allen. |

Austen | 6 | CHISWICK PRESS:--CHARLES WHITTINGHAM AND CO. |

Austen | 7 | TOOKS COURT, CHANCERY LANE, LONDON. |

Austen | 8 | [Illustration: _To J. |

Austen | 9 | Comyns Carr in acknowledgment of all I owe to his friendship and advice, these illustrations are gratefully inscribed_ _Hugh Thomson_ ] PREFACE. |

Austen | 10 | [Illustration] _Walt Whitman has somewhere a fine and just distinction between “loving by allowance” and “loving with personal love.” This distinction applies to books as well as to men and women; and in the case of the not very numerous authors who are the objects of the personal affection, it brings a curious consequence with it. |

Austen | 11 | There is much more difference as to their best work than in the case of those others who are loved “by allowance” by convention, and because it is felt to be the right and proper thing to love them. |

Austen | 12 | And in the sect--fairly large and yet unusually choice--of Austenians or Janites, there would probably be found partisans of the claim to primacy of almost every one of the novels. |

Austen | 13 | To some the delightful freshness and humour of_ Northanger Abbey, _its completeness, finish, and_ entrain, _obscure the undoubted critical facts that its scale is small, and its scheme, after all, that of burlesque or parody, a kind in which the first rank is reached with difficulty._ Persuasion, _relatively faint in tone, and not enthralling in interest, has devotees who exalt above all the others its exquisite delicacy and keeping. |

Austen | 14 | The catastrophe of_ Mansfield Park _is admittedly theatrical, the hero and heroine are insipid, and the author has almost wickedly destroyed all romantic interest by expressly admitting that Edmund only took Fanny because Mary shocked him, and that Fanny might very likely have taken Crawford if he had been a little more assiduous; yet the matchless rehearsal-scenes and the characters of Mrs. |

Austen | 15 | Norris and others have secured, I believe, a considerable party for it._ Sense and Sensibility _has perhaps the fewest out-and-out admirers; but it does not want them._ _I suppose, however, that the majority of at least competent votes would, all things considered, be divided between_ Emma _and the present book; and perhaps the vulgar verdict (if indeed a fondness for Miss Austen be not of itself a patent of exemption from any possible charge of vulgarity) would go for_ Emma. |

Let’s now visualize the results for potential differences or trends.

books_sentences %>%

dplyr::mutate(sentlength = stringr::str_count(sentence, '\\w+')) %>%

ggplot(aes(x = sentlength, y = reorder(book, sentlength, mean), group = book)) +

stat_summary(fun = mean, geom = "point") +

stat_summary(fun.data = mean_cl_boot, geom = "errorbar", width = 0.2) +

xlab("Average Sentence Length (ASL)") +

ylab("")

We can see that The Raven (a poem in which sentence-final punctuation is comparatively rare) is, unsurprisingly, the text with the longest ASL. Shakespeare’s play Romeo and Juliet, which contains a lot of dialogue, has the shortest ASL. With the exception of Poe’s The Raven, the ASL results reflect text complexity. Darwin’s On the Origin of Species is more complex, or more written-like, than the other texts, and Romeo and Juliet is the most similar to spoken dialogue.

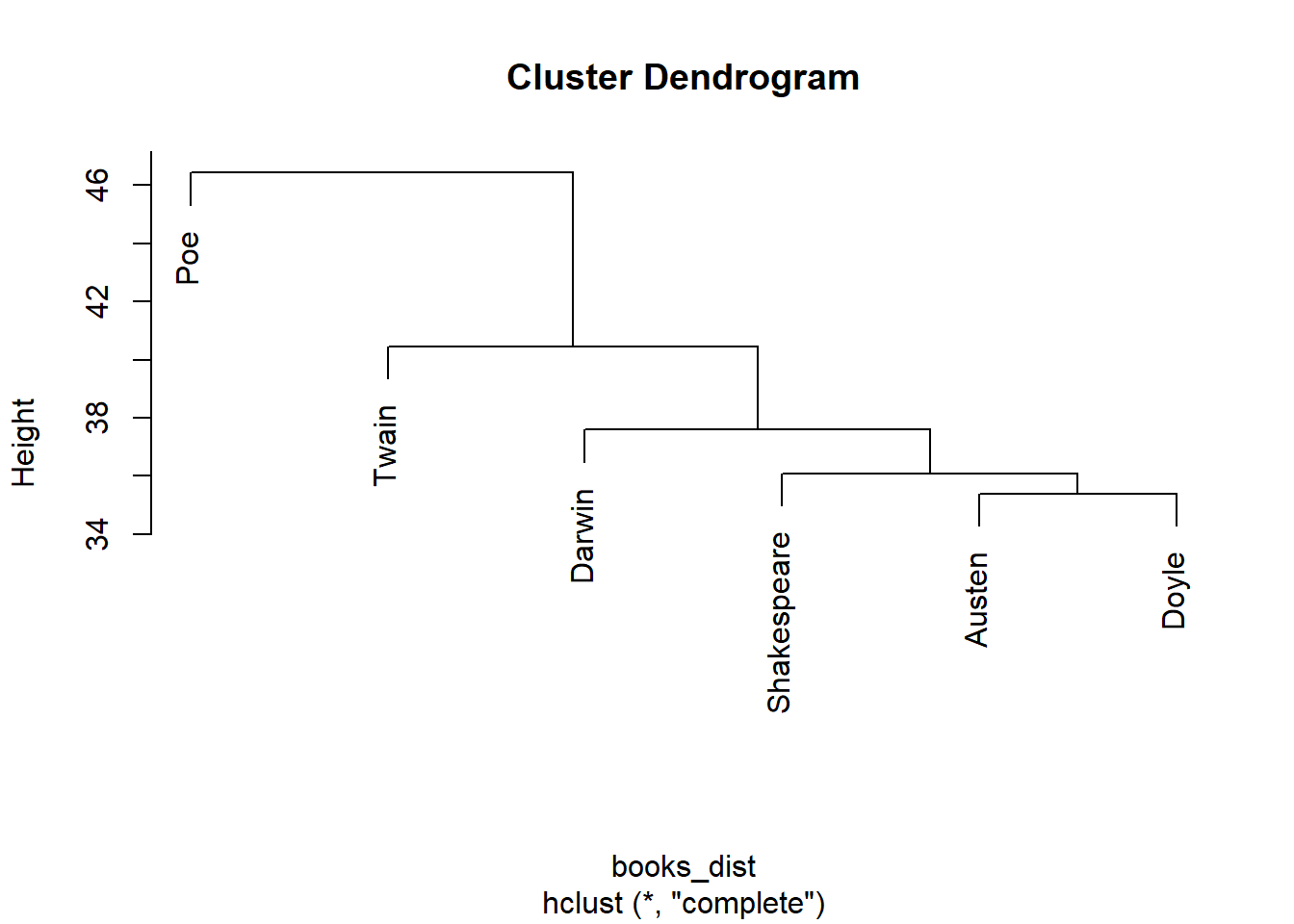

Similarity among literary texts

We will now explore how similar the language of literary works is. This approach can, of course, be extended to syntactic features. It can also be used, for instance, to determine whether certain texts belong to a certain literary genre or were written by a certain author. When extending this approach to syntactic features, one would naturally base the similarity on features such as the ASL, TTRs, or the frequency of adjectives. For authorship, features such as bigrams, spacing, or punctuation habits would be relevant.

To assess the similarity of literary works based on the words that occur in them, we first randomly sample 100 lines from each text. We then create a document-feature matrix, i.e. a matrix of word frequencies. In the present case, we remove stop words as well as punctuation and symbols (it can be useful to retain these, but this depends on the task at hand).
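A document-feature matrix is just a table of counts: one row per document, one column per word, and each cell holding how often that word occurs in that document. The base-R sketch below builds one by hand for a hypothetical two-document corpus with a tiny illustrative stop-word list. It approximates what quanteda::dfm() produces, but is not its implementation.

```r
# Hypothetical mini-corpus with one string per document
texts <- c(austen = "pride and prejudice and pride",
           darwin = "natural selection and the species")

stop_words <- c("and", "the", "of")  # tiny illustrative stop-word list

# tokenize on whitespace and drop stop words
tokens <- lapply(strsplit(tolower(texts), "\\s+"), function(x) x[!x %in% stop_words])
names(tokens) <- names(texts)
vocab <- sort(unique(unlist(tokens)))

# rows = documents, columns = features, cells = term frequencies
dfm_manual <- t(sapply(tokens, function(x) as.vector(table(factor(x, levels = vocab)))))
colnames(dfm_manual) <- vocab
dfm_manual
```

Most cells are 0 because each document uses only a small part of the shared vocabulary. This is why quanteda reports real document-feature matrices as highly sparse.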

books %>%

dplyr::group_by(book) %>%

dplyr::sample_n(100) %>%

dplyr::summarise(text = paste0(text, collapse = " ")) %>%

dplyr::ungroup() %>%

quanteda::corpus(text_field = "text", docid_field = "book") %>%

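# remove punctuation and symbols at the tokenization stage; passing these arguments to dfm() is deprecated and may be ignored

quanteda::tokens(remove_punct = TRUE, remove_symbols = TRUE) %>%

quanteda::dfm() %>%

quanteda::dfm_remove(pattern = quanteda::stopwords("en")) -> feature_mat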

# inspect data

feature_mat

## Document-feature matrix of: 4 documents, 1,240 features (71.17% sparse) and 0 docvars.

## features

## docs room near relation patroness . happened overhear depend upon ,

## Austen 2 1 1 1 52 1 1 2 2 86

## Darwin 0 0 0 0 35 0 0 0 0 72

## Poe 0 0 0 0 21 0 0 0 5 95

## Shakespeare 0 0 0 0 64 0 0 0 2 66

## [ reached max_nfeat ... 1,230 more features ]

The texts form the rows of the matrix (documents) and the terms form its columns (features). Each cell contains the frequency of a term in a text.
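A toy version of this pipeline makes the structure of a document-feature matrix easier to see. This is a minimal sketch using two invented sentences. Punctuation and symbols are removed when tokenizing, and stop words are removed from the matrix.

```r
library(quanteda)

# two invented toy documents
toy <- c(doc1 = "The cat sat on the mat.", doc2 = "The dog sat on the log!")

# tokenize and drop punctuation and symbols at the tokens stage
toy_toks <- tokens(toy, remove_punct = TRUE, remove_symbols = TRUE)

# build the document-feature matrix (lowercased by default) and drop English stop words
toy_dfm <- dfm_remove(dfm(toy_toks), pattern = stopwords("en"))

# rows are documents, columns are features, cells are term frequencies
toy_dfm
# total frequency of each feature across both documents
colSums(toy_dfm)
```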

We can now perform agglomerative hierarchical clustering and visualize the results in a dendrogram to assess the similarity of texts.

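# compute pairwise distances between the texts (Euclidean by default)
books_dist <- as.dist(quanteda.textstats::textstat_dist(feature_mat))
# agglomerative hierarchical clustering (complete linkage by default)
books_clust <- hclust(books_dist)
# plot the dendrogram
plot(books_clust)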

In the dendrogram, texts that are merged close to the leaves are more similar to each other than texts that are merged only near the root. Edgar Allan Poe’s The Raven is the most idiosyncratic text. It sits on a branch of its own and is merged with the other texts only in the very last step, at the root of the tree. The exact clustering depends on the random sample of lines drawn from each book.

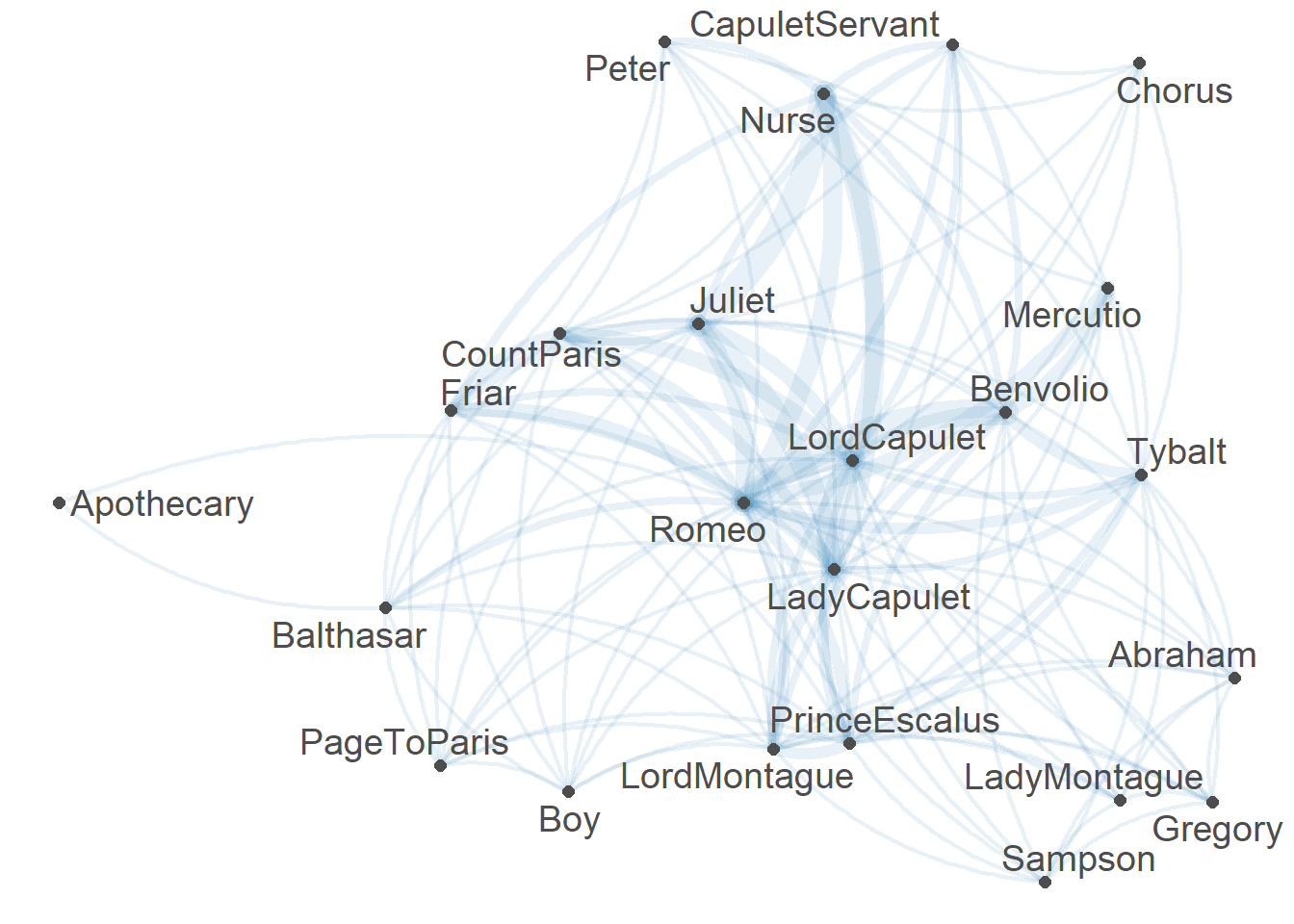
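The following minimal base-R sketch shows what the distance and clustering steps compute, using an invented frequency matrix for three documents. It uses stats::dist(), which, like textstat_dist(), computes Euclidean distances by default. It then clusters with hclust() and its default complete linkage.

```r
# invented term frequencies for three toy documents
m <- rbind(A = c(cat = 2, dog = 0, sat = 1),
           B = c(cat = 2, dog = 1, sat = 1),
           C = c(cat = 0, dog = 5, sat = 0))

# Euclidean distance: square root of the summed squared frequency differences
d <- dist(m)
d
# A-B: sqrt(0 + 1 + 0) = 1; A-C: sqrt(4 + 25 + 1) = sqrt(30); B-C: sqrt(4 + 16 + 1) = sqrt(21)

# complete linkage: the distance between two clusters is their largest pairwise distance
hc <- hclust(d)
hc$merge   # A and B are merged first, then C joins them at the root
hc$height  # 1 and sqrt(30), i.e. about 5.48
plot(hc)
```

Euclidean distance on raw counts is sensitive to text length. Comparing samples of similar size, or converting counts to relative frequencies (e.g. with quanteda::dfm_weight(scheme = "prop")), keeps length from dominating the result.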

Networks of Personas

A final procedure we will perform is a network analysis of the personas in Shakespeare’s Romeo and Juliet. We directly load a co-occurrence matrix that records how often the characters in the play appear in the same scene. Extracting this information is a bit cumbersome, so this has already been done for you, and you can simply load the matrix into R.
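One way to derive such a matrix, though not necessarily how romeo.txt was built, is to record which characters appear in each scene. Multiplying the transpose of this scene-by-character incidence matrix by the matrix itself then gives the co-occurrence counts. The sketch below uses invented scene assignments for three characters.

```r
# invented scene-by-character incidence matrix: 1 = character appears in the scene
scenes <- rbind(s1 = c(Romeo = 1, Benvolio = 1, Juliet = 0),
                s2 = c(Romeo = 1, Benvolio = 0, Juliet = 1),
                s3 = c(Romeo = 1, Benvolio = 1, Juliet = 0))

# crossprod(scenes) equals t(scenes) %*% scenes:
# off-diagonal cells count shared scenes,
# diagonal cells count the scenes in which each character appears
cooc <- crossprod(scenes)
cooc
```

A matrix built this way can be converted with quanteda::as.fcm(), just like the loaded one.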

# load data

romeo <- read.delim("https://slcladal.github.io/data/romeo.txt", sep = "\t")

# convert into feature co-occurrence matrix

romeo_fcm <- quanteda::as.fcm(as.matrix(romeo))

# inspect data

romeo_fcm

## Feature co-occurrence matrix of: 23 by 23 features.

## features

## features Abraham Benvolio LordCapulet Gregory LadyCapulet LadyMontague

## Abraham 1 1 1 1 1 1

## Benvolio 1 7 3 1 2 1

## LordCapulet 1 3 9 1 7 1

## Gregory 1 1 1 1 1 1

## LadyCapulet 1 2 7 1 10 1

## LadyMontague 1 1 1 1 1 1

## LordMontague 1 2 2 1 3 1

## PrinceEscalus 1 2 2 1 3 1

## Romeo 1 7 5 1 4 1

## Sampson 1 1 1 1 1 1

## features

## features LordMontague PrinceEscalus Romeo Sampson

## Abraham 1 1 1 1

## Benvolio 2 2 7 1

## LordCapulet 2 2 5 1

## Gregory 1 1 1 1

## LadyCapulet 3 3 4 1

## LadyMontague 1 1 1 1

## LordMontague 3 3 3 1

## PrinceEscalus 3 3 3 1

## Romeo 3 3 14 1

## Sampson 1 1 1 1

## [ reached max_feat ... 13 more features, reached max_nfeat ... 13 more features ]

The quanteda.textplots package (an extension of quanteda) provides a neat, easy-to-use function for generating network graphs, textplot_network. We can therefore use this function to generate the network directly.

quanteda.textplots::textplot_network(romeo_fcm, min_freq = 0.1, edge_alpha = 0.1, edge_size = 5)

The thickness of the lines indicates how often characters co-occur. We could now generate network graphs for the personas in different plays to see how these plays and personas differ. Alternatively, we could apply network analysis to other types of information, such as co-occurrences of words.
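The following minimal sketch applies network analysis to word co-occurrences. It counts words that occur within a moving window using quanteda's fcm() and plots the result with the same textplot_network() function. The input is the opening of the play's prologue. The window size of 2 and the plotting parameters are arbitrary choices.

```r
library(quanteda)
library(quanteda.textplots)

# opening lines of the prologue of Romeo and Juliet
prologue <- paste("Two households, both alike in dignity,",
                  "In fair Verona, where we lay our scene,",
                  "From ancient grudge break to new mutiny,",
                  "Where civil blood makes civil hands unclean.")

# tokenize, lowercase, and remove punctuation and English stop words
# (removed words leave no gap, so their neighbours become adjacent)
prologue_toks <- tokens(prologue, remove_punct = TRUE)
prologue_toks <- tokens_tolower(prologue_toks)
prologue_toks <- tokens_remove(prologue_toks, stopwords("en"))

# count how often words occur within 2 tokens of each other
word_fcm <- fcm(prologue_toks, context = "window", window = 2)

# plot the word co-occurrence network
word_net <- textplot_network(word_fcm, min_freq = 0.1, edge_alpha = 0.5)
word_net
```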

We have reached the end of this tutorial. Please feel free to explore more of our content at https://slcladal.github.io/index.html. For computational literary stylistics, the tutorials on part-of-speech tagging and syntactic parsing and on lexicography with R are especially relevant.

Citation & Session Info

Majumdar, Dattatreya and Martin Schweinberger. 2024. Literary Stylistics with R. Brisbane: The University of Queensland. url: https://slcladal.github.io/litsty.html (Version 2024.11.08).

@manual{Majumdar2024ta,

author = {Majumdar, Dattatreya and Martin Schweinberger},

title = {Literary Stylistics with R},

note = {https://slcladal.github.io/litsty.html},

year = {2024},

organization = {The University of Queensland, Australia. School of Languages and Cultures},

address = {Brisbane},

edition = {2024.11.08}

}

sessionInfo()

## R version 4.4.1 (2024-06-14 ucrt)

## Platform: x86_64-w64-mingw32/x64

## Running under: Windows 11 x64 (build 22631)

##

## Matrix products: default

##

##

## locale:

## [1] LC_COLLATE=English_Australia.utf8 LC_CTYPE=English_Australia.utf8

## [3] LC_MONETARY=English_Australia.utf8 LC_NUMERIC=C

## [5] LC_TIME=English_Australia.utf8

##

## time zone: Australia/Brisbane

## tzcode source: internal

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] lexRankr_0.5.2 flextable_0.9.7 gutenbergr_0.2.4 quanteda_4.1.0

## [5] tidytext_0.4.2 janeaustenr_1.0.0 lubridate_1.9.3 forcats_1.0.0

## [9] stringr_1.5.1 dplyr_1.1.4 purrr_1.0.2 readr_2.1.5

## [13] tidyr_1.3.1 tibble_3.2.1 ggplot2_3.5.1 tidyverse_2.0.0

##

## loaded via a namespace (and not attached):

## [1] tidyselect_1.2.1 farver_2.1.2

## [3] fastmap_1.2.0 lazyeval_0.2.2

## [5] fontquiver_0.2.1 rpart_4.1.23

## [7] digest_0.6.37 timechange_0.3.0

## [9] lifecycle_1.0.4 cluster_2.1.6

## [11] tokenizers_0.3.0 magrittr_2.0.3

## [13] compiler_4.4.1 rlang_1.1.4

## [15] Hmisc_5.2-0 sass_0.4.9

## [17] tools_4.4.1 utf8_1.2.4

## [19] yaml_2.3.10 sna_2.8

## [21] data.table_1.16.2 knitr_1.48

## [23] htmlwidgets_1.6.4 askpass_1.2.1

## [25] labeling_0.4.3 stopwords_2.3

## [27] bit_4.5.0 xml2_1.3.6

## [29] klippy_0.0.0.9500 foreign_0.8-87

## [31] withr_3.0.2 nnet_7.3-19

## [33] grid_4.4.1 fansi_1.0.6

## [35] gdtools_0.4.0 colorspace_2.1-1

## [37] scales_1.3.0 cli_3.6.3

## [39] rmarkdown_2.28 crayon_1.5.3

## [41] ragg_1.3.3 generics_0.1.3

## [43] rstudioapi_0.17.1 tzdb_0.4.0

## [45] quanteda.textplots_0.95 cachem_1.1.0

## [47] network_1.18.2 assertthat_0.2.1

## [49] parallel_4.4.1 proxyC_0.4.1

## [51] base64enc_0.1-3 vctrs_0.6.5

## [53] Matrix_1.7-1 jsonlite_1.8.9

## [55] fontBitstreamVera_0.1.1 hms_1.1.3

## [57] ggrepel_0.9.6 bit64_4.5.2

## [59] htmlTable_2.4.3 Formula_1.2-5

## [61] systemfonts_1.1.0 jquerylib_0.1.4

## [63] quanteda.textstats_0.97.2 glue_1.8.0

## [65] statnet.common_4.10.0 stringi_1.8.4

## [67] gtable_0.3.6 munsell_0.5.1

## [69] pillar_1.9.0 htmltools_0.5.8.1

## [71] openssl_2.2.2 R6_2.5.1

## [73] textshaping_0.4.0 vroom_1.6.5

## [75] evaluate_1.0.1 lattice_0.22-6

## [77] highr_0.11 backports_1.5.0

## [79] SnowballC_0.7.1 fontLiberation_0.1.0

## [81] bslib_0.8.0 Rcpp_1.0.13-1

## [83] zip_2.3.1 uuid_1.2-1

## [85] fastmatch_1.1-4 coda_0.19-4.1

## [87] checkmate_2.3.2 gridExtra_2.3

## [89] nsyllable_1.0.1 officer_0.6.7

## [91] xfun_0.49 pkgconfig_2.0.3

References

If you want to render the R Notebook on your machine, i.e. knit the document to HTML or PDF, you need to have R and RStudio installed. You also need to download the bibliography file and store it in the same folder as the Rmd file.