Concordancing with R

Martin Schweinberger

Introduction

This tutorial introduces how to extract concordances and keyword-in-context (KWIC) displays with R using the quanteda package (Benoit et al. 2018).

Please cite as:

Schweinberger, Martin. 2024. Concordancing with R. Brisbane: The Language Technology and Data Analysis Laboratory (LADAL). url: https://ladal.edu.au/kwics.html (Version 2024.05.07).

This tutorial is aimed at beginners and intermediate users of R. It showcases how to extract words and phrases from textual data and how to process the resulting concordances using R. The aim is not to provide a fully-fledged analysis but rather to exemplify selected useful methods associated with concordancing.

To be able to follow this tutorial, we suggest you check out and familiarize yourself with the content of the following R Basics tutorials:

- Getting started with R

- Loading, saving, and generating data in R

- String Processing in R

- Regular Expressions in R

Concordancing is a fundamental tool in language sciences, involving the extraction of words from a given text or texts (Lindquist 2009, 5). Typically, these extractions are displayed through keyword-in-context displays (KWICs), where the search term, also referred to as the node word, is shown within its surrounding context, comprising both preceding and following words. Concordancing is a cornerstone of textual analysis and often the initial step towards more intricate examinations of language data (Stefanowitsch 2020). The significance of concordances lies in their capacity to provide insights into how words or phrases are used, how frequently they occur, and in which contexts they appear. By facilitating the examination of a word or phrase’s contextual usage and offering frequency data, concordances enable researchers to investigate collocations or the collocational profiles of words and phrases (Stefanowitsch 2020, 50–51).

Examples of Concordancing Applications:

Linguistic Research: Linguists often use concordances to explore the usage patterns of specific words or phrases in different contexts. For instance, researchers might use concordancing to analyze the various meanings and connotations of a polysemous word across different genres of literature.

Language Teaching: Concordancing can be a valuable tool in language teaching. Educators can create concordances to illustrate how vocabulary words are used in authentic contexts, helping students grasp their usage nuances and collocational patterns.

Stylistic Analysis: Literary scholars use concordances to conduct stylistic analyses of texts. By examining how certain words or phrases are employed within a literary work, researchers can gain insights into the author’s writing style, thematic concerns, and narrative techniques.

Translation Studies: Concordancing is also applied in translation studies to analyze the usage of specific terms or expressions in source and target languages. Translators use concordances to identify appropriate equivalents and ensure accurate translation of idiomatic expressions and collocations.

Lexicography: Lexicographers employ concordances to compile and refine dictionaries. By examining the contexts in which words appear, lexicographers can identify common collocations and usage patterns, informing the creation of more comprehensive and accurate lexical entries.

These examples underscore the usefulness of concordancing across various disciplines within the language sciences, showcasing its role in facilitating nuanced analyses and insights into language usage.

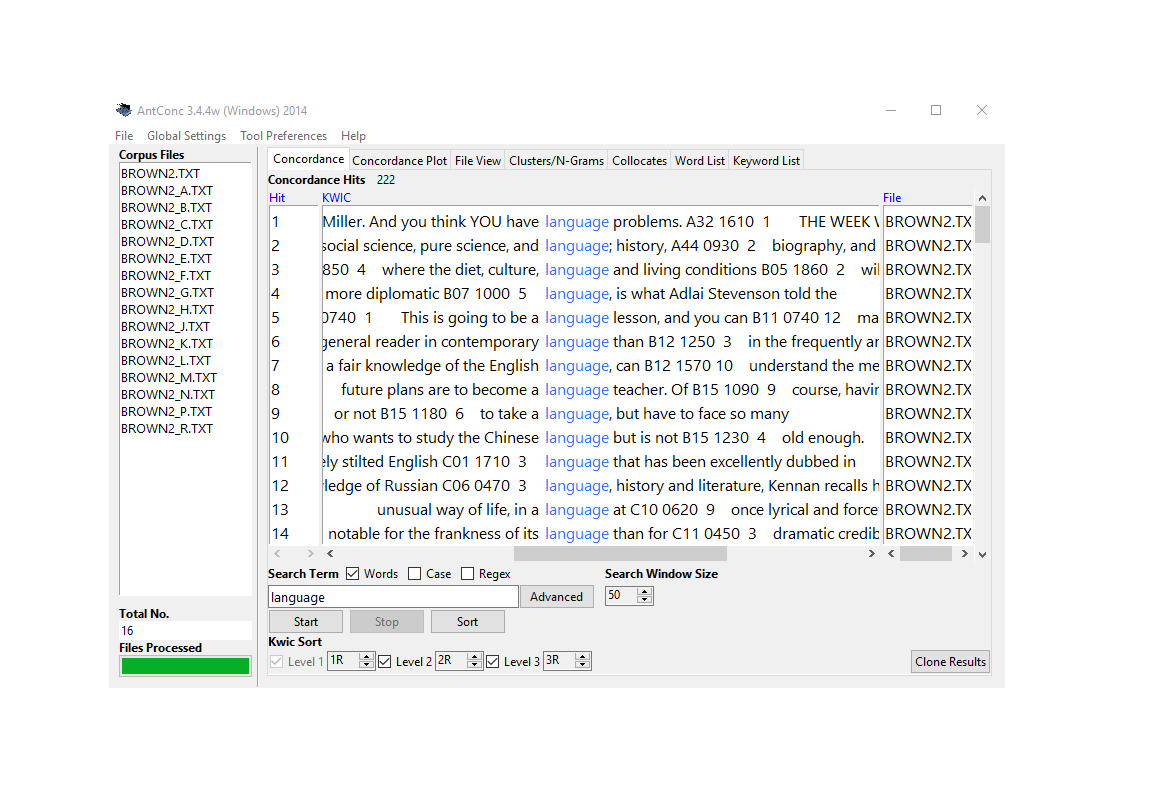

Concordances in AntConc.

There are various very good software packages that can be used to create concordances, for both offline and online use. There are, for example:

- AntConc (Anthony 2004)

- SketchEngine (Kilgarriff et al. 2004)

- MONOCONC (Barlow 1999)

- ParaConc (Barlow 2002)

- Web Concordancers

- CorpusMate (Crosthwaite and Baisa 2024)

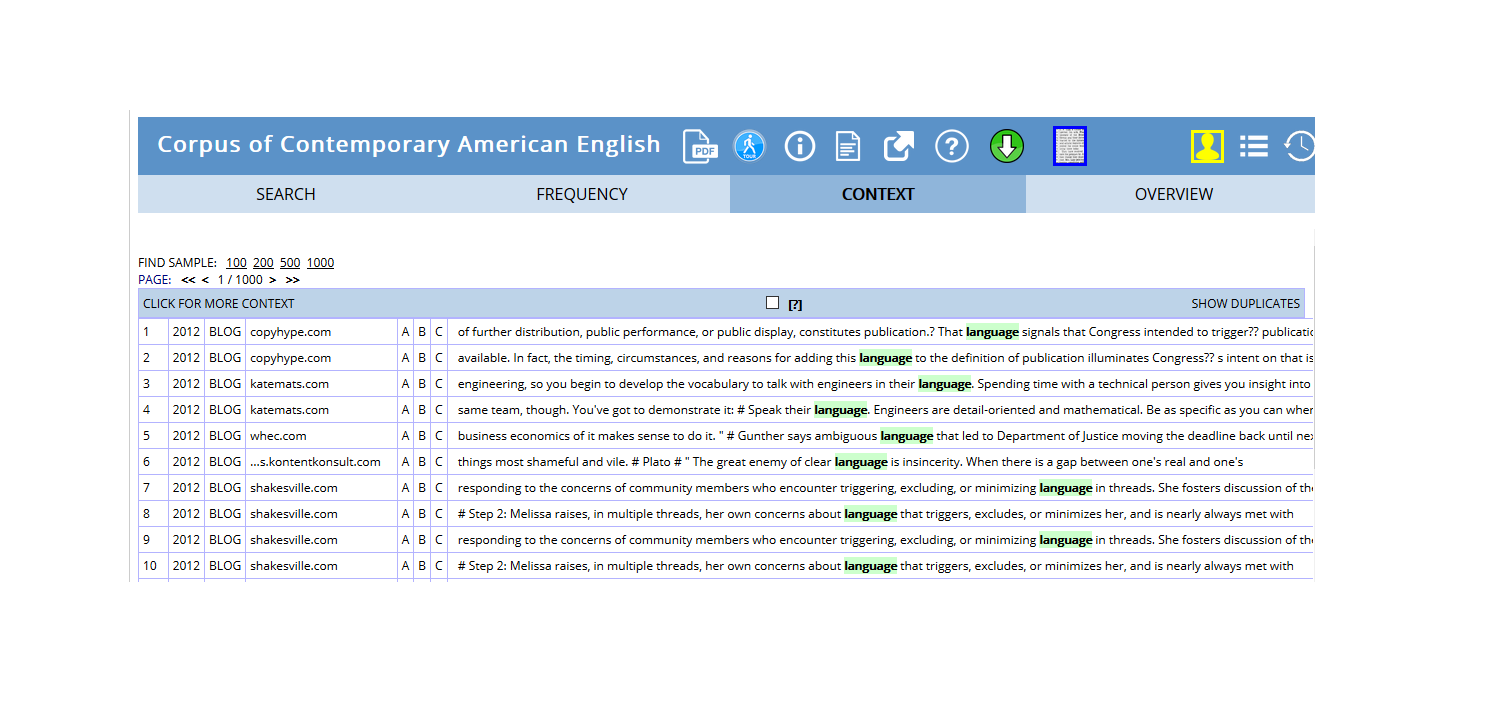

In addition, many corpora that are available such as the BYU corpora can be accessed via a web interface that have in-built concordancing functions.

Online concordances extracted from the COCA corpus that is part of the BYU corpora.

While many concordance software packages boast user-friendly interfaces and additional functionalities, their inherent limitations make R a compelling alternative. These shortcomings include:

Lack of Transparency: Traditional concordance software often operates as black boxes, leaving researchers without full control or insight into the inner workings of the software. This opacity can compromise the reproducibility and transparency of analyses, as researchers are unable to fully understand or document the analytical process.

Closed Source: The proprietary nature of many concordance software packages restricts access to their source code, hindering collaborative development and innovation within the research community. In contrast, R’s open-source framework encourages transparency, collaboration, and customization, empowering researchers to tailor analyses to their specific needs.

Replication Challenges: Replicating analyses conducted using traditional concordance software can be time-consuming and labor-intensive, particularly when compared to analyses documented in Notebooks. R’s integrated scripting environment facilitates reproducibility by allowing researchers to document their entire workflow in a clear, executable format, streamlining the replication process and enhancing research transparency.

Cost and Restrictions: Many concordance software packages are not free-of-charge or impose usage restrictions, limiting accessibility for researchers with budget constraints or specific licensing requirements. While exceptions like AntConc exist, the prevalence of cost barriers or usage restrictions underscores the importance of open-source alternatives like R, which offer greater accessibility and flexibility for researchers across diverse settings.

By addressing these key limitations, R emerges as a robust and versatile platform for concordancing, offering researchers greater transparency, flexibility, and accessibility. As such, R offers a compelling alternative to off-the-shelf concordancing applications for several reasons:

Flexibility: R provides unparalleled flexibility, allowing researchers to conduct their entire analysis within a single environment. This versatility enables users to tailor their analysis precisely to their research needs, incorporating custom functions and scripts as required.

Transparency and Documentation: One of R’s strengths lies in its capacity for full transparency and documentation. Analyses can be documented and presented in R Notebooks, providing a comprehensive record of the analytical process. This transparency ensures that every step of the analysis is clearly documented, enhancing the reproducibility of research findings.

Version Control: R offers robust version control measures, enabling researchers to track and trace specific versions of software and packages used in their analysis. This ensures that analyses are conducted using consistent software environments, minimizing the risk of discrepancies or errors due to software updates or changes.

Replicability: Replicability is paramount in scientific research, particularly in light of the ongoing Replication Crisis (Yong 2018; Aschwanden 2018; Diener and Biswas-Diener 2019; Velasco 2019; McRae 2018). By conducting analyses within R and documenting the entire workflow in scripts or Notebooks, researchers facilitate easy replication of their analyses by others. This not only enhances the credibility and robustness of research findings but also fosters greater collaboration and transparency within the scientific community.

The Replication Crisis, which has affected various fields since the early 2010s (Fanelli 2009), underscores the importance of transparent and replicable research practices. R’s capability to document workflows comprehensively in scripts or Notebooks addresses this challenge directly. Researchers can readily share their scripts or Notebooks with others, enabling full transparency and facilitating replication of analyses. This emphasis on transparency and replicability aligns closely with best practices in scientific research, ensuring that findings can be scrutinized, validated, and built upon by the broader research community.

Preparation and session set up

This tutorial is conducted within the R environment. If you’re new to R or haven’t installed it yet, you can find an introduction to R and further instructions on how to use it here. To run the scripts provided in this tutorial, you need to install a number of R packages. If you’ve already installed the required packages, feel free to skip ahead and disregard this section. Otherwise, execute the code below. Please note that installation may take some time (usually between 1 and 5 minutes), so there’s no need to be concerned if it takes a while.

# install packages

install.packages("quanteda")

install.packages("dplyr")

install.packages("stringr")

install.packages("writexl")

install.packages("here")

install.packages("flextable")

# install klippy for copy-to-clipboard button in code chunks

install.packages("remotes")

remotes::install_github("rlesur/klippy")

Now that we’ve installed the required packages, let’s activate them using the following code snippet:

# activate packages

library(quanteda)

library(dplyr)

library(stringr)

library(writexl)

library(here)

library(flextable)

# activate klippy for copy-to-clipboard button

klippy::klippy()

Once you’ve initiated the session by executing the provided code, you’re ready to proceed.

Loading and Processing Text

For this tutorial, we will use Lewis Carroll’s classic novel Alice’s Adventures in Wonderland as our primary text dataset. This whimsical tale follows the adventures of Alice as she navigates a fantastical world filled with peculiar characters and surreal landscapes.

Loading Text (from Project Gutenberg)

To access the text within R, you can use the following code snippet, ensuring you have an active internet connection:

# Load Alice's Adventures in Wonderland text into R

rawtext <- readLines("https://www.gutenberg.org/files/11/11-0.txt")

rawtext (first lines) |

|---|

*** START OF THE PROJECT GUTENBERG EBOOK 11 *** |

[Illustration] |

Alice’s Adventures in Wonderland |

by Lewis Carroll |

THE MILLENNIUM FULCRUM EDITION 3.0 |

Contents |

CHAPTER I. Down the Rabbit-Hole |

CHAPTER II. The Pool of Tears |

CHAPTER III. A Caucus-Race and a Long Tale |

CHAPTER IV. The Rabbit Sends in a Little Bill |

CHAPTER V. Advice from a Caterpillar |

CHAPTER VI. Pig and Pepper |

CHAPTER VII. A Mad Tea-Party |

CHAPTER VIII. The Queen’s Croquet-Ground |

CHAPTER IX. The Mock Turtle’s Story |

CHAPTER X. The Lobster Quadrille |

CHAPTER XI. Who Stole the Tarts? |

CHAPTER XII. Alice’s Evidence |

CHAPTER I. |

Down the Rabbit-Hole |

Alice was beginning to get very tired of sitting by her sister on the |

bank, and of having nothing to do: once or twice she had peeped into |

the book her sister was reading, but it had no pictures or |

conversations in it, “and what is the use of a book,” thought Alice |

“without pictures or conversations?” |

After retrieving the text from Project Gutenberg, it becomes available for analysis within R. However, upon loading the text into our environment, we notice that it requires some processing. This includes removing extraneous elements such as the table of contents to isolate the main body of the text. Therefore, in the next step, we process the text. This will include consolidating the text into a single object and eliminating any non-essential content. Additionally, we clean up the text by removing superfluous white spaces to ensure a tidy dataset for our analysis.

Data Preparation

Data processing and preparation play a crucial role in text analysis, as they directly impact the quality and accuracy of the results obtained. When working with text data, it’s essential to ensure that the data is clean, structured, and formatted appropriately for analysis. This involves tasks such as removing irrelevant information, standardizing text formats, and handling missing or erroneous data.

The importance of data processing and preparation lies in its ability to transform raw text into a usable format that can be effectively analyzed. By cleaning and pre-processing the data, researchers can mitigate the impact of noise and inconsistencies, enabling more accurate and meaningful insights to be extracted from the text.

However, it’s worth noting that data preparation can often be a time-consuming process, sometimes requiring more time and effort than the actual analysis task itself. The extent of data preparation required can vary significantly depending on the complexity of the data and the specific research objectives. While some datasets may require minimal processing, others may necessitate extensive cleaning and transformation.

Ultimately, the time and effort invested in data preparation are essential for ensuring the reliability and validity of the analysis results. By dedicating sufficient attention to data processing and preparation, researchers can enhance the quality of their analyses and derive more robust insights from the text data at hand.

text <- rawtext %>%

# collapse lines into a single text

paste0(collapse = " ") %>%

# remove superfluous white spaces

stringr::str_squish() %>%

# remove everything before "CHAPTER I."

stringr::str_remove(".*CHAPTER I\\.")

text (beginning) |

|---|

Down the Rabbit-Hole Alice was beginning to get very tired of sitting by her sister on the bank, and of having nothing to do: once or twice she had peeped into the book her sister was reading, but it had no pictures or conversations in it, “and what is the use of a book,” thought Alice “without pictures or conversations?” So she was considering in her own mind (as well as she could, for the hot day made her feel very sleepy and stupid), whether the pleasure of making a daisy-chain would be worth the trouble of getting up and picking the daisies, when suddenly a White Rabbit with pink eyes ran close by her. There was nothing so _very_ remarkable in that; nor did Alice think it so _very_ much out of the way to hear the Rabbit say to itself, “Oh dear! Oh dear! I shall be late!” (when she thought it over afterwards, it occurred to her that she ought to have wondered at this, but at the time it all seemed quite natural); but when the Rabbit actually _took a watch out of its waistcoat-pocke |

ADDITIONAL INFO: the regular expression “.*CHAPTER I\\.” can be interpreted as follows:

Match any sequence of characters (.*) followed by the exact text “CHAPTER I” and a literal period (\\.). Because .* is greedy, the match extends to the last occurrence of “CHAPTER I.” in the text. The front matter and the table of contents are therefore removed together, and the remaining text begins right after the heading of the first chapter.
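The effect of the greedy .* can be checked on a small made-up string. The following is a minimal sketch: toy is a hypothetical stand-in for the Gutenberg text, and the lazy variant .*? is not used in this tutorial but is included for contrast.

```r
library(stringr)

# hypothetical miniature of the raw text: front matter, table of contents, body
toy <- "Title Contents CHAPTER I. Down the Rabbit-Hole CHAPTER II. The Pool of Tears CHAPTER I. Down the Rabbit-Hole Alice was beginning"

# greedy: removes everything up to and including the LAST "CHAPTER I."
greedy <- str_remove(toy, ".*CHAPTER I\\.")

# lazy: removes only up to and including the FIRST "CHAPTER I."
lazy <- str_remove(toy, ".*?CHAPTER I\\.")

greedy
lazy
```

With the greedy pattern only the body text remains, whereas the lazy pattern leaves the rest of the table of contents in place. A leading space remains in both cases and can be removed with stringr::str_squish if needed.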

The entire content of Lewis Carroll’s Alice’s Adventures in Wonderland is now combined into a single character object and we can begin with generating concordances (KWICs).

Generating Basic KWICs

Now, extracting concordances becomes straightforward with the kwic function from the quanteda package. This function is designed to enable the extraction of keyword-in-context (KWIC) displays, a common format for displaying concordance lines.

To prepare the text for concordance extraction, we first need to tokenize it, which involves splitting it into individual words or tokens. Additionally, we wrap the search pattern in the phrase function, which allows us to extract phrases consisting of more than one token, such as “poor alice”. For a single-word pattern such as “Alice”, phrase is not strictly required but does no harm.

The kwic function primarily requires two arguments: the tokenized text (x) and the search pattern (pattern). Additionally, it offers flexibility by allowing users to specify the context window, determining the number of words or elements displayed to the left and right of the node word. We’ll delve deeper into customizing this context window later on.

mykwic <- quanteda::kwic(

# define and tokenise text

quanteda::tokens(text),

# define search pattern and add the phrase function

pattern = phrase("Alice")) %>%

# convert it into a data frame

as.data.frame()

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

text1 | 4 | 4 | Down the Rabbit-Hole | Alice | was beginning to get very | Alice |

text1 | 63 | 63 | a book , ” thought | Alice | “ without pictures or conversations | Alice |

text1 | 143 | 143 | in that ; nor did | Alice | think it so _very_ much | Alice |

text1 | 229 | 229 | and then hurried on , | Alice | started to her feet , | Alice |

text1 | 299 | 299 | In another moment down went | Alice | after it , never once | Alice |

text1 | 338 | 338 | down , so suddenly that | Alice | had not a moment to | Alice |

The resulting table showcases how “Alice” is used within our example text. Only the first six instances are displayed here (as returned by the head function).

After extracting a concordance table, we can easily determine the frequency of the search term (“Alice”) using either the nrow or length functions. These functions provide the number of rows in a table (nrow) or the length of a vector (length).

nrow(mykwic)

## [1] 386

length(mykwic$keyword)

## [1] 386

The results indicate that there are 386 instances of the search term (“Alice”). Moreover, we can also explore how often different variants of the search term were found using the table function. This is particularly useful for searches involving several search terms or patterns, although less so in the present example.

table(mykwic$keyword)

##

## Alice

##   386

To gain a deeper understanding of how a word is used, it can be beneficial to extract more context. This can be achieved by adjusting the size of the context window. To do so, we simply specify the window argument of the kwic function. In the following example, we set the context window size to 10 words/elements, deviating from the default size of 5 words/elements.

mykwic_long <- quanteda::kwic(

# define text

quanteda::tokens(text),

# define search pattern

pattern = phrase("alice"),

# define context window size

window = 10) %>%

# make it a data frame

as.data.frame()

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

text1 | 4 | 4 | Down the Rabbit-Hole | Alice | was beginning to get very tired of sitting by her | alice |

text1 | 63 | 63 | what is the use of a book , ” thought | Alice | “ without pictures or conversations ? ” So she was | alice |

text1 | 143 | 143 | was nothing so _very_ remarkable in that ; nor did | Alice | think it so _very_ much out of the way to | alice |

text1 | 229 | 229 | and looked at it , and then hurried on , | Alice | started to her feet , for it flashed across her | alice |

text1 | 299 | 299 | rabbit-hole under the hedge . In another moment down went | Alice | after it , never once considering how in the world | alice |

text1 | 338 | 338 | , and then dipped suddenly down , so suddenly that | Alice | had not a moment to think about stopping herself before | alice |
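The steps so far (tokenising, searching, counting hits, and setting the context window) can be tried out on a small made-up example. This is a minimal sketch: the sentence in toy is invented for illustration, and the case_insensitive argument, which defaults to TRUE in kwic, is not discussed in this tutorial. It does, however, explain why a lower-case pattern such as “alice” also retrieves “Alice”.

```r
library(quanteda)

# invented example text (not from the novel)
toy <- "Alice was tired. Poor Alice! The cat saw alice and the Cat grinned."
toy_tokens <- tokens(toy)

# default search: case-insensitive, window of 2 tokens on each side
kw_default <- as.data.frame(kwic(toy_tokens, pattern = "alice", window = 2))

# case-sensitive search: only the lower-case token matches
kw_exact <- as.data.frame(
  kwic(toy_tokens, pattern = "alice", window = 2, case_insensitive = FALSE)
)

nrow(kw_default)          # number of hits, ignoring case
table(kw_default$keyword) # which spelling variants were found
nrow(kw_exact)            # number of hits when case matters
```

Setting case_insensitive = FALSE is one way to separate, say, sentence-initial from sentence-internal uses of a word. Whether that distinction matters depends on the research question.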

EXERCISE TIME!

- Extract the first 10 concordances for the word confused.

Answer

kwic_confused <- quanteda::kwic(x = quanteda::tokens(text), pattern = phrase("confused"))

# inspect

kwic_confused %>%

as.data.frame() %>%

head(10)

## docname from to pre keyword

## 1 text1 6217 6217 , calling out in a confused

## 2 text1 19140 19140 . ” This answer so confused

## 3 text1 19325 19325 said Alice , very much confused

## 4 text1 33422 33422 she knew ) to the confused

## post pattern

## 1 way , “ Prizes ! confused

## 2 poor Alice , that she confused

## 3 , “ I don’t think confused

## 4 clamour of the busy farm-yard—while confused

- How many instances are there of the word wondering?

Answer

quanteda::kwic(x = quanteda::tokens(text), pattern = phrase("wondering")) %>%

as.data.frame() %>%

nrow()

## [1] 7

- Extract concordances for the word strange and show the first 5 concordance lines.

Answer

kwic_strange <- quanteda::kwic(x = quanteda::tokens(text), pattern = phrase("strange"))

# inspect

kwic_strange %>%

as.data.frame() %>%

head(5) ## docname from to pre keyword

## 1 text1 3527 3527 her voice sounded hoarse and strange

## 2 text1 13147 13147 , that it felt quite strange

## 3 text1 32997 32997 remember them , all these strange

## 4 text1 33204 33204 her became alive with the strange

## 5 text1 33514 33514 and eager with many a strange

## post pattern

## 1 , and the words did strange

## 2 at first ; but she strange

## 3 Adventures of hers that you strange

## 4 creatures of her little sister’s strange

## 5 tale , perhaps even with strange

Exporting KWICs

To export or save a concordance table as an MS Excel spreadsheet, you can use the write_xlsx function from the writexl package, as demonstrated below. Note that we use the here function from the here package to specify where the file is saved. The here function builds the path relative to the project root, so in this instance the file is saved in the top-level project directory. If you’re working with Rproj files in RStudio, which is recommended, this is the directory or folder where your Rproj file is located, which is normally also the current working directory.

write_xlsx(mykwic, here::here("mykwic.xlsx"))

Extracting Multi-Word Expressions

While extracting single words is a common practice, there are situations where you may need to extract more than just one word at a time. This can be particularly useful when you’re interested in extracting phrases or multi-word expressions from your text data. To accomplish this, you simply need to specify that the pattern you are searching for is a phrase. This allows you to extract contiguous sequences of words that form meaningful units of text.

For example, if you’re analyzing a text and want to extract phrases like “poor alice”, “mad hatter”, or “cheshire cat”, you can easily do so by specifying these phrases as your search patterns.

# extract concordances for the phrase "poor alice" using the kwic function from the quanteda package

kwic_pooralice <- quanteda::kwic(

# tokenizing the input text

quanteda::tokens(text),

# specifying the search pattern as the phrase "poor alice"

pattern = phrase("poor alice") ) %>%

# converting the result to a data frame for easier manipulation

as.data.frame()

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

text1 | 1,541 | 1,542 | go through , ” thought | poor Alice | , “ it would be | poor alice |

text1 | 2,130 | 2,131 | ; but , alas for | poor Alice | ! when she got to | poor alice |

text1 | 2,332 | 2,333 | use now , ” thought | poor Alice | , “ to pretend to | poor alice |

text1 | 2,887 | 2,888 | to the garden door . | Poor Alice | ! It was as much | poor alice |

text1 | 3,604 | 3,605 | right words , ” said | poor Alice | , and her eyes filled | poor alice |

text1 | 6,876 | 6,877 | mean it ! ” pleaded | poor Alice | . “ But you’re so | poor alice |

text1 | 7,290 | 7,291 | more ! ” And here | poor Alice | began to cry again , | poor alice |

text1 | 8,239 | 8,240 | at home , ” thought | poor Alice | , “ when one wasn’t | poor alice |

text1 | 11,788 | 11,789 | to it ! ” pleaded | poor Alice | in a piteous tone . | poor alice |

text1 | 19,141 | 19,142 | ” This answer so confused | poor Alice | , that she let the | poor alice |
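The phrase function also accepts several multi-word expressions at once. The following minimal sketch uses an invented sentence (toy is not taken from the novel) to show how two phrases can be retrieved in a single search and then counted.

```r
library(quanteda)

# invented example text (not from the novel)
toy <- "Poor Alice began to cry. The Cat grinned at poor Alice, and the cat vanished."

# search for two multi-word expressions at once
kw_multi <- as.data.frame(
  kwic(tokens(toy), pattern = phrase(c("poor alice", "the cat")), window = 3)
)

# each hit spans two tokens, so `to` is one larger than `from`
kw_multi[, c("from", "to", "keyword", "pattern")]

# frequency of each expression, ignoring case
table(tolower(kw_multi$keyword))
```

The pattern column records which of the search phrases produced a given hit, which is convenient when several expressions are searched together.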

In addition to exact words or phrases, there are situations where you may need to extract more or less fixed patterns from your text data. These patterns might allow for variations in spelling, punctuation, or formatting. To search for such flexible patterns, you need to incorporate regular expressions into your search pattern.

Regular expressions (regex) are powerful tools for pattern matching and text manipulation. They allow you to define flexible search patterns that can match a wide range of text variations. For example, you can use regex to find all instances of a word regardless of whether it’s in lowercase or uppercase, or to identify patterns like dates, email addresses, or URLs.

To incorporate regular expressions into your search pattern, you can use functions like grepl() or grep() in base R, or str_detect() and str_extract() in the stringr package. These functions allow you to specify regex patterns to search for within your text data.

EXERCISE TIME!

- Extract the first 10 concordances for the phrase the hatter.

Answer

kwic_thehatter <- quanteda::kwic(x = quanteda::tokens(text), pattern = phrase("the hatter"))

# inspect

kwic_thehatter %>%

as.data.frame() %>%

head(10)

## docname from to pre keyword

## 1 text1 16576 16577 wish I’d gone to see the Hatter

## 2 text1 16607 16608 and the March Hare and the Hatter

## 3 text1 16859 16860 wants cutting , ” said the Hatter

## 4 text1 16905 16906 it’s very rude . ” The Hatter

## 5 text1 17049 17050 a bit ! ” said the Hatter

## 6 text1 17174 17175 with you , ” said the Hatter

## 7 text1 17209 17210 , which wasn’t much . The Hatter

## 8 text1 17287 17288 days wrong ! ” sighed the Hatter

## 9 text1 17338 17339 in as well , ” the Hatter

## 10 text1 17453 17454 should it ? ” muttered the Hatter

## post pattern

## 1 instead ! ” CHAPTER VII the hatter

## 2 were having tea at it the hatter

## 3 . He had been looking the hatter

## 4 opened his eyes very wide the hatter

## 5 . “ You might just the hatter

## 6 , and here the conversation the hatter

## 7 was the first to break the hatter

## 8 . “ I told you the hatter

## 9 grumbled : “ you shouldn’t the hatter

## 10 . “ Does _your_ watch the hatter

- How many instances are there of the phrase the hatter?

Answer

kwic_thehatter %>%

as.data.frame() %>%

nrow()

## [1] 51

- Extract concordances for the phrase the cat and show the first 5 concordance lines.

Answer

kwic_thecat <- quanteda::kwic(x = quanteda::tokens(text), pattern = phrase("the cat"))

# inspect

kwic_thecat %>%

as.data.frame() %>%

head(5)

## docname from to pre keyword post

## 1 text1 932 933 ! ” ( Dinah was the cat . ) “ I hope

## 2 text1 15624 15625 a few yards off . The Cat only grinned when it saw

## 3 text1 15749 15750 get to , ” said the Cat . “ I don’t much

## 4 text1 15775 15776 you go , ” said the Cat . “ — so long

## 5 text1 15805 15806 do that , ” said the Cat , “ if you only

## pattern

## 1 the cat

## 2 the cat

## 3 the cat

## 4 the cat

## 5 the cat

Concordancing Using Regular Expressions

Regular expressions provide a powerful means of searching for abstract patterns within text data, offering unparalleled flexibility beyond concrete words or phrases. Often abbreviated as regex or regexp, a regular expression is a special sequence of characters that describe a pattern to be matched in a text.

You can conceptualize regular expressions as highly potent combinations of wildcards, offering an extensive range of pattern-matching capabilities. For instance, the sequence [a-z]{1,3} is a regular expression that signifies one to three lowercase characters. Searching for whole words that match this regular expression would yield results such as “is”, “a”, “an”, “of”, “the”, “my”, “our”, and other short words.
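As a minimal sketch of this idea, the following code extracts short words from an invented sentence with str_extract_all from the stringr package. The word-boundary anchors (\\b) are an addition for this illustration. Without them, the pattern would also match chunks inside longer words.

```r
library(stringr)

# invented example sentence
x <- "alice is in the garden with a rabbit"

# \\b anchors the match at word boundaries,
# so only whole words of one to three lowercase letters are returned
short_words <- str_extract_all(x, "\\b[a-z]{1,3}\\b")[[1]]
short_words

# without anchors, chunks of longer words (e.g. "ali" from "alice") match too
chunks <- str_extract_all(x, "[a-z]{1,3}")[[1]]
chunks
```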

There are three fundamental types of regular expressions:

- Regular expressions for individual symbols and frequencies: These regular expressions represent single characters and determine their frequencies within the text. For example, [a-z] matches any lowercase letter, [0-9] matches any digit, and {1,3} specifies a range of occurrences (one to three in this case).

- Regular expressions for classes of symbols: These regular expressions represent classes or groups of symbols with shared characteristics. For instance, \d matches any digit, \w matches any word character (alphanumeric characters and underscores), and \s matches any whitespace character. Inside R strings, the backslash has to be doubled, i.e. \\d, \\w, and \\s.

- Regular expressions for structural properties: These regular expressions represent structural properties or patterns within the text. For example, ^ matches the start of a string (or line), $ matches the end of a string (or line), and \b matches a word boundary.

The regular expressions below show the first type of regular expressions, i.e. regular expressions that stand for individual symbols and determine frequencies.

RegEx Symbol/Sequence | Explanation | Example |

|---|---|---|

? | The preceding item is optional and will be matched at most once | walk[a-z]? = walk, walks |

* | The preceding item will be matched zero or more times | walk[a-z]* = walk, walks, walked, walking |

+ | The preceding item will be matched one or more times | walk[a-z]+ = walks, walked, walking |

{n} | The preceding item is matched exactly n times | walk[a-z]{2} = walked |

{n,} | The preceding item is matched n or more times | walk[a-z]{2,} = walked, walking |

{n,m} | The preceding item is matched at least n times, but not more than m times | walk[a-z]{2,3} = walked, walking |
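The examples in the table can be verified with base R. The sketch below is an illustration rather than part of the original tutorial: it wraps each pattern in ^ and $ so that the whole word has to match, and the object names (words, quantifiers, matches) are made up for this purpose.

```r
# candidate word forms
words <- c("walk", "walks", "walked", "walking")

# the quantifiers from the table above
quantifiers <- c("?", "*", "+", "{2}", "{2,}", "{2,3}")

# for each quantifier, keep the words matched in full by walk[a-z]<quantifier>
matches <- lapply(quantifiers, function(q) {
  words[grepl(paste0("^walk[a-z]", q, "$"), words)]
})
names(matches) <- paste0("walk[a-z]", quantifiers)

matches
```

Without the anchors, grepl would report a match as soon as any part of a word fits the pattern, so that, for example, walk[a-z]? would also match “walking”.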

The regular expressions below show the second type of regular expressions, i.e. regular expressions that stand for classes of symbols.

RegEx Symbol/Sequence | Explanation |

|---|---|

[ab] | lower case a or b |

[AB] | upper case a or b |

[12] | digits 1 or 2 |

[:digit:] | digits: 0 1 2 3 4 5 6 7 8 9 |

[:lower:] | lower case characters: a–z |

[:upper:] | upper case characters: A–Z |

[:alpha:] | alphabetic characters: a–z and A–Z |

[:alnum:] | digits and alphabetic characters |

[:punct:] | punctuation characters: . , ; etc. |

[:graph:] | graphical characters: [:alnum:] and [:punct:] |

[:blank:] | blank characters: Space and tab |

[:space:] | space characters: Space, tab, newline, and other space characters |

[:print:] | printable characters: [:alnum:], [:punct:] and [:space:] |

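The regular expressions that denote classes of symbols are enclosed in [] and :. When used in a search pattern, they must be placed inside a bracket expression, e.g. [[:digit:]]. The last type of regular expressions, i.e. regular expressions that stand for structural properties, is shown below, together with shorthand escape sequences for common classes of symbols.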

RegEx Symbol/Sequence | Explanation |

|---|---|

\\w | Word characters: [[:alnum:]_] |

\\W | No word characters: [^[:alnum:]_] |

\\s | Space characters: [[:space:]] |

\\S | No space characters: [^[:space:]] |

\\d | Digits: [[:digit:]] |

\\D | No digits: [^[:digit:]] |

\\b | Word edge |

\\B | No word edge |

\\< | Word beginning (base R regular expressions) |

\\> | Word end (base R regular expressions) |

^ | Beginning of a string |

$ | End of a string |
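A few of the symbols from the tables above can be tried out with base R functions. This is a minimal sketch on an invented sentence; the object names are made up for illustration.

```r
# invented example sentence
x <- "Alice paid 42 pounds, didn't she?"

# [[:punct:]]: remove all punctuation characters
no_punct <- gsub("[[:punct:]]", "", x)

# [[:digit:]]+: extract runs of one or more digits
digits <- regmatches(x, gregexpr("[[:digit:]]+", x))[[1]]

# ^ and $: test the beginning and the end of the string
starts_with_alice <- grepl("^Alice", x)
ends_with_question <- grepl("\\?$", x)

# \\b: "she" only matches as a whole word, not inside "shed"
whole_word <- grepl("\\bshe\\b", c("did she", "the shed"))

no_punct
digits
starts_with_alice
ends_with_question
whole_word
```

Note that the POSIX classes are wrapped in an additional pair of square brackets, and that the question mark has to be escaped (\\?) because it is otherwise read as a quantifier.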

To incorporate regular expressions into your KWIC searches, you include them in your search pattern and set the valuetype argument to "regex". This allows you to specify complex search patterns that go beyond exact word matches.

For example, consider the search pattern "\\balic.*|\\bhatt.*". In this pattern:

- \\b indicates a word boundary, ensuring that the subsequent characters are at the beginning of a word.

- alic.* matches any sequence of characters (.*) that begins with alic.

- hatt.* matches any sequence of characters that begins with hatt.

- The | operator functions as an OR operator, allowing the pattern to match either alic.* or hatt.*.

As a result, this search pattern retrieves elements that contain

alic or hatt followed by any characters, but

only where alic and hatt are the first letters

of a word. Consequently, words like “malice” or “shatter” would not be

retrieved.
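
To check this behaviour outside of a concordance, the pattern can be tried on a handful of words with base R. This is only a sketch: the word list is made up, and `ignore.case = TRUE` is used here to mimic the case-insensitive matching that `kwic` applies by default.

```r
# toy word list (made up for illustration)
words <- c("alice", "Alice's", "malice", "hatter", "shatter", "hat")

# the search pattern discussed above
pattern <- "\\balic.*|\\bhatt.*"

# TRUE where a word starts with "alic" or "hatt"
hits <- grepl(pattern, words, ignore.case = TRUE)

words[hits]
```

The words "malice" and "shatter" are not matched because alic and hatt do not stand at a word boundary, and "hat" is not matched because the pattern requires hatt.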

By using regular expressions in your KWIC searches, you can conduct more nuanced and precise searches, capturing specific patterns or variations within your text data.

# define search patterns

patterns <- c("\\balic.*|\\bhatt.*")

kwic_regex <- quanteda::kwic(

# define text

quanteda::tokens(text),

# define search pattern

patterns,

# define valuetype

valuetype = "regex") %>%

# make it a data frame

as.data.frame()

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

text1 | 4 | 4 | Down the Rabbit-Hole | Alice | was beginning to get very | \balic.*|\bhatt.* |

text1 | 63 | 63 | a book , ” thought | Alice | “ without pictures or conversations | \balic.*|\bhatt.* |

text1 | 143 | 143 | in that ; nor did | Alice | think it so _very_ much | \balic.*|\bhatt.* |

text1 | 229 | 229 | and then hurried on , | Alice | started to her feet , | \balic.*|\bhatt.* |

text1 | 299 | 299 | In another moment down went | Alice | after it , never once | \balic.*|\bhatt.* |

text1 | 338 | 338 | down , so suddenly that | Alice | had not a moment to | \balic.*|\bhatt.* |

text1 | 521 | 521 | “ Well ! ” thought | Alice | to herself , “ after | \balic.*|\bhatt.* |

text1 | 647 | 647 | for , you see , | Alice | had learnt several things of | \balic.*|\bhatt.* |

text1 | 719 | 719 | got to ? ” ( | Alice | had no idea what Latitude | \balic.*|\bhatt.* |

text1 | 910 | 910 | else to do , so | Alice | soon began talking again . | \balic.*|\bhatt.* |

EXERCISE TIME!

- Extract the first 10 concordances for words containing exu.

Answer

kwic_exu <- quanteda::kwic(x = quanteda::tokens(text), pattern = ".*exu.*", valuetype = "regex")

# inspect

kwic_exu %>%

as.data.frame() %>%

head(10)

## [1] docname from to pre keyword post pattern

## <0 rows> (or 0-length row.names)

- How many instances are there of words beginning with pit?

Answer

quanteda::kwic(x = quanteda::tokens(text), pattern = "\\bpit.*", valuetype = "regex") %>%

as.data.frame() %>%

nrow()

## [1] 5

- Extract concordances for words ending with ption and show the first 5 concordance lines.

Answer

quanteda::kwic(x = quanteda::tokens(text), pattern = "ption\\b", valuetype = "regex") %>%

as.data.frame() %>%

head(5)

## docname from to pre keyword

## 1 text1 5775 5775 adjourn , for the immediate adoption

## post pattern

## 1 of more energetic remedies — ption\\b

Concordancing and Piping

Quite often, we want to retrieve patterns only if they occur in a specific context. For instance, we might be interested in instances of “alice”, but only if the preceding word is “poor” or “little”. While such conditional concordances could be extracted using regular expressions, they are more easily retrieved by piping.

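Piping is achieved using the %>% operator, which comes from the magrittr package and is also made available by the dplyr package. The piping sequence can be interpreted as “and then”. We first extract all concordances of “alice” and then keep only those in which “poor” or “little” directly precedes the nodeword, using the filter function from the dplyr package together with the str_detect function from the stringr package. Note that the $ symbol stands for the end of a string, so “poor$” signifies that “poor” is the last element in the string that precedes the nodeword.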

# extract KWIC concordances

quanteda::kwic(

# input tokenized text

x = quanteda::tokens(text),

# define search pattern ("alice")

pattern = "alice"

# pipe (and then)

) %>%

# convert result to data frame

as.data.frame() %>%

# filter concordances with "poor" or "little" preceding "alice"

# save result in object called "kwic_pipe"

dplyr::filter(stringr::str_detect(pre, "poor$|little$")) -> kwic_pipe

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

text1 | 1,542 | 1,542 | through , ” thought poor | Alice | , “ it would be | alice |

text1 | 1,725 | 1,725 | ” but the wise little | Alice | was not going to do | alice |

text1 | 2,131 | 2,131 | but , alas for poor | Alice | ! when she got to | alice |

text1 | 2,333 | 2,333 | now , ” thought poor | Alice | , “ to pretend to | alice |

text1 | 3,605 | 3,605 | words , ” said poor | Alice | , and her eyes filled | alice |

text1 | 6,877 | 6,877 | it ! ” pleaded poor | Alice | . “ But you’re so | alice |

text1 | 7,291 | 7,291 | ! ” And here poor | Alice | began to cry again , | alice |

text1 | 8,240 | 8,240 | home , ” thought poor | Alice | , “ when one wasn’t | alice |

text1 | 11,789 | 11,789 | it ! ” pleaded poor | Alice | in a piteous tone . | alice |

text1 | 19,142 | 19,142 | This answer so confused poor | Alice | , that she let the | alice |

In this code:

- quanteda::kwic: This function extracts KWIC concordances from the input text.

- quanteda::tokens(text): The input text is tokenized using the tokens function from the quanteda package.

- pattern = "alice": Specifies the search pattern as “alice”.

- %>%: The pipe operator (%>%) chains together multiple operations, passing the result of one operation as the input to the next.

- as.data.frame(): Converts the resulting concordance object into a data frame.

- dplyr::filter(...): Filters the concordances based on the specified condition, which is whether “poor” or “little” precedes “alice”.
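
The filtering step can also be tried in isolation. The sketch below assumes a small, made-up data frame `toy_kwic` that merely imitates the `pre` and `keyword` columns of a concordance; it is not the tutorial data.

```r
library(dplyr)
library(stringr)

# made-up stand-in for a concordance table
toy_kwic <- data.frame(
  pre = c("now , thought poor",
          "but the wise little",
          "poor little thing said",
          "cried the"),
  keyword = "Alice",
  stringsAsFactors = FALSE
)

# keep rows in which "poor" or "little" is the last word before the keyword
kept <- toy_kwic %>%
  dplyr::filter(stringr::str_detect(pre, "poor$|little$"))

kept$pre
```

The third row is dropped although it contains both words, because neither of them stands at the very end of the preceding context.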

Piping is widely used in R across data science domains, including text processing. The pipe operator, written %>%, passes the result of one operation on as the input to the next, which allows for a more streamlined and readable workflow.

Instead of nesting functions or creating intermediate variables, piping lets you write the steps in the order in which they are carried out. Each operation is performed “and then” the next, leading to code that is easier to understand and maintain.

Piping is not limited to text processing: it is just as useful in data wrangling, modeling, and visualization, where it offers a concise way of composing complex workflows.

Used consistently, piping makes code more expressive and succinct while keeping it clear and readable.
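
As a small illustration of the difference between nesting and piping, the sketch below applies the same three steps in both styles. The vector `contexts` is made up for this purpose.

```r
library(dplyr)

# made-up example strings
contexts <- c("poor alice", "little alice", "poor alice")

# nested: has to be read from the inside out
nested <- sort(unique(toupper(contexts)))

# piped: reads from top to bottom as "and then"
piped <- contexts %>%
  toupper() %>%
  unique() %>%
  sort()

# both styles give the same result
identical(nested, piped)
```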

Ordering and Arranging KWICs

When examining concordances, it’s often beneficial to reorder them

based on their context rather than the order in which they appeared in

the text or texts. This allows for a more organized and structured

analysis of the data. To reorder concordances, we can utilize the

arrange function from the dplyr package, which

takes the column according to which we want to rearrange the data as its

main argument.

Ordering Alphabetically

In the example below, we extract all instances of “alice” and then arrange the instances according to the content of the post column in alphabetical order.

# extract KWIC concordances

quanteda::kwic(

# input tokenized text

x = quanteda::tokens(text),

# define search pattern ("alice")

pattern = "alice"

# end function and pipe (and then)

) %>%

# convert result to data frame

as.data.frame() %>%

# arrange concordances based on the content of the "post" column

# save result in object called "kwic_ordered"

dplyr::arrange(post) -> kwic_ordered

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

text1 | 7,754 | 7,754 | happen : “ ‘ Miss | Alice | ! Come here directly , | alice |

text1 | 2,888 | 2,888 | the garden door . Poor | Alice | ! It was as much | alice |

text1 | 2,131 | 2,131 | but , alas for poor | Alice | ! when she got to | alice |

text1 | 30,891 | 30,891 | voice , the name “ | Alice | ! ” CHAPTER XII . | alice |

text1 | 8,423 | 8,423 | “ Oh , you foolish | Alice | ! ” she answered herself | alice |

text1 | 2,606 | 2,606 | and curiouser ! ” cried | Alice | ( she was so much | alice |

text1 | 25,861 | 25,861 | I haven’t , ” said | Alice | ) — “ and perhaps | alice |

text1 | 32,275 | 32,275 | explain it , ” said | Alice | , ( she had grown | alice |

text1 | 32,843 | 32,843 | for you ? ” said | Alice | , ( she had grown | alice |

text1 | 1,678 | 1,678 | here before , ” said | Alice | , ) and round the | alice |
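
If the reverse alphabetical order is wanted, the column can be wrapped in `desc()`. The sketch below uses a made-up data frame `toy_kwic`. It contains lowercase words only, because how punctuation and capital letters are ordered depends on the collation in use.

```r
library(dplyr)

# made-up stand-in for a concordance table
toy_kwic <- data.frame(
  keyword = "Alice",
  post = c("was beginning", "began talking", "had learnt"),
  stringsAsFactors = FALSE
)

# ascending order (a to z)
asc <- toy_kwic %>%
  dplyr::arrange(post)

# descending order (z to a)
dsc <- toy_kwic %>%
  dplyr::arrange(dplyr::desc(post))

asc$post
dsc$post
```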

Ordering by Co-Occurrence Frequency

Arranging concordances based on alphabetical properties may not always be the most informative approach. A more insightful option is to arrange concordances according to the frequency of co-occurring terms or collocates. This allows us to identify the most common words that appear alongside our search term, providing valuable insights into its usage patterns.

To accomplish this, we need to follow these steps:

- Create a new variable or column that represents the word that co-occurs with the search term. In the example below, we use the mutate function from the dplyr package to create a new column called post1. We then use the str_remove_all function from the stringr package to extract the word that immediately follows the search term. This is achieved by removing the first white space and everything that comes after it, so that only the word following the search term remains.

- Group the data by the word that immediately follows the search term.

- Create a new column called post1_freq that represents the frequencies of all the words that immediately follow the search term.

- Arrange the concordances by the frequency of the collocates in descending order. This is accomplished by using the arrange function and specifying the column post1_freq in descending order (indicated by the - symbol).

quanteda::kwic(

# define text

x = quanteda::tokens(text),

# define search pattern

pattern = "alice") %>%

# make it a data frame

as.data.frame() %>%

# extract word following the nodeword

dplyr::mutate(post1 = str_remove_all(post, " .*")) %>%

# group following words

dplyr::group_by(post1) %>%

# extract frequencies of the following words

dplyr::mutate(post1_freq = n()) %>%

# arrange/order by the frequency of the following word

dplyr::arrange(-post1_freq) -> kwic_ordered_coll

docname | from | to | pre | keyword | post | pattern | post1 | post1_freq |

|---|---|---|---|---|---|---|---|---|

text1 | 1,542 | 1,542 | through , ” thought poor | Alice | , “ it would be | alice | , | 78 |

text1 | 1,678 | 1,678 | here before , ” said | Alice | , ) and round the | alice | , | 78 |

text1 | 2,333 | 2,333 | now , ” thought poor | Alice | , “ to pretend to | alice | , | 78 |

text1 | 2,410 | 2,410 | eat it , ” said | Alice | , “ and if it | alice | , | 78 |

text1 | 2,739 | 2,739 | to them , ” thought | Alice | , “ or perhaps they | alice | , | 78 |

text1 | 2,945 | 2,945 | of yourself , ” said | Alice | , “ a great girl | alice | , | 78 |

text1 | 3,605 | 3,605 | words , ” said poor | Alice | , and her eyes filled | alice | , | 78 |

text1 | 3,751 | 3,751 | oh dear ! ” cried | Alice | , with a sudden burst | alice | , | 78 |

text1 | 3,918 | 3,918 | narrow escape ! ” said | Alice | , a good deal frightened | alice | , | 78 |

text1 | 4,181 | 4,181 | so much ! ” said | Alice | , as she swam about | alice | , | 78 |

In this code:

- mutate: This function from the dplyr package creates a new column in the data frame.

- str_remove_all: This function from the stringr package removes all occurrences of a specified pattern from a character string.

- group_by: This function from the dplyr package groups the data by a specified variable.

- n(): This function from the dplyr package calculates the number of observations in each group.

- arrange: This function from the dplyr package arranges the rows of a data frame based on the values of one or more columns.

Following the same schema, we could add more columns according to which the concordance can be arranged. For example, we could add another column that represents the frequency of the words that immediately precede the search term and then arrange according to this column.
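
A minimal sketch of this variant is shown below. It assumes a made-up data frame `toy_kwic` in place of the real concordance, and the column names `pre1` and `pre1_freq` are chosen here by analogy with `post1` and `post1_freq`.

```r
library(dplyr)
library(stringr)

# made-up stand-in for a concordance table
toy_kwic <- data.frame(
  pre = c("it , said", "now , thought poor", "know , said", "cried"),
  keyword = "Alice",
  stringsAsFactors = FALSE
)

kwic_by_pre <- toy_kwic %>%
  # keep only the last word of the preceding context
  dplyr::mutate(pre1 = stringr::str_remove_all(pre, ".* ")) %>%
  # count how often each preceding word occurs
  dplyr::group_by(pre1) %>%
  dplyr::mutate(pre1_freq = dplyr::n()) %>%
  dplyr::ungroup() %>%
  # most frequent preceding words first
  dplyr::arrange(dplyr::desc(pre1_freq))

kwic_by_pre
```

Here the pattern `".* "` removes everything up to and including the last white space, which mirrors the `" .*"` pattern used for the following word.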

Ordering by Multiple Co-Occurrence Frequencies

In this section, we extract the three words preceding and following the nodeword “alice” from the concordance data and organize the results by the frequencies of the following words (you can also order by the preceding words which is why we also extract them).

We begin by iterating through each row of the concordance data using

rowwise(). Then, we extract the three words following the

nodeword (“alice”) and the three words preceding it from the

post and pre columns, respectively. These

words are split using the strsplit function and stored in

separate columns (post1, post2,

post3, pre1, pre2,

pre3).

Next, we group the data by each of the extracted words in turn (post1, post2, post3, pre1, pre2, pre3) and calculate the frequency of each word using the n() function within each group. This allows us to determine how often each word occurs in the respective position relative to the nodeword “alice”.

Finally, we arrange the concordances based on the frequencies of the

following words (post1, post2,

post3) in descending order using the arrange()

function, storing the result in the mykwic_following data

frame.

mykwic %>%

dplyr::rowwise() %>% # Row-wise operation for each entry

# Extract words preceding and following the node word

# Extracting the first word following the node word

dplyr::mutate(post1 = unlist(strsplit(post, " "))[1],

# Extracting the second word following the node word

post2 = unlist(strsplit(post, " "))[2],

# Extracting the third word following the node word

post3 = unlist(strsplit(post, " "))[3],

# Extracting the last word preceding the node word

pre1 = unlist(strsplit(pre, " "))[length(unlist(strsplit(pre, " ")))],

# Extracting the second-to-last word preceding the node word

pre2 = unlist(strsplit(pre, " "))[length(unlist(strsplit(pre, " ")))-1],

# Extracting the third-to-last word preceding the node word

pre3 = unlist(strsplit(pre, " "))[length(unlist(strsplit(pre, " ")))-2]) %>%

# Extract frequencies of the words around the node word

# Grouping by the first word following the node word and counting its frequency

dplyr::group_by(post1) %>% dplyr::mutate(npost1 = n()) %>%

# Grouping by the second word following the node word and counting its frequency

dplyr::group_by(post2) %>% dplyr::mutate(npost2 = n()) %>%

# Grouping by the third word following the node word and counting its frequency

dplyr::group_by(post3) %>% dplyr::mutate(npost3 = n()) %>%

# Grouping by the last word preceding the node word and counting its frequency

dplyr::group_by(pre1) %>% dplyr::mutate(npre1 = n()) %>%

# Grouping by the second-to-last word preceding the node word and counting its frequency

dplyr::group_by(pre2) %>% dplyr::mutate(npre2 = n()) %>%

# Grouping by the third-to-last word preceding the node word and counting its frequency

dplyr::group_by(pre3) %>% dplyr::mutate(npre3 = n()) %>%

# Arranging the results

# Arranging in descending order of frequencies of words following the node word

dplyr::arrange(-npost1, -npost2, -npost3) -> mykwic_following

docname | from | to | pre | keyword | post | pattern | post1 | post2 | post3 | pre1 | pre2 | pre3 | npost1 | npost2 | npost3 | npre1 | npre2 | npre3 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

text1 | 2,945 | 2,945 | of yourself , ” said | Alice | , “ a great girl | Alice | , | “ | a | said | ” | , | 78 | 89 | 13 | 115 | 164 | 106 |

text1 | 2,410 | 2,410 | eat it , ” said | Alice | , “ and if it | Alice | , | “ | and | said | ” | , | 78 | 89 | 9 | 115 | 164 | 106 |

text1 | 6,525 | 6,525 | you know , ” said | Alice | , “ and why it | Alice | , | “ | and | said | ” | , | 78 | 89 | 9 | 115 | 164 | 106 |

text1 | 28,751 | 28,751 | the jury-box , ” thought | Alice | , “ and those twelve | Alice | , | “ | and | thought | ” | , | 78 | 89 | 9 | 26 | 164 | 106 |

text1 | 2,333 | 2,333 | now , ” thought poor | Alice | , “ to pretend to | Alice | , | “ | to | poor | thought | ” | 78 | 89 | 7 | 10 | 3 | 9 |

text1 | 4,312 | 4,312 | , now , ” thought | Alice | , “ to speak to | Alice | , | “ | to | thought | ” | , | 78 | 89 | 7 | 26 | 164 | 106 |

text1 | 1,542 | 1,542 | through , ” thought poor | Alice | , “ it would be | Alice | , | “ | it | poor | thought | ” | 78 | 89 | 5 | 10 | 3 | 9 |

text1 | 16,113 | 16,113 | very much , ” said | Alice | , “ but I haven’t | Alice | , | “ | but | said | ” | , | 78 | 89 | 4 | 115 | 164 | 106 |

text1 | 26,693 | 26,693 | “ Yes , ” said | Alice | , “ I’ve often seen | Alice | , | “ | I’ve | said | ” | , | 78 | 89 | 4 | 115 | 164 | 106 |

text1 | 22,354 | 22,354 | matter much , ” thought | Alice | , “ as all the | Alice | , | “ | as | thought | ” | , | 78 | 89 | 2 | 26 | 164 | 106 |

The updated concordance now presents the arrangement based on the frequency of words following the node word. This means that the words occurring most frequently immediately after the keyword “alice” are listed first, followed by less frequent ones.

It’s essential to note that the arrangement can be customized by

modifying the arguments within the dplyr::arrange function.

By altering the order and content of these arguments, you can adjust the

sorting criteria. For instance, if you want to prioritize the frequency

of words preceding the node word instead, you can simply rearrange the

arguments accordingly. This flexibility empowers users to tailor the

arrangement to suit their specific analysis goals and preferences.
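
The word extraction used in this section relies on repeated calls to `strsplit`. As an alternative sketch (not the approach used in the tutorial), the `word` function from the `stringr` package can pick out words by position, with negative positions counting from the end. The data frame `toy_kwic` is made up, and the approach assumes that tokens are separated by single spaces, as they are in the `pre` and `post` columns.

```r
library(dplyr)
library(stringr)

# made-up stand-in for a concordance table
toy_kwic <- data.frame(
  pre = c("eat it , said", "now , thought poor"),
  post = c(", and if it", "was not going to"),
  stringsAsFactors = FALSE
)

ctx <- toy_kwic %>%
  dplyr::mutate(
    # first, second, and third word after the node word
    post1 = stringr::word(post, 1),
    post2 = stringr::word(post, 2),
    post3 = stringr::word(post, 3),
    # last, second-to-last, and third-to-last word before the node word
    pre1 = stringr::word(pre, -1),
    pre2 = stringr::word(pre, -2),
    pre3 = stringr::word(pre, -3)
  )

ctx
```

Because `word` is vectorized, this version does not need `rowwise()`.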

Concordances from Transcriptions

Since many analyses rely on transcripts as their main data source, and transcripts often require additional processing due to their specific features, we will now demonstrate concordancing using transcripts. To begin, we will load five example transcripts representing the first five files from the Irish component of the International Corpus of English2. These transcripts will serve as our dataset for conducting concordance analysis.

We first load these files so that we can process them and extract KWICs. To load the files, the code below dynamically generates URLs for a series of text files, then reads the content of each file into R, storing the text data in the transcripts object. This is a common procedure when working with multiple text files or when the filenames follow a consistent pattern.

# define corpus files

files <- paste("https://slcladal.github.io/data/ICEIrelandSample/S1A-00", 1:5, ".txt", sep = "")

# load corpus files

transcripts <- sapply(files, function(x){

x <- readLines(x)

})

. |

|---|

<S1A-001 Riding> |

<I> |

<S1A-001$A> <#> Well how did the riding go tonight |

<S1A-001$B> <#> It was good so it was <#> Just I I couldn't believe that she was going to let me jump <,> that was only the fourth time you know <#> It was great <&> laughter </&> |

<S1A-001$A> <#> What did you call your horse |

<S1A-001$B> <#> I can't remember <#> Oh Mary 's Town <,> oh |

<S1A-001$A> <#> And how did Mabel do |

<S1A-001$B> <#> Did you not see her whenever she was going over the jumps <#> There was one time her horse refused and it refused three times <#> And then <,> she got it round and she just lined it up straight and she just kicked it and she hit it with the whip <,> and over it went the last time you know <#> And Stephanie told her she was very determined and very well-ridden <&> laughter </&> because it had refused the other times you know <#> But Stephanie wouldn't let her give up on it <#> She made her keep coming back and keep coming back <,> until <,> it jumped it you know <#> It was good |

<S1A-001$A> <#> Yeah I 'm not so sure her jumping 's improving that much <#> She uh <,> seemed to be holding the reins very tight |

The first ten lines shown above let us know that, after the header

(<S1A-001 Riding>) and the symbol which indicates the

start of the transcript (<I>), each utterance is

preceded by a sequence which indicates the section, file, and speaker

(e.g. <S1A-001$A>). The first utterance is thus

uttered by speaker A in file 001 of section

S1A. In addition, there are several sequences that provide

meta-linguistic information which indicate the beginning of a speech

unit (<#>), pauses (<,>), and

laughter (<&> laughter </&>).
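
Because the utterance tags follow a fixed format, the file and speaker information can be pulled out with a regular expression. The sketch below is only an illustration: it uses two utterances copied from the output above, and the object names `utterances`, `tags`, and `speakers` are introduced here.

```r
library(stringr)

# two utterances copied from the transcript shown above
utterances <- c(
  "<S1A-001$A> <#> Well how did the riding go tonight",
  "<S1A-001$B> <#> It was good so it was"
)

# capture the file (e.g. S1A-001) and the speaker (e.g. A) from the tag
tags <- stringr::str_match(utterances, "^<(S1A-\\d+)\\$([A-Z]+)>")

# column 1 holds the full match, columns 2 and 3 the captured groups
speakers <- data.frame(
  file = tags[, 2],
  speaker = tags[, 3],
  stringsAsFactors = FALSE
)

speakers
```

The `$` inside the tag has to be escaped (`\\$`), as it would otherwise be read as the end-of-string symbol.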

To perform the concordancing, we need to change the format of the transcripts because the kwic function only works on character, corpus, or tokens objects, whereas in their present form the transcripts represent a list which contains vectors of strings. To change the format, we collapse the individual utterances into a single character string for each transcript.

transcripts_collapsed <- sapply(files, function(x){

# read-in text

x <- readLines(x)

# paste all lines together

x <- paste0(x, collapse = " ")

# remove superfluous white spaces

x <- str_squish(x)

})

. |

|---|

<S1A-001 Riding> <I> <S1A-001$A> <#> Well how did the riding go tonight <S1A-001$B> <#> It was good so it was <#> Just I I couldn't believe that she was going to let me jump <,> that was only the fourth time you know <#> It was great <&> laughter </&> <S1A-001$A> <#> What did you call your horse <S1A-001$B> <#> I can't remember <#> Oh Mary 's Town <,> oh <S1A-001$A> <#> And how did Mabel do <S1A-001$B> <#> Did you not see her whenever she was going over the jumps <#> There was one time her horse |

<S1A-002 Dinner chat 1> <I> <S1A-002$A> <#> He 's been married for three years and is now <{> <[> getting divorced </[> <S1A-002$B> <#> <[> No no </[> </{> he 's got married last year and he 's getting <{> <[> divorced </[> <S1A-002$A> <#> <[> He 's now </[> </{> getting divorced <S1A-002$C> <#> Just right <S1A-002$D> <#> A wee girl of her age like <S1A-002$E> <#> Well there was a guy <S1A-002$C> <#> How long did she try it for <#> An hour a a year <S1A-002$B> <#> Mhm <{> <[> mhm </[> <S1A-002$E |

<S1A-003 Dinner chat 2> <I> <S1A-003$A> <#> I <.> wa </.> I want to go to Peru but uh <S1A-003$B> <#> Do you <S1A-003$A> <#> Oh aye <S1A-003$B> <#> I 'd love to go to Peru <S1A-003$A> <#> I want I want to go up the Machu Picchu before it falls off the edge of the mountain <S1A-003$B> <#> Lima 's supposed to be a bit dodgy <S1A-003$A> <#> Mm <S1A-003$B> <#> Bet it would be <S1A-003$B> <#> Mm <S1A-003$A> <#> But I I just I I would like <,> Machu Picchu is collapsing <S1A-003$B> <#> I don't know wh |

<S1A-004 Nursing home 1> <I> <S1A-004$A> <#> Honest to God <,> I think the young ones <#> Sure they 're flying on Monday in I think it 's Shannon <#> This is from Texas <S1A-004$B> <#> This English girl <S1A-004$A> <#> The youngest one <,> the dentist <,> she 's married to the dentist <#> Herself and her husband <,> three children and she 's six months pregnant <S1A-004$C> <#> Oh God <S1A-004$B> <#> And where are they going <S1A-004$A> <#> Coming to Dublin to the mother <{> <[> or <unclear> 3 sy |

<S1A-005 Masons> <I> <S1A-005$A> <#> Right shall we risk another beer or shall we try and <,> <{> <[> ride the bikes down there or do something like that </[> <S1A-005$B> <#> <[> Well <,> what about the </[> </{> provisions <#> What time <{> <[> <unclear> 4 sylls </unclear> </[> <S1A-005$C> <#> <[> Is is your </[> </{> man coming here <S1A-005$B> <#> <{> <[> Yeah </[> <S1A-005$A> <#> <[> He said </[> </{> he would meet us here <S1A-005$B> <#> Just the boat 's arriving you know a few minutes ' wa |
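
To see what the collapsing step does in isolation, the sketch below applies it to three made-up lines (the vector `lines` stands in for the result of `readLines`). The extra spaces are included on purpose to show the effect of `str_squish`.

```r
library(stringr)

# made-up lines standing in for the output of readLines()
lines <- c(
  "<I>",
  "<S1A-001$A> <#>  Well how did the riding go tonight",
  "<S1A-001$B> <#> It was good "
)

# paste all lines into one string, separated by a space
collapsed <- paste0(lines, collapse = " ")

# trim the ends and reduce runs of white space to a single space
collapsed <- stringr::str_squish(collapsed)

length(collapsed)
collapsed
```

The three lines end up as one string, which is the one-string-per-transcript format used for tokenizing.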

We now move on to extracting concordances. We begin by splitting the text simply by white space. This ensures that tags and markup remain intact, preventing accidental splitting. Additionally, we extend the context surrounding our target word or phrase. While the default is five tokens before and after the keyword, we opt to widen this context to 10 tokens. Furthermore, for improved organization and readability, we refine the file names. Instead of using the full path, we extract only the name of the text. This simplifies the presentation of results and enhances clarity when navigating through the corpus.

kwic_trans <- quanteda::kwic(

# tokenize transcripts

quanteda::tokens(transcripts_collapsed, what = "fasterword"),

# define search pattern

pattern = phrase("you know"),

# extend context

window = 10) %>%

# make it a data frame

as.data.frame() %>%

# clean docnames / filenames / text names

dplyr::mutate(docname = str_replace_all(docname, ".*/(.*?).txt", "\\1"))

docname | from | to | pre | keyword | post | pattern |

|---|---|---|---|---|---|---|

S1A-001 | 42 | 43 | let me jump <,> that was only the fourth time | you know | <#> It was great <&> laughter </&> <S1A-001$A> <#> What | you know |

S1A-001 | 140 | 141 | the whip <,> and over it went the last time | you know | <#> And Stephanie told her she was very determined and | you know |

S1A-001 | 164 | 165 | <&> laughter </&> because it had refused the other times | you know | <#> But Stephanie wouldn't let her give up on it | you know |

S1A-001 | 193 | 194 | and keep coming back <,> until <,> it jumped it | you know | <#> It was good <S1A-001$A> <#> Yeah I 'm not | you know |

S1A-001 | 402 | 403 | 'd be far better waiting <,> for that one <,> | you know | and starting anew fresh <S1A-001$A> <#> Yeah but I mean | you know |

S1A-001 | 443 | 444 | the best goes top of the league <,> <{> <[> | you know | </[> <S1A-001$A> <#> <[> So </[> </{> it 's like | you know |

S1A-001 | 484 | 485 | I 'm not sure now <#> We didn't discuss it | you know | <S1A-001$A> <#> Well it sounds like more money <S1A-001$B> <#> | you know |

S1A-001 | 598 | 599 | on Monday and do without her lesson on Tuesday <,> | you know | <#> But I was keeping her going cos I says | you know |

S1A-001 | 727 | 728 | to take it tomorrow <,> that she could take her | you know | the wee shoulder bag she has <S1A-001$A> <#> Mhm <S1A-001$B> | you know |

S1A-001 | 808 | 809 | <,> and <,> sort of show them around <,> uhm | you know | their timetable and <,> give them their timetable and show | you know |
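
The clean-up of the document names can be checked on a single URL. The sketch below applies the same regular expression as the code above and, as an alternative that is not used in the tutorial, base R's `basename` together with `tools::file_path_sans_ext`.

```r
library(stringr)

# one of the file URLs generated earlier
url <- "https://slcladal.github.io/data/ICEIrelandSample/S1A-001.txt"

# regular expression used above: keep what stands between the last / and .txt
via_regex <- stringr::str_replace_all(url, ".*/(.*?).txt", "\\1")

# alternative: strip the path, then strip the file extension
via_base <- tools::file_path_sans_ext(basename(url))

via_regex
via_base
```

Both routes return the bare text name. The second one does not depend on the extension being .txt.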

Custom Concordances

As R represents a fully-fledged programming environment, we can, of course, also write our own, customized concordance function. The code below shows how you could go about doing so. Note, however, that this function only works if you enter more than a single file.

mykwic <- function(txts, pattern, context) {

# activate packages

require(stringr)

# keep only texts that contain the search pattern

txts <- txts[stringr::str_detect(txts, pattern)]

conc <- sapply(txts, function(x) {

# determine length of text

lngth <- as.vector(unlist(nchar(x)))

# determine position of hits

idx <- str_locate_all(x, pattern)

idx <- idx[[1]]

ifelse(nrow(idx) >= 1, idx <- idx, return(NA))

# define start position of hit

token.start <- idx[,1]

# define end position of hit

token.end <- idx[,2]

# define start position of preceding context

pre.start <- ifelse(token.start-context < 1, 1, token.start-context)

# define end position of preceding context

pre.end <- token.start-1

# define start position of subsequent context

post.start <- token.end+1

# define end position of subsequent context

post.end <- ifelse(token.end+context > lngth, lngth, token.end+context)

# extract the texts defined by the positions

PreceedingContext <- substring(x, pre.start, pre.end)

Token <- substring(x, token.start, token.end)

SubsequentContext <- substring(x, post.start, post.end)

Id <- 1:length(Token)

conc <- cbind(Id, PreceedingContext, Token, SubsequentContext)

# return concordance

return(conc)

})

concdf <- do.call(rbind, conc) %>%

as.data.frame()

return(concdf)

}

We can now test whether this function works by searching for the sequence

you know in the transcripts that we have loaded earlier. One

difference between the kwic function provided by the

quanteda package and the customized concordance function

used here is that the kwic function uses the number of

words to define the context window, while the mykwic

function uses the number of characters or symbols instead (which is why

we use a notably higher number to define the context window).

kwic_youknow <- mykwic(transcripts_collapsed, "you know", 50)

Id | PreceedingContext | Token | SubsequentContext |

|---|---|---|---|

1 | to let me jump <,> that was only the fourth time | you know | <#> It was great <&> laughter </&> <S1A-001$A> <# |

2 | with the whip <,> and over it went the last time | you know | <#> And Stephanie told her she was very determine |

3 | ghter </&> because it had refused the other times | you know | <#> But Stephanie wouldn't let her give up on it |

4 | k and keep coming back <,> until <,> it jumped it | you know | <#> It was good <S1A-001$A> <#> Yeah I 'm not so |

5 | she 'd be far better waiting <,> for that one <,> | you know | and starting anew fresh <S1A-001$A> <#> Yeah but |

6 | er 's the best goes top of the league <,> <{> <[> | you know | </[> <S1A-001$A> <#> <[> So </[> </{> it 's like |
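
To make the character-based context window concrete, the sketch below repeats the core of the function on a single made-up string with a window of 10 characters. It uses `pmax` and `pmin` instead of `ifelse` to keep the window inside the string, which is a substitution made here and not part of the function above.

```r
library(stringr)

# made-up example string
x <- "It was good you know and it was great you know"
context <- 10

# start and end positions of all hits
hits <- stringr::str_locate_all(x, "you know")[[1]]

# up to 10 characters before each hit (never before position 1)
pre <- substring(x, pmax(hits[, "start"] - context, 1), hits[, "start"] - 1)

# the hits themselves
token <- substring(x, hits[, "start"], hits[, "end"])

# up to 10 characters after each hit (never past the end of the string)
post <- substring(x, hits[, "end"] + 1, pmin(hits[, "end"] + context, nchar(x)))

data.frame(pre, token, post)
```

The second hit stands at the very end of the string, so its subsequent context is empty. The first hit shows that a character window can cut through a word.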

As this concordance function only works for more than one text, we split the text into chapters and assign each section a name.

# read in text

text_split <- text %>%

stringr::str_squish() %>%

stringr::str_split("[CHAPTER]{7,7} [XVI]{1,7}\\. ") %>%

unlist()

text_split <- text_split[which(nchar(text_split) > 2000)]

# add names

names(text_split) <- paste0("text", 1:length(text_split))

# inspect data

nchar(text_split)

## text1 text2 text3 text4 text5 text6 text7 text8 text9 text10 text11

## 11331 10888 9137 13830 11767 13730 12563 13585 12527 11287 10292

## text12

## 11564

Now that we have named elements, we can search for the pattern poor Alice. Unlike the kwic function, the mykwic function is case-sensitive, so the pattern has to be capitalized exactly as in the text. Sections that do not contain any instance of the search pattern are dropped inside the function (by the str_detect step), so the result contains only the columns Id, PreceedingContext, Token, and SubsequentContext, with Id restarting at 1 for each section.

mykwic_pooralice <- mykwic(text_split, "poor Alice", 50)

Id | PreceedingContext | Token | SubsequentContext |

|---|---|---|---|

1 | ; “and even if my head would go through,” thought | poor Alice | , “it would be of very little use without my shoul |

2 | d on going into the garden at once; but, alas for | poor Alice | ! when she got to the door, she found she had forg |

3 | to be two people. “But it’s no use now,” thought | poor Alice | , “to pretend to be two people! Why, there’s hardl |

1 | !” “I’m sure those are not the right words,” said | poor Alice | , and her eyes filled with tears again as she went |

1 | lking such nonsense!” “I didn’t mean it!” pleaded | poor Alice | . “But you’re so easily offended, you know!” The M |

2 | onder if I shall ever see you any more!” And here | poor Alice | began to cry again, for she felt very lonely and |

You can go ahead and modify the customized concordance function to suit your needs.
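
One possible modification is sketched below. It is an illustration under stated assumptions, not a replacement for the function above: the name `mykwic_lapply`, the added `File` column, and the column name `PrecedingContext` are all introduced here. The sketch uses `lapply` and returns one data frame per text, so it should also work when only a single text is entered.

```r
library(stringr)

# hypothetical variant of mykwic (names are assumptions made for this sketch)
mykwic_lapply <- function(txts, pattern, context) {
  # fall back to generic names if the input is unnamed
  if (is.null(names(txts))) {
    names(txts) <- paste0("text", seq_along(txts))
  }
  conc <- lapply(seq_along(txts), function(i) {
    x <- txts[[i]]
    # start and end positions of all hits in this text
    idx <- stringr::str_locate_all(x, pattern)[[1]]
    # skip texts without hits
    if (nrow(idx) == 0) return(NULL)
    data.frame(
      File = names(txts)[i],
      Id = seq_len(nrow(idx)),
      PrecedingContext = substring(
        x, pmax(idx[, "start"] - context, 1), idx[, "start"] - 1),
      Token = substring(x, idx[, "start"], idx[, "end"]),
      SubsequentContext = substring(
        x, idx[, "end"] + 1, pmin(idx[, "end"] + context, nchar(x))),
      stringsAsFactors = FALSE
    )
  })
  # drop empty results and stack the remaining data frames
  conc <- Filter(Negate(is.null), conc)
  do.call(rbind, conc)
}

# usage with made-up texts; the second text contains no hit
res <- mykwic_lapply(
  c(a = "poor Alice cried", b = "nothing here"),
  pattern = "poor Alice",
  context = 5
)
res
```

The `File` column records which text each hit comes from, which makes it easier to trace a concordance line back to its source.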

Citation & Session Info

Schweinberger, Martin. 2024. Concordancing with R. Brisbane: The Language Technology and Data Analysis Laboratory (LADAL). url: https://ladal.edu.au/kwics.html (Version 2024.05.07).

@manual{schweinberger2024kwics,

author = {Schweinberger, Martin},

title = {Concordancing with R},

note = {https://ladal.edu.au/kwics.html},

year = {2024},

organization = {The Language Technology and Data Analysis Laboratory (LADAL)},

address = {Brisbane},

edition = {2024.05.07}

}

sessionInfo()

## R version 4.4.1 (2024-06-14 ucrt)

## Platform: x86_64-w64-mingw32/x64

## Running under: Windows 11 x64 (build 22631)

##

## Matrix products: default

##

##

## locale:

## [1] LC_COLLATE=English_Australia.utf8 LC_CTYPE=English_Australia.utf8

## [3] LC_MONETARY=English_Australia.utf8 LC_NUMERIC=C

## [5] LC_TIME=English_Australia.utf8

##

## time zone: Australia/Brisbane

## tzcode source: internal

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] flextable_0.9.7 here_1.0.1 writexl_1.5.1 stringr_1.5.1

## [5] dplyr_1.1.4 quanteda_4.1.0

##

## loaded via a namespace (and not attached):

## [1] sass_0.4.9 utf8_1.2.4 generics_0.1.3

## [4] fontLiberation_0.1.0 xml2_1.3.6 stringi_1.8.4

## [7] lattice_0.22-6 digest_0.6.37 magrittr_2.0.3

## [10] evaluate_1.0.1 grid_4.4.1 fastmap_1.2.0

## [13] rprojroot_2.0.4 jsonlite_1.8.9 Matrix_1.7-0

## [16] zip_2.3.1 stopwords_2.3 fansi_1.0.6

## [19] fontBitstreamVera_0.1.1 klippy_0.0.0.9500 textshaping_0.4.0

## [22] jquerylib_0.1.4 cli_3.6.3 rlang_1.1.4

## [25] fontquiver_0.2.1 withr_3.0.1 cachem_1.1.0

## [28] yaml_2.3.10 gdtools_0.4.0 tools_4.4.1

## [31] officer_0.6.7 uuid_1.2-1 fastmatch_1.1-4

## [34] assertthat_0.2.1 vctrs_0.6.5 R6_2.5.1

## [37] lifecycle_1.0.4 ragg_1.3.3 pkgconfig_2.0.3

## [40] pillar_1.9.0 bslib_0.8.0 data.table_1.16.2

## [43] glue_1.8.0 Rcpp_1.0.13 systemfonts_1.1.0

## [46] xfun_0.48 tibble_3.2.1 tidyselect_1.2.1

## [49] highr_0.11 rstudioapi_0.17.0 knitr_1.48

## [52] htmltools_0.5.8.1 rmarkdown_2.28 compiler_4.4.1

## [55] askpass_1.2.1 openssl_2.2.2

References

If you want to render the R Notebook on your machine, i.e. knitting the document to html or a pdf, you need to make sure that you have R and RStudio installed and you also need to download the bibliography file and store it in the same folder where you store the Rmd file.↩︎

This data is freely available after registration. To get access to the data represented in the Irish Component of the International Corpus of English (or any other component), you or your institution will need a valid licence. You need to send your request from an academic edu e-mail to prove your educational status. To get an academic licence with download access please fill in the licence form (PDF, 82 KB) and send it to

ice@es.uzh.ch. You should get the credentials for downloading here and unpacking the corpora within about 10 working days.↩︎